Passionate about creating compelling visual stories through the analysis of big data, Chloe Maraina is our Business Intelligence expert with an aptitude for data science and a vision for the future of data management and integration. Today, she unpacks the latest advancements in enterprise storage,

Australian retail conglomerate Wesfarmers has initiated a landmark strategic partnership with Google Cloud, signaling a profound shift in how artificial intelligence will shape the future of commerce. This collaboration is set to deploy Google's advanced Gemini platform across Wesfarmers' extensive

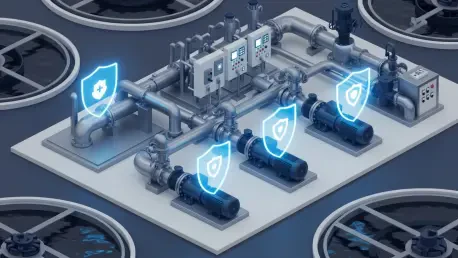

The silent hum of a water treatment facility's control room belies a vulnerability of national significance, where a few lines of malicious code could shut off clean drinking water for millions of Americans. This stark scenario is no longer a work of fiction but a driving force behind a

As a Business Intelligence expert with a passion for transforming big data into compelling visual stories, Chloe Maraina has a unique vantage point on the infrastructure powering the AI revolution. With data science at her core and a clear vision for the future of data integration, she joins us to

The monumental task of broadcasting the Olympic Games to a global audience has historically relied on sprawling, hardware-intensive operations, but a profound technological shift is now rapidly reshaping this landscape from the ground up. Olympic Broadcasting Services (OBS) is at the forefront of

In the highly competitive Software-as-a-Service (SaaS) landscape, the ability to deliver insightful, seamlessly integrated analytics is no longer a luxury but a critical differentiator that directly influences user retention and product value. For the second consecutive year, Qrvey, a company