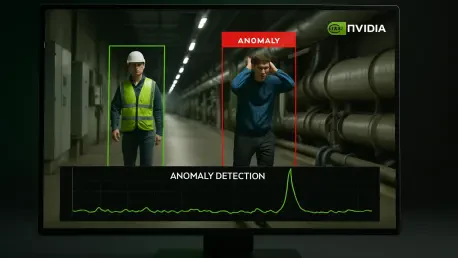

In today’s fast-paced industrial landscape, the ability to pinpoint anomalies in vast streams of data is no longer a luxury but a necessity, as missing a critical deviation can lead to catastrophic consequences in sectors ranging from healthcare to aerospace. Imagine a hospital intensive care unit overwhelmed by incessant false alarms, or a spacecraft mission jeopardized by an unnoticed glitch in telemetry data. These scenarios highlight the urgent need for reliable anomaly detection, a process that identifies unusual patterns or events in time-series data to ensure safety, efficiency, and operational continuity.

NVIDIA’s NV-Tesseract-AD emerges as a groundbreaking solution, designed to navigate the messy, unpredictable nature of real-world data with unparalleled precision. This advanced model, an evolution of its predecessor NV-Tesseract, promises to redefine how industries address the persistent challenges of detecting true anomalies amidst noise and complexity. By leveraging cutting-edge techniques, it tackles issues that have long plagued traditional methods, offering a lifeline to operators drowning in irrelevant alerts or struggling to catch rare but critical events.

The significance of this technology lies not just in its technical prowess but in its potential to transform decision-making across high-stakes environments. From reducing alarm fatigue in medical settings to safeguarding mission-critical systems in space exploration, NV-Tesseract-AD stands poised to deliver actionable insights where they matter most. This article delves into the core innovations, real-world applications, and future possibilities of this remarkable tool, shedding light on its role in shaping the next era of data-driven reliability.

Understanding the Challenges of Anomaly Detection

Tackling the Mess of Real-World Data

Why Time-Series Data Is So Tricky

Time-series data, the primary focus of NV-Tesseract-AD, presents a unique set of hurdles due to its dynamic and often erratic nature, making anomaly detection a formidable challenge across industries. Unlike static datasets, this type of data evolves over time, influenced by factors such as equipment degradation, human behavior, or shifting operational conditions. For instance, a semiconductor sensor might gradually drift as it ages, while a patient’s vital signs fluctuate with daily activities or stress levels. These changes, known as non-stationarity, mean that what is considered “normal” today may not be tomorrow, complicating the task of identifying true deviations. On top of that, noise—random or irrelevant variations—often obscures meaningful signals, and labeled anomalies for training models are scarce and expensive to obtain. Even when expert annotations are available, they can be inconsistent, leading to unreliable outcomes. NV-Tesseract-AD steps into this fray with a design tailored to handle such complexities, aiming to discern critical patterns where traditional tools falter under the weight of ever-shifting data landscapes.

The Persistent Data Drift Dilemma

Beyond non-stationarity, the phenomenon of data drift adds another layer of difficulty, as underlying patterns can shift due to external or internal changes, rendering static models obsolete. In aerospace, for example, spacecraft telemetry varies across different mission phases, while in manufacturing, machinery wear alters sensor readings over months. These shifts are not anomalies themselves but can mask genuine issues if a system fails to adapt. Compounding the problem is the scarcity of ground-truth data, as real anomalies are rare events, and collecting enough examples to train robust models is often impractical. False positives, where normal variations are flagged as problems, further erode trust in detection systems, especially in high-stakes settings where every alert demands attention. NV-Tesseract-AD addresses these concerns by prioritizing adaptability, ensuring it can evolve with changing data patterns without being tripped up by natural variations or overwhelmed by the lack of labeled examples, setting a new benchmark for resilience in anomaly detection.

Shortcomings of Older Methods

Limitations of Traditional Statistical Tools

Traditional statistical methods, once the backbone of anomaly detection, often fall short when confronted with the realities of modern industrial data, particularly under conditions of drift or noise. These approaches typically assume that the data is stationary, with a mean and variance that stay fixed over time, an assumption that rarely holds in dynamic environments. When equipment ages or operational contexts shift, such as in cloud systems experiencing sudden traffic spikes, a threshold fitted on past data no longer matches current behavior, resulting in either missed anomalies or a barrage of false alarms. Many of these methods also treat each variable in isolation, which limits their effectiveness in complex scenarios like monitoring spacecraft with thousands of interacting data channels. This rigidity has long frustrated operators who need tools capable of navigating real-world unpredictability, highlighting the urgent demand for more flexible solutions that NV-Tesseract-AD aims to provide through its innovative framework.
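
To make this failure mode concrete, the following minimal Python sketch fits a mean and standard deviation once and then applies a fixed three-sigma rule to a synthetic sensor signal that drifts slowly upward. The signal, the function name `static_threshold_flags`, and the parameter values are illustrative assumptions and are not drawn from any NVIDIA tooling.

```python
import statistics

def static_threshold_flags(values, train_size, k=3.0):
    """Flag points more than k standard deviations from a mean fitted once on the first train_size points."""
    baseline = values[:train_size]
    mean = statistics.fmean(baseline)
    std = statistics.pstdev(baseline)
    return [abs(v - mean) > k * std for v in values]

# Synthetic sensor (assumed data): a small repeating wiggle plus slow upward drift, with no real anomaly.
wiggle = [0.0, 0.5, -0.5, 0.25, -0.25]
series = [wiggle[i % 5] + 0.01 * i for i in range(500)]

flags = static_threshold_flags(series, train_size=100)
print("alerts in the training period:", sum(flags[:100]))
print("alerts in the last 200 readings:", sum(flags[300:]))
```

Although the series contains no genuine anomaly, every late reading is flagged once drift carries the signal past the frozen threshold, which is the kind of false-alarm barrage a static model tends to produce.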

Struggles of Early Deep Learning Models

Even early deep learning attempts, including the initial version of NV-Tesseract-AD, encountered significant obstacles when applied to noisy or univariate datasets, often yielding unreliable or trivial results. Tested on challenging public datasets like Genesis and Calit2, these models struggled to distinguish meaningful anomalies from background clutter, especially when data lacked clear patterns or sufficient labeled examples. Their performance suffered in environments with high noise levels, leading to missed critical events or excessive alerts that overwhelmed users. This exposed a critical gap in handling the intricacies of time-series data, particularly in multivariate contexts where interactions between variables add layers of complexity. The evolution to NV-Tesseract-AD 2.0 reflects a deliberate effort to overcome these early shortcomings, incorporating advanced techniques to ensure greater robustness and reliability across diverse industrial applications.

Innovations Powering NV-Tesseract-AD

Cutting-Edge Techniques for Better Detection

Diffusion Modeling for Normalcy

Central to the transformative power of NV-Tesseract-AD 2.0 is diffusion modeling, a technique originally honed in image processing but ingeniously adapted for time-series anomaly detection. This approach operates by iteratively corrupting data with noise and then learning to reconstruct the original signal, enabling the model to deeply understand what constitutes “normal” behavior within a dataset. In reconstruction-based detectors of this kind, normal patterns are typically rebuilt well and unusual ones poorly, so an observation that differs sharply from its reconstruction can be scored as a potential anomaly. Even subtle deviations, such as a minor irregularity in a patient’s heartbeat or a slight voltage drift in satellite telemetry, become detectable against this learned baseline. This granular insight sets the model apart from earlier methods that often overlooked faint but critical signals amidst noise. The ability to map out a normal behavior manifold ensures that the system can flag deviations with a high degree of accuracy, making it invaluable in environments where precision is non-negotiable and every anomaly could signal a looming crisis.
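
The corrupt-then-reconstruct idea can be sketched in a few lines of Python. The forward step below uses the standard diffusion formulation, x_t = sqrt(alpha_bar) * x_0 + sqrt(1 - alpha_bar) * noise. The "denoiser" is deliberately a toy: a rolling median stands in for the trained neural network a real diffusion model would use, so this shows only the scoring logic and makes no claim about NVIDIA's architecture. All function names and parameter values are assumptions chosen for illustration.

```python
import math
import random
import statistics

def forward_noise(x, alpha_bar, rng):
    """Forward diffusion step: scale the signal down and mix in Gaussian noise."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [a * v + b * rng.gauss(0.0, 1.0) for v in x]

def toy_denoiser(x_t, alpha_bar, half_window=5):
    """Stand-in for a learned denoiser (assumption): rolling median, rescaled to undo the signal scaling."""
    scale = math.sqrt(alpha_bar)
    out = []
    for i in range(len(x_t)):
        lo, hi = max(0, i - half_window), min(len(x_t), i + half_window + 1)
        out.append(statistics.median(x_t[lo:hi]) / scale)
    return out

def anomaly_scores(x, alpha_bar=0.99, draws=8, seed=0):
    """Average absolute gap between each point and its reconstruction over several noise draws."""
    rng = random.Random(seed)
    totals = [0.0] * len(x)
    for _ in range(draws):
        recon = toy_denoiser(forward_noise(x, alpha_bar, rng), alpha_bar)
        for i, (v, r) in enumerate(zip(x, recon)):
            totals[i] += abs(v - r)
    return [t / draws for t in totals]

# Assumed test signal: a clean sine wave with one injected spike at index 100.
series = [math.sin(2 * math.pi * i / 50) for i in range(200)]
series[100] += 3.0

scores = anomaly_scores(series)
print("highest-scoring index:", scores.index(max(scores)))
```

Because the stand-in denoiser pulls every point back toward the local pattern, ordinary samples reconstruct closely while the injected spike does not, so its reconstruction gap dominates the scores.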

Refining Detection with Generative Precision

Diffusion modeling in NV-Tesseract-AD goes beyond basic pattern recognition by leveraging generative AI principles to enhance its detection capabilities across varied contexts. Unlike discriminative models that merely classify data points, this generative approach builds a comprehensive picture of typical data distributions, allowing for a nuanced understanding of temporal patterns. For instance, in a manufacturing setting, it can discern whether a sensor reading’s shift is a harmless drift due to wear or a sign of impending failure. This depth of analysis reduces the risk of both false positives and negatives, addressing a long-standing pain point in anomaly detection. By focusing on reconstructing normalcy, the model equips industries with a tool that not only identifies outliers but does so with a contextual awareness that mirrors human judgment, paving the way for more informed and timely interventions.

Curriculum Learning for Stability

Building Robustness Step by Step

Training a model on the chaotic, noisy data typical of industrial environments is no small feat, but NV-Tesseract-AD employs curriculum learning to ensure stability and effectiveness during this process. This method starts with simpler tasks, using lightly corrupted data to establish foundational learning, before progressively introducing more complex challenges with higher noise levels and masking ratios. Such a structured, easy-to-hard progression prevents the model from becoming overwhelmed or collapsing under the pressure of unpredictable real-world inputs. In practical terms, this means the system can handle everything from subtle shifts in patient vitals to erratic spikes in cloud metrics without losing its footing. This deliberate training strategy marks a significant departure from erratic or one-size-fits-all approaches, offering a pathway to generalization that is critical for deployment across diverse sectors.
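
An easy-to-hard schedule of this kind could look like the Python sketch below. It ramps the noise level and masking ratio linearly across stages and applies them to a training window. The linear ramp, the specific ranges, and the names `curriculum_schedule` and `corrupt` are assumptions for illustration; the article does not specify the schedule NVIDIA uses.

```python
import random

def curriculum_schedule(num_stages, noise_range=(0.05, 0.5), mask_range=(0.1, 0.6)):
    """Linearly ramp noise std and masking ratio from the easy end to the hard end."""
    stages = []
    for s in range(num_stages):
        frac = s / (num_stages - 1) if num_stages > 1 else 1.0
        stages.append({
            "stage": s,
            "noise_std": noise_range[0] + frac * (noise_range[1] - noise_range[0]),
            "mask_ratio": mask_range[0] + frac * (mask_range[1] - mask_range[0]),
        })
    return stages

def corrupt(window, noise_std, mask_ratio, rng):
    """Zero out a random subset of points and add Gaussian noise to the rest."""
    n_mask = round(mask_ratio * len(window))
    masked = set(rng.sample(range(len(window)), n_mask))
    corrupted = [0.0 if i in masked else v + rng.gauss(0.0, noise_std)
                 for i, v in enumerate(window)]
    return corrupted, masked

rng = random.Random(0)
clean_window = [1.0] * 20  # placeholder training window (assumed data)
for stage in curriculum_schedule(4):
    corrupted, masked = corrupt(clean_window, stage["noise_std"], stage["mask_ratio"], rng)
    # A real training loop would fit the model at this difficulty before moving to the next stage.
    print(stage["stage"], round(stage["noise_std"], 3), len(masked))
```

Each later stage hides more of the window and perturbs what remains more strongly, so the reconstruction task gets harder only after the easier version has been seen.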

Enhancing Generalization for Unpredictable Scenarios

The strength of curriculum learning in NV-Tesseract-AD lies in its ability to prepare the model for the unexpected, ensuring it remains reliable even when faced with data it hasn’t explicitly encountered during training. By gradually increasing the complexity, the system learns to adapt to a wide range of conditions, from sudden anomalies to slow, creeping deviations that might otherwise go unnoticed. This adaptability proves essential in settings like aerospace, where telemetry data can shift dramatically between mission phases, or in healthcare, where patient conditions evolve unpredictably. The result is a model that doesn’t just memorize patterns but understands underlying dynamics, reducing the likelihood of errors when conditions change. This focus on robust generalization underscores NVIDIA’s commitment to creating a tool that thrives under the messy realities of industrial data, rather than faltering when theory meets practice.

Adaptive Thresholding with SCS and MACS

Tailoring Alerts with Segmented Confidence Sequences

One of the standout innovations in NV-Tesseract-AD is its use of Segmented Confidence Sequences (SCS), a patent-pending method that revolutionizes how anomalies are flagged by moving away from static thresholds. SCS divides data into locally stable regimes, applying tailored confidence bounds to each segment to ensure alerts are contextually relevant. This means that in environments with shifting baselines—such as a spacecraft transitioning between operational modes—the system adjusts its sensitivity to avoid flagging normal variations as problems. By focusing on localized stability, SCS minimizes false positives, a common issue that erodes trust in detection systems. This dynamic approach ensures that operators receive only meaningful notifications, enhancing decision-making in high-pressure scenarios where clarity is paramount and every alert must count.
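
The details of SCS are not public, so the Python sketch below only illustrates the general idea of regime-local thresholds. It uses a simple two-window mean comparison to find regime boundaries, then a robust median and MAD band inside each regime as a stand-in for confidence bounds. The segmentation rule, the band formula, and every name and parameter here are assumptions, not the patent-pending method.

```python
import statistics

def find_boundaries(values, window=10, jump=3.0):
    """Mark a regime boundary where the mean of the next window differs most from the previous one."""
    n = len(values)
    diffs = [0.0] * n
    for i in range(window, n - window + 1):
        before = statistics.fmean(values[i - window:i])
        after = statistics.fmean(values[i:i + window])
        diffs[i] = abs(after - before)
    boundaries, i = [], 0
    while i < n:
        if diffs[i] > jump:
            j = i
            while j < n and diffs[j] > jump:
                j += 1
            # Within a run of large differences, keep only the sharpest point.
            boundaries.append(max(range(i, j), key=lambda t: diffs[t]))
            i = j
        else:
            i += 1
    return boundaries

def segmented_flags(values, boundaries, k=4.0):
    """Flag points outside median +/- k * 1.4826 * MAD, computed separately per segment."""
    flags = [False] * len(values)
    edges = [0] + list(boundaries) + [len(values)]
    for lo, hi in zip(edges, edges[1:]):
        segment = values[lo:hi]
        center = statistics.median(segment)
        mad = statistics.median([abs(v - center) for v in segment])
        half_width = k * 1.4826 * mad
        for i in range(lo, hi):
            flags[i] = abs(values[i] - center) > half_width
    return flags

# Assumed data: two operating modes (level 0, then level 10) with one spike inside the first mode.
wiggle = [0.0, 0.5, -0.5, 0.25, -0.25]
series = [wiggle[i % 5] + (10.0 if i >= 100 else 0.0) for i in range(200)]
series[50] += 8.0

boundaries = find_boundaries(series)
flags = segmented_flags(series, boundaries)
print("boundaries:", boundaries)
print("flagged indices:", [i for i, f in enumerate(flags) if f])
```

In this toy case the mode change is treated as a new baseline and raises no alert, while the spike inside the first mode is flagged even though its value of 8 would look unremarkable against a single threshold fitted to the whole series.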

Multi-Scale Analysis for Comprehensive Insight

Complementing SCS is Multi-Scale Adaptive Confidence Segments (MACS), another pioneering technique that analyzes data across various time scales—short, medium, and long—using attention mechanisms to balance sensitivity and control over false alarms. This multi-scale perspective allows NV-Tesseract-AD to detect both sudden spikes, such as an API error burst in cloud operations, and gradual trends, like a memory leak developing over weeks. By considering different temporal lenses, MACS ensures no anomaly slips through due to mismatched timing, a frequent pitfall of rigid systems. Its unsupervised nature and interpretability further empower users to understand why an alert was triggered, fostering trust in critical applications. Together, SCS and MACS create a flexible, responsive framework that adapts to the unique rhythms of each dataset, setting a new standard for precision in anomaly detection.
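
A rough Python sketch of multi-scale scoring follows. It scores each point at a short, medium, and long window against a reference baseline and blends the three scores with softmax weights, used here as a crude stand-in for an attention mechanism. The window sizes, the threshold, the weighting scheme, and all names are assumptions for illustration and do not describe how MACS is implemented.

```python
import math
import statistics

def scale_scores(values, baseline, window):
    """Z-score of the trailing-window mean against the baseline mean and standard error."""
    mu = statistics.fmean(baseline)
    sigma = statistics.pstdev(baseline)
    scores = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        standard_error = sigma / math.sqrt(len(chunk))
        scores.append(abs(statistics.fmean(chunk) - mu) / standard_error)
    return scores

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def multi_scale_scores(values, baseline, windows=(1, 10, 50)):
    """Blend per-scale scores, giving more weight to whichever scale reacts most strongly."""
    per_scale = [scale_scores(values, baseline, w) for w in windows]
    combined = []
    for scores_at_i in zip(*per_scale):
        weights = softmax(scores_at_i)
        combined.append(sum(w * s for w, s in zip(weights, scores_at_i)))
    return combined

# Assumed data: a one-sample burst at index 150 and a slow upward leak starting at index 300.
wiggle = [0.0, 0.5, -0.5, 0.25, -0.25]
series = [wiggle[i % 5] for i in range(400)]
series[150] += 4.0
for i in range(300, 400):
    series[i] += 0.005 * (i - 300)

baseline = series[:100]
threshold = 5.0
combined = multi_scale_scores(series, baseline)
flags = [s > threshold for s in combined]
short_only = scale_scores(series, baseline, 1)
print("burst flagged:", flags[150])
print("leak flagged by combined score:", any(flags[300:]))
print("leak flagged by short scale alone:", any(s > threshold for s in short_only[300:]))
```

In this example the short window catches the burst, while the slow leak never crosses the threshold at the short scale and is only picked up once the long window accumulates enough evidence, which is the timing mismatch a single fixed scale would suffer from.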

Real-World Impact Across Industries

Transforming Operations with Precision

Healthcare: Cutting False Alarms

In the high-stakes environment of intensive care units, where false alarms from monitoring equipment can overwhelm medical staff, NV-Tesseract-AD offers a transformative solution by learning patient-specific baselines. Rather than triggering alerts for every minor fluctuation in vital signs, the model dynamically adjusts thresholds to focus on genuine anomalies, such as an unexpected drop in heart rate that could signal distress. This reduction in irrelevant notifications allows clinicians to concentrate on critical interventions without the constant distraction of non-issues. The impact is profound, as alarm fatigue—a well-documented problem in healthcare—diminishes, enabling better patient outcomes through focused, timely responses. NV-Tesseract-AD’s ability to personalize detection in this way demonstrates its potential to enhance care quality in settings where every second matters.

Prioritizing Clinician Focus in Hospitals

Beyond merely reducing alerts, NV-Tesseract-AD’s application in healthcare reshapes the daily experience of medical professionals by fostering a more manageable workflow. Hospitals often grapple with systems that err on the side of caution, flooding staff with warnings that dilute attention to real emergencies. By leveraging its adaptive techniques, this model filters out noise while maintaining sensitivity to true threats, ensuring that a subtle but dangerous change in a patient’s condition doesn’t go unnoticed. This balance of precision and restraint not only improves operational efficiency but also supports staff well-being by reducing stress from constant interruptions. As healthcare systems increasingly rely on data-driven tools, NV-Tesseract-AD stands out as a partner that aligns with clinical priorities, paving the way for smarter, more humane monitoring practices.

Aerospace: Clarity in Complexity

Distinguishing Threats from Routine Shifts

Spacecraft operations generate thousands of data channels, each with potential fluctuations that could signal disaster or simply reflect normal mission phases, creating a daunting task for anomaly detection. NV-Tesseract-AD excels in this arena by distinguishing between expected regime shifts—such as a spacecraft adjusting modes—and true anomalies, like an unusual torque variation in a rover’s wheel that might indicate mechanical failure. This contextual awareness prevents teams from wasting resources on false positives while ensuring critical issues are flagged promptly. In an industry where a missed signal could jeopardize an entire mission, the model’s ability to provide clarity amidst complexity offers a vital safeguard, enabling engineers to act decisively on real threats without second-guessing every alert.

Safeguarding Missions with Precision

The precision of NV-Tesseract-AD in aerospace extends beyond differentiation to enhance overall mission reliability by integrating seamlessly into control center workflows. Telemetry data is notoriously intricate, with variables interacting in unpredictable ways across vast distances and harsh conditions. The model’s multi-scale analysis ensures that both immediate anomalies and longer-term trends are captured, providing a comprehensive view that supports preemptive maintenance or emergency responses. For instance, identifying a gradual power drain before it becomes critical can save a mission from failure. This forward-thinking approach aligns with the aerospace sector’s need for tools that not only react to problems but anticipate them, reinforcing safety and success in one of the most unforgiving environments for technology.

Cloud Operations: Managing the Flood

Streamlining Alerts in Vast Systems

Cloud operations involve monitoring an overwhelming volume of metrics, from server performance to API error rates, often leaving operators buried under a deluge of alerts that obscure genuine issues. NV-Tesseract-AD cuts through this flood by intelligently identifying critical anomalies—such as a sudden burst of errors or a creeping memory leak—without inundating teams with irrelevant notifications. This targeted approach ensures that incident response times improve dramatically, as staff can focus on problems that truly impact system stability. In an era where downtime translates to significant financial and reputational loss, the model’s capacity to streamline alerts into actionable insights offers a competitive edge, keeping digital infrastructures running smoothly under intense demand.

Enhancing Scalability for Digital Giants

Scalability is another area where NV-Tesseract-AD proves its worth in cloud environments, adapting to the sheer magnitude of data generated by modern digital ecosystems without sacrificing accuracy. As cloud platforms expand, handling thousands of simultaneous metrics becomes a logistical nightmare for static detection systems, often leading to missed signals or alert fatigue. This model’s adaptive thresholding and multi-scale analysis allow it to maintain performance even as data volumes grow, ensuring that both abrupt failures and subtle degradations are caught early. This capability supports cloud providers in maintaining service quality for millions of users, from streaming platforms to enterprise solutions. By aligning with the scalability needs of the digital age, NV-Tesseract-AD positions itself as an indispensable tool for managing the backbone of today’s interconnected world.

Performance and Future Potential

Proven Results and What’s Next

Testing Success on Tough Datasets

When rigorously evaluated on notoriously noisy public datasets like Genesis and Calit2, NV-Tesseract-AD 2.0 demonstrated a marked improvement over its initial version, solidifying its reputation as a reliable anomaly detection tool. The updated model excelled at separating meaningful outliers from background clutter, a task that earlier iterations often bungled by either overreacting to noise or missing critical events. This enhanced performance stems from the integration of diffusion modeling and adaptive thresholding, which together provide a robust filter for distinguishing true anomalies in chaotic data environments. Such reliability is paramount in industries where trust in a system’s output can mean the difference between a timely intervention and a costly oversight, affirming the model’s readiness for mission-critical applications across diverse sectors.

Building Confidence Through Validation

The success on challenging datasets is more than a technical milestone for NV-Tesseract-AD; it represents a foundation of confidence for industries hesitant to adopt AI-driven solutions due to past inconsistencies. Detailed testing revealed not only improved accuracy but also a reduction in false positives, a critical factor for operators who rely on alerts to make high-stakes decisions. For example, in settings where a false alarm could divert resources from a real emergency, this precision builds user trust. The model’s ability to consistently perform under adverse conditions—where noise and sparsity dominate—suggests it can handle the unpredictable nature of real-world data with finesse. This validation phase underscores NVIDIA’s focus on delivering a tool that meets rigorous standards, ensuring it can be deployed with assurance in environments where errors are not an option.
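
Accuracy and false-positive claims of this kind are usually expressed through standard detection metrics. The short Python sketch below computes precision, recall, and false-alarm rate from a detector's flags and ground-truth labels. The two sets of flags are made-up toy values used to show the arithmetic; they are not results from the Genesis or Calit2 evaluations.

```python
def detection_metrics(flags, labels):
    """Return precision, recall, and false-alarm rate for boolean flags against boolean labels."""
    tp = sum(f and l for f, l in zip(flags, labels))
    fp = sum(f and not l for f, l in zip(flags, labels))
    fn = sum((not f) and l for f, l in zip(flags, labels))
    tn = sum((not f) and (not l) for f, l in zip(flags, labels))
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "false_alarm_rate": fp / (fp + tn) if fp + tn else 0.0,
    }

# Toy example (assumed values): two true anomalies among ten readings.
labels      = [False, False, True, False, False, False, True, False, False, False]
noisy_flags = [True,  False, True, False, True,  False, True, False, False, True]
quiet_flags = [False, False, True, False, False, False, True, False, False, False]

noisy = detection_metrics(noisy_flags, labels)
quiet = detection_metrics(quiet_flags, labels)
print("noisy detector:", noisy)
print("quiet detector:", quiet)
```

Both toy detectors catch every true anomaly, so recall alone cannot separate them; the difference an operator feels shows up in precision and false-alarm rate.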

Looking Ahead with Collaboration

Refinement Through User Engagement

Looking to the future, NVIDIA has positioned NV-Tesseract-AD for ongoing refinement by introducing a customer preview and evaluation license, allowing industries to test and integrate the model into their specific workflows. This hands-on approach enables users to provide direct feedback on performance in real-world scenarios, from manufacturing floors to hospital wards, ensuring that subsequent iterations address practical pain points. By opening the system to diverse testing environments, the development process becomes a collaborative effort, aligning the technology with the nuanced demands of different sectors. This strategy reflects a commitment to creating a solution that evolves based on actual user experiences, rather than remaining confined to theoretical improvements, fostering a tool that truly resonates with operational needs.

Industry Partnerships Shaping the Path Forward

Further amplifying this collaborative spirit, events like SEMICON West provide a platform for NVIDIA to engage directly with industry stakeholders, particularly in fields like manufacturing where time-series data drives predictive maintenance. These interactions offer opportunities to showcase NV-Tesseract-AD’s capabilities while gathering insights on sector-specific challenges, such as detecting anomalies in semiconductor production lines. Such partnerships are crucial for tailoring the model to unique industrial contexts, ensuring it remains versatile yet precise. As broader evaluations continue, the emphasis on co-development with users signals a forward-thinking approach, aiming to refine the technology into a cornerstone of next-generation anomaly detection. This focus on dialogue and adaptation hints at a future where the model not only meets current demands but also anticipates emerging needs in an increasingly data-driven world.