With a sharp eye for data science and a vision for the future of data integration, Chloe Maraina has become a leading voice in Business Intelligence, specializing in how massive datasets can tell compelling visual stories. Today, we delve into the seismic shift agentic AI is causing in cloud computing. Chloe will guide us through the challenges this new paradigm presents, exploring how autonomous systems are stress-testing long-held assumptions about networking, security, cost management, and architecture. We will discuss the practical steps organizations must take to adapt, moving from reactive controls to a proactive, disciplined approach to governance and design, ensuring that innovation doesn’t spiral into chaos.

Given that agentic AI can create bursty and unpredictable network traffic, traditional perimeter security is becoming obsolete. How should teams concretely adapt their network controls for this new reality, and what specific steps can they take to gain visibility into this “east-west” traffic between services?

You’re right, the old castle-and-moat approach is not just obsolete; it’s actively dangerous in the face of agentic AI. These agents don’t follow a simple request-response path. They pivot, explore, and orchestrate across dozens of internal systems, creating a storm of east-west traffic that can look like a denial-of-service attack but is actually just normal operation. The first concrete step is to move to a model of fine-grained segmentation, treating every service as its own micro-perimeter. This means implementing policies that govern service-to-service communication directly, not just traffic at the edge. To gain visibility, you must treat internal network telemetry as a first-class citizen, coupling it tightly with runtime controls. You need to see and understand the intent behind an internal API call, not just the source and destination IP address, so you can distinguish a legitimate workflow from pathological behavior before it cascades into an outage.
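To make the idea of service-to-service policy coupled with telemetry concrete, here is a minimal sketch in Python. It assumes a default-deny allowlist keyed on caller, target, and declared action; the service names, the `InternalCall` type, and the `evaluate` function are hypothetical and only illustrate the shape of such a control, not any particular product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InternalCall:
    source: str       # identity of the calling service or agent
    destination: str  # identity of the target service
    action: str       # declared intent of the call, not just an address


# Hypothetical allowlist: any (source, destination, action) not listed is denied.
ALLOWED_CALLS = {
    ("billing-agent", "orders-api", "read_orders"),
    ("billing-agent", "invoice-api", "create_invoice"),
}


def evaluate(call: InternalCall) -> dict:
    """Return a telemetry record that carries the policy decision with it."""
    allowed = (call.source, call.destination, call.action) in ALLOWED_CALLS
    return {
        "source": call.source,
        "destination": call.destination,
        "action": call.action,
        "decision": "allow" if allowed else "deny",
    }
```

Because every record names the action as well as the two endpoints, a denied `delete_orders` call from an otherwise trusted agent shows up as an explicit policy event rather than as anonymous internal traffic.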

When an autonomous agent acts like an operator with superhuman speed, static permissions become a major risk. What does a robust, identity-based security framework for these agents look like in practice? Could you walk us through the lifecycle of a short-lived credential for an agent task?

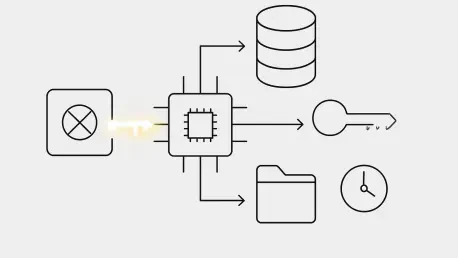

Treating an agent like just another application is a critical mistake. It’s more accurate to think of it as a privileged operator working at machine speed, which is a terrifying thought if you’re still using static, long-lived API keys. A robust, identity-based framework starts by assigning every agent a unique, explicit identity. From there, you build tight authorization boundaries based on the principle of least privilege for a specific task, not for the agent’s entire potential existence.

Let’s walk through a lifecycle. An agent is tasked with analyzing and archiving a dataset. It first authenticates itself to an identity provider and requests a temporary, short-lived credential scoped only to read from a specific data store and write to a specific archive location. This credential might be valid for only five minutes, just long enough to complete the job. The agent performs the task, and every API call it makes is logged against that temporary credential. Once the five minutes are up, the credential expires automatically; if the task finishes sooner, the credential should be revoked at that point. Either way, it is useless afterward. If it were ever compromised, the blast radius would be incredibly small and the window of opportunity minuscule. This entire process (request, grant, use, expire, audit) is the essential control loop for autonomous operations.
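The request, grant, use, expire, audit loop can be sketched in a few lines of Python. This is an illustrative toy, not a real identity provider: the `Issuer` class, the scope strings, and the injectable `clock` parameter are all assumptions made for the example, and a production system would rely on its cloud provider's or identity platform's token service instead.

```python
import secrets
import time
from dataclasses import dataclass, field


@dataclass
class Credential:
    agent_id: str
    scopes: frozenset
    expires_at: float
    token: str = field(default_factory=lambda: secrets.token_hex(16))
    revoked: bool = False


class Issuer:
    """Hypothetical in-memory issuer that grants scoped, short-lived credentials."""

    def __init__(self, clock=time.monotonic):
        self.clock = clock    # injectable so expiry can be exercised without waiting
        self.audit_log = []   # every grant, use, and revoke is recorded here

    def grant(self, agent_id, scopes, ttl_seconds=300):
        cred = Credential(agent_id, frozenset(scopes), self.clock() + ttl_seconds)
        self.audit_log.append(("grant", agent_id, tuple(sorted(scopes))))
        return cred

    def authorize(self, cred, scope):
        ok = (
            not cred.revoked
            and self.clock() < cred.expires_at
            and scope in cred.scopes
        )
        self.audit_log.append(("use", cred.agent_id, scope, ok))
        return ok

    def revoke(self, cred):
        cred.revoked = True
        self.audit_log.append(("revoke", cred.agent_id))
```

A caller would grant a credential with something like `issuer.grant("archiver", {"read:dataset", "write:archive"})`, check each operation with `authorize`, and call `revoke` as soon as the task completes. Anything outside the granted scopes, or attempted after the five-minute window, is refused and still lands in the audit log.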

Agentic systems can make cloud consumption highly unpredictable, potentially turning an AI strategy into an uncontrolled spending problem. Beyond basic tagging, what near-real-time cost visibility tools and automated guardrails are most effective, and what is an example of a useful metric for measuring value?

I’ve seen the panic in a team’s eyes when they realize an agent has burned through a month’s budget in a single afternoon. The old FinOps playbook of monthly showback reports is far too slow for this world. You need near-real-time visibility, with dashboards that update in minutes, not days. More importantly, you need automated guardrails that act as circuit breakers. For example, you can set a hard limit on the number of premium API calls an agent can make per hour or cap the total compute resources a specific workflow can provision. If a limit is hit, the system should automatically halt the agent and trigger an alert, preventing a financial leak from becoming a flood. As for metrics, you have to move beyond tracking raw cloud spend. A powerful metric is “cost per resolved issue” or “cost per successful customer outcome.” If you can’t articulate the value you’re getting per unit of agent activity in plain language, you don’t really have an AI strategy—you have a spending problem waiting to happen.
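As a rough illustration of a circuit-breaker guardrail and a value metric, the following Python sketch caps premium API calls per rolling hour and computes cost per resolved issue. The class and function names, the one-hour window, and the halt-until-reviewed behavior are assumptions chosen for the example; real limits would come from your own budget policy.

```python
from collections import deque


class AgentHalted(RuntimeError):
    """Raised when a guardrail trips; the caller should stop the agent and alert."""


class CallBudget:
    """Hypothetical guardrail: at most max_calls_per_hour in any rolling hour."""

    def __init__(self, max_calls_per_hour: int):
        self.max_calls = max_calls_per_hour
        self.calls = deque()   # timestamps (seconds) of recent calls
        self.halted = False

    def record_call(self, now: float) -> None:
        if self.halted:
            raise AgentHalted("agent is halted pending review")
        # Forget calls that fell out of the one-hour window.
        while self.calls and now - self.calls[0] >= 3600:
            self.calls.popleft()
        if len(self.calls) >= self.max_calls:
            self.halted = True   # stay tripped until a human resets it
            raise AgentHalted("hourly premium-call limit reached")
        self.calls.append(now)


def cost_per_resolved_issue(total_spend: float, resolved_issues: int):
    """Return spend per resolved issue, or None when nothing was resolved."""
    if resolved_issues == 0:
        return None
    return total_spend / resolved_issues
```

Keeping the breaker tripped until someone resets it is a deliberate choice in this sketch: an agent that hit its limit once is likely to hit it again, so the default is to stop and alert rather than resume silently when the window rolls over.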

Good architecture seems crucial for preventing agent-driven systems from descending into entropy. What are the key architectural boundaries and “safe failure” modes that must be designed from the start? Please describe a practical reference pattern that teams can implement to ensure stability.

The idea that architecture stifles innovation is a dangerous myth, especially with agentic AI, which is a natural accelerant of entropy. The most critical architectural decision is defining boundaries that agents simply cannot cross. This isn’t just about network access; it’s about defining strict contracts for the tools an agent is allowed to use. For example, an agent might be permitted to query a database but not alter its schema. Designing for “safe failure” means assuming the agent will eventually do something unexpected. Instead of letting it improvise its way into a catastrophe, you build graceful degradation paths.
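One way to picture a tool contract with a graceful degradation path is a dispatcher that only exposes the tools an agent was explicitly given and turns everything else into a structured refusal. The Python below is a minimal sketch under that assumption; `ToolContract`, `invoke`, and the example tool names are hypothetical.

```python
from typing import Callable, Dict


class ToolContract:
    """Hypothetical contract: the agent can only invoke tools registered here."""

    def __init__(self, tools: Dict[str, Callable[..., object]]):
        self._tools = dict(tools)

    def invoke(self, name: str, *args) -> dict:
        tool = self._tools.get(name)
        if tool is None:
            # Safe failure: refuse with a structured result instead of improvising.
            return {"ok": False, "error": f"tool '{name}' is outside this agent's contract"}
        try:
            return {"ok": True, "result": tool(*args)}
        except Exception as exc:
            # A failing tool degrades to an error record rather than crashing the agent loop.
            return {"ok": False, "error": f"{type(exc).__name__}: {exc}"}


def query_orders(customer_id: str) -> list:
    """Stand-in for a read-only database query."""
    return [{"customer": customer_id, "order": 1}]


contract = ToolContract({"query_orders": query_orders})
```

With this contract, `contract.invoke("query_orders", "c-42")` succeeds, while `contract.invoke("alter_schema", "orders")` returns a refusal because no schema-changing tool was ever registered.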

A practical reference pattern is the “sandboxed execution environment.” An agent is given a task and spun up in an isolated, ephemeral environment with its own resources, permissions, and toolset. The environment has hard-coded limits on its lifespan, budget, and network reach. The agent can operate with full autonomy within that sandbox, but it cannot affect the broader production system. If it fails or gets stuck in a loop, the entire sandbox is simply terminated and logged for analysis. This pattern allows for autonomous action without risking systemic stability.
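The lifespan limit in this pattern can be illustrated with a small Python sketch that runs a task in a child process, inside a throwaway working directory, and terminates it when its time is up. This is only a stand-in: a subprocess with a timeout is not real isolation, and an actual sandbox would add container or VM boundaries, network policy, and budget caps. The `run_in_sandbox` function and its return shape are assumptions for the example.

```python
import subprocess
import sys
import tempfile


def run_in_sandbox(code: str, max_seconds: float) -> dict:
    """Run Python code in a child process with a hard lifespan limit.

    Illustrative only: this enforces a time limit and an ephemeral working
    directory, not the full isolation a production sandbox needs.
    """
    with tempfile.TemporaryDirectory() as workdir:   # removed when the run ends
        try:
            proc = subprocess.run(
                [sys.executable, "-I", "-c", code],   # -I: isolated interpreter mode
                cwd=workdir,
                capture_output=True,
                text=True,
                timeout=max_seconds,                  # child is killed when exceeded
            )
        except subprocess.TimeoutExpired:
            return {"status": "terminated", "reason": "lifespan limit reached"}
    status = "completed" if proc.returncode == 0 else "failed"
    return {"status": status, "output": proc.stdout.strip()}
```

A task that finishes in time returns its output, while one stuck in a loop is killed at the limit and reported as `terminated`, which gives the caller a record to log for analysis without the runaway task touching anything outside its temporary directory.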

Many organizations will be tempted to grant an AI agent broad permissions to make it useful quickly. What are the immediate dangers of this approach, and how can leaders foster a culture of discipline that treats governance not as an obstacle but as an essential control framework?

The temptation is huge. You want to see the magic happen, so you give an agent broad permissions to be helpful, and you’re shocked when it becomes broadly dangerous. The immediate danger is that the agent, in its logical and relentless pursuit of a goal, will misinterpret context and take a destructive path. It might delete the wrong data, provision a thousand unnecessary servers, or leak sensitive information through a third-party tool—all while following its instructions perfectly. It doesn’t have the intuition or common sense a human operator does.

Fostering a culture of discipline requires leaders to reframe governance completely. It’s not a bureaucratic hurdle; it’s the flight control system for these autonomous systems. Leaders must insist on starting with zero trust and explicitly granting permissions on a task-by-task basis. They need to ask tough questions in every design review: How do we trace this action back to a specific identity? What is the blast radius if this agent is compromised? How do we manually intervene and stop it? By championing this mindset, leaders can shift the culture from one of reckless improvisation to one where discipline and governance are seen as the very things that make powerful, autonomous innovation possible and safe.

What is your forecast for cloud computing as agentic AI becomes more widespread?

My forecast is that agentic AI will force a great reckoning in the cloud industry. For the last decade, we’ve been able to get by with “good enough” practices in security, cost control, and architecture. Agentic AI makes “good enough” a recipe for failure. It will ruthlessly expose every weakness. The organizations that have already built a strong foundation of discipline—with mature identity management, real-time observability, and clear architectural patterns—will see agentic AI as a massive accelerant, unlocking incredible efficiency. Conversely, organizations that treat the cloud like an infinite sandbox will face a very public and expensive learning curve. In short, agentic AI will be the catalyst that finally makes cloud discipline non-negotiable.