The fastest-growing apps have set expectations that stretched old cloud assumptions until they snapped. Users now expect sub‑100ms responses, instant personalization, and no‑drama resilience whether they are in Seoul, São Paulo, or a stadium with flaky coverage during a live event. The center of gravity has shifted from warehoused data to the living edge where customers actually interact. That change recasts architecture decisions: instead of hauling every interaction to a distant region, platforms increasingly push compute and AI inference to wherever the demand appears, then synchronize state in the background. The aim is not decentralization as an ideology; it is proximity as a service promise. When an invoice check, an inventory call, or a fraud screen happens near the user, applications feel instantaneous, core systems stay calm, and failure domains shrink to the smallest reasonable unit.

Why proximity now outpulls data

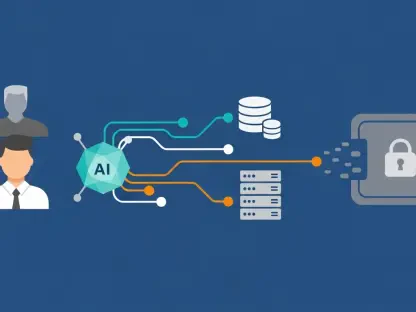

Data gravity guided the first cloud era by pulling applications toward large, centralized stores where analytics, storage, and governance lived. However, mobile behavior, omnichannel commerce, and AI‑driven experiences moved the bottleneck. Latency, not capacity, became the rate limiter for growth, and the difference between a 40ms and a 180ms round trip can now set the ceiling for conversion and retention. Availability zones helped in an age of monoliths, yet routing global demand through a handful of regions adds avoidable distance and concentrates risk. Many cloud‑native services already replicate data and tolerate component failure, making resilience a function of distribution and adaptiveness rather than clustering. In this environment, customer interactions anchor the design, and databases follow the user, not the other way around.

Edge‑native platforms embody that inversion by executing time‑sensitive functions close to users while relegating heavy lifts to central systems only when needed. A checkout flow can screen fraud at the edge using a slim model, confirm inventory against a regional cache, and commit orders asynchronously to the system of record. Real‑time inference for recommendations or pricing can run in containers deployed by geography, device, or demand, with models refreshed continuously as behavior shifts. This pattern reduces pressure on core databases, lowers tail latency, and isolates failures to local domains. Critically, it also reframes scale: more locations are not a problem to be tamed but a canvas to be orchestrated, where capacity grows with audience distribution rather than with a single region’s limits.
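The checkout flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a reference implementation: `EdgeNode`, `Order`, the toy `fraud_score` rule, and the `flush` callback are all hypothetical names invented here, and a real deployment would use an actual model, a shared regional cache, and a durable queue.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Order:
    order_id: str
    sku: str
    quantity: int
    amount: float


@dataclass
class EdgeNode:
    # Hypothetical regional inventory cache: SKU -> units believed available.
    inventory_cache: dict
    # Orders accepted locally and awaiting commit to the system of record.
    pending_commits: deque = field(default_factory=deque)

    def fraud_score(self, order: Order) -> float:
        # Stand-in for a slim edge model; a toy rule, not a real fraud model.
        return 0.9 if order.amount > 5000 else 0.1

    def checkout(self, order: Order, fraud_threshold: float = 0.5) -> str:
        # 1. Screen fraud locally, before touching any shared state.
        if self.fraud_score(order) >= fraud_threshold:
            return "rejected:fraud"
        # 2. Confirm inventory against the regional cache.
        available = self.inventory_cache.get(order.sku, 0)
        if available < order.quantity:
            return "rejected:out_of_stock"
        self.inventory_cache[order.sku] = available - order.quantity
        # 3. Answer the user now; the commit to the core happens later.
        self.pending_commits.append(order)
        return "accepted"

    def flush(self, commit) -> int:
        # Drain queued orders to the system of record via the given callback.
        committed = 0
        while self.pending_commits:
            commit(self.pending_commits.popleft())
            committed += 1
        return committed
```

The user-facing path touches only local state, so its latency does not depend on the distance to the core. The trade-off is that the cache can drift from the system of record, which is why a background reconciliation step is still needed.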

From static regions to adaptive orchestration

The hard problem no longer sits in the count of sites but in the dynamism of connections among them. Static routing and manual region selection routinely misplace work, such as an edge in Dallas serving a user in Bogotá while a closer site idles. Modern infrastructure should route workloads—not just traffic—using live signals: proximity, packet loss, time to first byte, device constraints, model warm state, and even content sensitivities. That requires a control plane that understands both application topology and network conditions in real time. Developers should express intent—latency budgets, consistency levels, model variants—and let the platform place containers, hydrate caches, and promote results to the core as conditions change. Regions and zones become implementation details, not choices exposed in dashboards.
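To make intent-driven placement concrete, here is a rough sketch of how a control plane might choose a site from live signals. Everything in it is an assumption made for illustration: the `Site` and `Intent` types, the tenfold packet-loss multiplier, and the default cold-start penalty are hypothetical, and a production scheduler would weigh many more signals, including the device constraints and content sensitivities mentioned above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Site:
    name: str
    rtt_ms: float        # measured round trip from the user to this site
    packet_loss: float   # fraction between 0.0 and 1.0
    model_warm: bool     # whether the required model is already loaded


@dataclass
class Intent:
    latency_budget_ms: float
    # Assumed extra cost of loading a model on a cold site (hypothetical value).
    cold_start_penalty_ms: float = 150.0


def effective_latency(site: Site, intent: Intent) -> float:
    # Inflate RTT by packet loss as a crude proxy for retransmissions.
    latency = site.rtt_ms * (1.0 + 10.0 * site.packet_loss)
    if not site.model_warm:
        latency += intent.cold_start_penalty_ms
    return latency


def place(sites: list, intent: Intent) -> Optional[Site]:
    # Keep only sites that fit the declared budget, then pick the fastest.
    scored = [(effective_latency(s, intent), s) for s in sites]
    within_budget = [(lat, s) for lat, s in scored if lat <= intent.latency_budget_ms]
    if not within_budget:
        return None  # no site satisfies the intent; the caller decides the fallback
    return min(within_budget, key=lambda pair: pair[0])[1]


sites = [
    Site("dallas", rtt_ms=95.0, packet_loss=0.0, model_warm=True),
    Site("bogota", rtt_ms=18.0, packet_loss=0.01, model_warm=False),
    Site("miami", rtt_ms=25.0, packet_loss=0.02, model_warm=True),
]
chosen = place(sites, Intent(latency_budget_ms=100.0))
```

With these made-up numbers the nearest site is not chosen, because its model is cold. That is the point of routing workloads rather than just traffic: proximity is one signal among several.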

The path forward is clear: teams prioritize customer gravity by operationalizing proximity, adaptability, and context‑aware execution. They instrument user‑centric metrics such as p95 end‑to‑end latency, inference cold‑start rates, and regional error budgets, then bind deployments to those objectives. They containerize models and functions to run at the edge, adopt write‑through patterns with conflict resolution, and use auto‑placement to move work toward better network health without manual intervention. Governance travels with the workload through policy as code, while CI/CD pipelines ship model and function updates alongside routing hints. Success hinges on treating distribution as a means to outcomes (personalization, responsiveness, and practical resilience), so that product experience improves even as core systems run quieter and incident blast radii stay small.
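Binding a deployment to a latency objective can be as simple as a gate on observed p95. The sketch below uses the nearest-rank percentile definition; the function names and the idea of gating a rollout on a single regional sample set are assumptions for illustration, not a description of any particular platform.

```python
import math


def p95(samples_ms: list) -> float:
    # Nearest-rank 95th percentile: the smallest value with at least
    # 95% of samples at or below it.
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    ordered = sorted(samples_ms)
    rank = math.ceil(95 * len(ordered) / 100)
    return ordered[rank - 1]


def meets_objective(samples_ms: list, budget_ms: float) -> bool:
    # Hypothetical rollout gate: proceed only if observed p95 fits the budget.
    return p95(samples_ms) <= budget_ms
```

A pipeline could call `meets_objective` per region before promoting a new model or function, so that a regression in one geography blocks the rollout there without holding back the others.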