As artificial intelligence and data-driven decision-making spread through the enterprise, demand for efficient vector search has grown sharply. The pressure is greatest in multi-tenant environments, where workloads with very different performance needs share the same infrastructure. Consider a platform serving thousands of tenants, from small startups to large corporations, all retrieving critical data through one vector search service. The challenge is to give every tenant predictable performance without overloading the system or burdening administrators with complex management tasks. Qdrant, an open-source vector search engine, addresses this challenge with Tiered Multitenancy, introduced in its latest release. The feature aims to change how organizations scale and tune multi-tenant systems by pairing the simplicity of a shared collection with dedicated resources for the tenants that need them.

Revolutionizing Scalability in Vector Search

Balancing Tenant Needs with Seamless Scaling

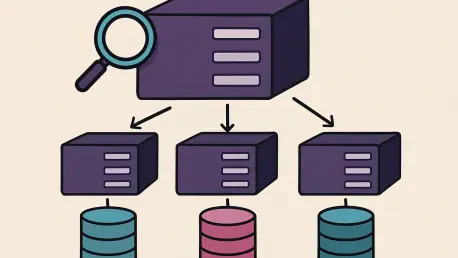

Tiered Multitenancy changes how organizations can manage many tenants within one vector search deployment. All tenants reside in a single shared collection, which keeps the architecture simple, and high-traffic or latency-sensitive tenants can be promoted to dedicated shards within that collection. Promotion requires no downtime, no reindexing, and no changes to client applications. Because the shared and dedicated paths live in the same collection, operational complexity stays low while critical workloads get predictable performance. This is particularly valuable for enterprises whose tenants have very different demands: it removes the need for the separate per-tenant or per-tier indexes that multi-tenant deployments often fall back on, leaving a single, unified search environment.
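
To make the routing idea concrete, here is a minimal Python sketch. It assumes, for illustration only, that tenants are identified by a payload field, that the shared fallback shard is called `shared`, and that a promoted tenant gets a shard named `dedicated-<tenant>`; `ShardRouting` and `client_search_request` are hypothetical names, not part of the Qdrant client API. What it shows is that the client's request carries only the tenant ID, so moving a tenant to a dedicated shard changes server-side routing and nothing on the client side.

```python
from dataclasses import dataclass, field


@dataclass
class ShardRouting:
    """Hypothetical server-side routing table for one shared collection."""

    fallback: str = "shared"
    # tenant ID -> name of its dedicated shard (only promoted tenants appear here)
    promoted: dict[str, str] = field(default_factory=dict)

    def shard_for(self, tenant: str) -> str:
        # Tenants that were never promoted stay in the shared fallback shard.
        return self.promoted.get(tenant, self.fallback)

    def promote(self, tenant: str) -> str:
        # Promotion only updates the routing table; the collection stays the same.
        shard = f"dedicated-{tenant}"
        self.promoted[tenant] = shard
        return shard


def client_search_request(tenant: str, vector: list[float]) -> dict:
    """Request built by the application; it never names a shard."""
    return {"vector": vector, "filter": {"tenant": tenant}}


routing = ShardRouting()
request = client_search_request("acme", [0.1, 0.2, 0.3])
print(routing.shard_for(request["filter"]["tenant"]))  # shared
routing.promote("acme")
# Same request object, new destination.
print(routing.shard_for(request["filter"]["tenant"]))  # dedicated-acme
```

Keeping the routing decision on the server is what lets the application code stay untouched when a tenant changes tier.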

Streamlining Operations Through Smart Resource Allocation

Beyond scalability, Tiered Multitenancy simplifies day-to-day operations. Maintaining a separate index for each tenant or tier quickly becomes an administrative burden; this feature consolidates everything into a single collection. Tenants start in a shared fallback shard, and when a tenant’s performance demands grow, a single API call triggers a filtered streaming transfer of that tenant’s data to a dedicated shard. Consistency is preserved throughout the transfer, and reads and writes are routed to the correct shard automatically, with no client-side logic required. Fewer moving parts mean less time spent on maintenance and fewer opportunities for error, giving teams that scale AI-driven applications a foundation that can absorb growth without sacrificing efficiency or user experience.
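
The promotion flow described above can be modeled as a copy-then-cut-over migration. The Python sketch below is a simplified, single-process model under stated assumptions: shards are in-memory dictionaries keyed by point ID, the shared shard is called `fallback`, and writes that arrive while a transfer is in progress are mirrored to the new shard. That mirroring is a generic dual-write pattern, not necessarily how Qdrant implements its filtered streaming transfer, and `TieredRouter` and its methods are hypothetical. Deletes during a transfer are not modeled. `promote` is written as a generator so that writes can be interleaved between batches.

```python
from typing import Iterator

Point = dict  # {"id": int, "tenant": str, ...}


class TieredRouter:
    """Toy model: promote one tenant out of a shared fallback shard.

    Hypothetical illustration of a copy-then-cut-over migration with
    mirrored writes; not Qdrant's internal transfer implementation.
    """

    def __init__(self) -> None:
        self.shards: dict[str, dict[int, Point]] = {"fallback": {}}
        self.dedicated: dict[str, str] = {}  # tenant -> shard after cut-over
        self.migrating: dict[str, str] = {}  # tenant -> shard being filled

    def write(self, point: Point) -> None:
        tenant = point["tenant"]
        if tenant in self.dedicated:
            self.shards[self.dedicated[tenant]][point["id"]] = point
            return
        self.shards["fallback"][point["id"]] = point
        # During a transfer, mirror the write so the new shard misses nothing.
        if tenant in self.migrating:
            self.shards[self.migrating[tenant]][point["id"]] = point

    def read(self, tenant: str) -> list[Point]:
        shard = self.shards[self.dedicated.get(tenant, "fallback")]
        # The tenant payload filter isolates this tenant inside a shared shard.
        matches = [p for p in shard.values() if p["tenant"] == tenant]
        return sorted(matches, key=lambda p: p["id"])

    def promote(self, tenant: str, batch_size: int = 2) -> Iterator[int]:
        """Copy the tenant's points to a new shard in batches, then cut over."""
        dest = f"dedicated-{tenant}"
        self.shards[dest] = {}
        self.migrating[tenant] = dest
        # Filtered snapshot: only this tenant's points at the start of the transfer.
        snapshot = [p for p in self.shards["fallback"].values() if p["tenant"] == tenant]
        for start in range(0, len(snapshot), batch_size):
            for p in snapshot[start:start + batch_size]:
                # setdefault keeps a newer version already stored by a mirrored write.
                self.shards[dest].setdefault(p["id"], p)
            yield start // batch_size  # pause point: other writes may interleave here
        # Cut-over: route the tenant to its own shard, then drop the shared copies.
        del self.migrating[tenant]
        self.dedicated[tenant] = dest
        fallback = self.shards["fallback"]
        for pid in [pid for pid, p in fallback.items() if p["tenant"] == tenant]:
            del fallback[pid]


router = TieredRouter()
for i in (1, 2, 3):
    router.write({"id": i, "tenant": "acme", "text": f"doc {i}"})
router.write({"id": 10, "tenant": "small-co", "text": "doc 10"})

transfer = router.promote("acme", batch_size=2)
next(transfer)  # first batch copied; reads still go to the fallback shard
router.write({"id": 4, "tenant": "acme", "text": "doc 4"})  # arrives mid-transfer
for _ in transfer:  # finish copying and cut over
    pass

print([p["id"] for p in router.read("acme")])  # [1, 2, 3, 4]
print(list(router.shards["fallback"]))  # [10]
```

In this model, reads are served from the shared shard until the cut-over, so the tenant sees a complete view at every step, and callers use `write` and `read` the same way before, during, and after promotion.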

Enhancing Performance for Hybrid Workloads

Supporting Diverse Applications with Flexible Isolation

One of the main benefits of the feature is its support for hybrid workloads: applications that need both tenant-specific isolation and global search across tenants. In multi-tenant systems such as Retrieval-Augmented Generation (RAG) platforms or enterprise copilots, performance profiles can vary drastically between clients. Tiered Multitenancy lets teams isolate large clients, or clients with strict latency requirements, while keeping a global index for cross-tenant retrieval. The architecture combines payload-based filtering with custom sharding, so resources go where they are needed without disrupting the rest of the system. Balancing per-tenant performance against system-wide accessibility is a recurring challenge for developers and architects of modern AI applications, which makes this flexibility especially useful.
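
The difference between a tenant-scoped query and a global query can be sketched with brute-force cosine similarity in plain Python. This is an illustrative model, not Qdrant’s search path: the shard layout, the `routing` dictionary, and the function names are hypothetical, and all vectors are assumed to be non-zero. A tenant query touches only the shard that holds that tenant and applies a payload filter; a global query fans out to every shard and merges the partial top-k results.

```python
import heapq
import math
from typing import Optional

# (point ID, tenant payload, vector)
Point = tuple[int, str, list[float]]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


def search_shard(points: list[Point], query: list[float], k: int,
                 tenant: Optional[str] = None) -> list[tuple[float, int]]:
    """Top-k (score, id) pairs in one shard, optionally filtered by tenant payload."""
    hits = ((cosine(vec, query), pid) for pid, owner, vec in points
            if tenant is None or owner == tenant)
    return heapq.nlargest(k, hits)


def tenant_search(shards: dict[str, list[Point]], routing: dict[str, str],
                  tenant: str, query: list[float], k: int) -> list[tuple[float, int]]:
    # Isolation: only the shard holding this tenant is searched, with a payload filter.
    shard = routing.get(tenant, "shared")
    return search_shard(shards[shard], query, k, tenant)


def global_search(shards: dict[str, list[Point]], query: list[float],
                  k: int) -> list[tuple[float, int]]:
    # Cross-tenant retrieval: search every shard, then merge the partial top-k lists.
    partial = [hit for points in shards.values() for hit in search_shard(points, query, k)]
    return heapq.nlargest(k, partial)


shards = {
    "shared": [(1, "small-co", [1.0, 0.0]), (2, "tiny-co", [0.0, 1.0])],
    "dedicated-acme": [(3, "acme", [0.9, 0.1]), (4, "acme", [0.1, 0.9])],
}
routing = {"acme": "dedicated-acme"}
query = [1.0, 0.0]

print([pid for _, pid in tenant_search(shards, routing, "acme", query, k=1)])  # [3]
print([pid for _, pid in tenant_search(shards, routing, "tiny-co", query, k=5)])  # [2]
print([pid for _, pid in global_search(shards, query, k=2)])  # [1, 3]
```

Merging per-shard top-k lists is enough for an exact global top-k here, because every shard returns its own best k candidates.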

Driving Efficiency in AI-Driven Environments

The feature also brings efficiency to AI-native environments, where diverse customer demands must be met without inflating operational costs. Consolidating a multi-index architecture into a single collection reduces the complexity of managing resources across varied workloads. Teams can dedicate compute to high-priority tenants while keeping smaller or less demanding tenants in shared shards, so critical applications stay fast without over-provisioning resources for everyone else. And because global searches across tenants remain possible without giving up isolation, organizations can build more cohesive and responsive systems, in line with the broader shift toward adaptable vector search solutions.
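
Deciding which tenants deserve a dedicated shard is left to the operator. One possible policy, shown here as a hypothetical Python sketch, considers latency-sensitive tenants first, then the busiest tenants above a queries-per-second threshold, and caps the number of dedicated shards at a fixed budget. The `TenantStats` fields, the threshold, and the budget are illustrative assumptions, not Qdrant settings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TenantStats:
    name: str
    queries_per_sec: float
    latency_sensitive: bool = False


def plan_tiers(tenants: list[TenantStats], max_dedicated: int,
               qps_threshold: float) -> tuple[list[str], list[str]]:
    """Split tenants into (dedicated, shared) name lists under a shard budget."""
    # Only latency-sensitive or sufficiently busy tenants qualify for promotion.
    candidates = [t for t in tenants
                  if t.latency_sensitive or t.queries_per_sec >= qps_threshold]
    # Latency-sensitive tenants first, then by descending traffic.
    candidates.sort(key=lambda t: (not t.latency_sensitive, -t.queries_per_sec))
    dedicated = {t.name for t in candidates[:max_dedicated]}
    shared = sorted(t.name for t in tenants if t.name not in dedicated)
    return sorted(dedicated), shared


tenants = [
    TenantStats("acme", 850.0),
    TenantStats("globex", 40.0, latency_sensitive=True),
    TenantStats("initech", 300.0),
    TenantStats("small-co", 3.0),
]
print(plan_tiers(tenants, max_dedicated=2, qps_threshold=200.0))
# (['acme', 'globex'], ['initech', 'small-co'])
```

In practice the inputs would come from monitoring data; the fixed numbers here only keep the example readable.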

Reflecting on a Milestone in Vector Search Innovation

Qdrant’s Tiered Multitenancy marks a significant step in addressing the scalability and performance challenges of multi-tenant vector search. It tackles the pain points of tenant isolation and workload management while keeping operations simple in a field often weighed down by complexity. For organizations building AI-driven applications, seamless tenant promotion and hybrid workload support provide a practical framework to build on. The next step is to use such capabilities to refine resource allocation strategies further and to integrate them with emerging AI technologies. As the industry evolves, solutions that prioritize both efficiency and adaptability will be key to keeping vector search systems robust and responsive to changing enterprise needs.