As businesses try to get more value from their data, efficient and well-integrated AI and machine learning tooling has become critical. AWS has launched new capabilities for AI data pipelines built on SageMaker and S3 Tables, now generally available as part of SageMaker Unified Studio. Unified Studio is a single development environment that brings together multiple AWS data analytics and AI/ML services, including the Lakehouse platform and SageMaker Catalog. The goal is to support end-to-end work such as SQL analytics, data preparation, model building, and generative AI application development, shortening the path from raw data to actionable insights.

Advanced Model Integration

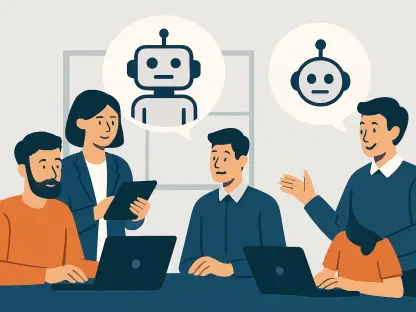

The recent update to SageMaker adds newer models such as Claude 3.7 Sonnet and DeepSeek-R1, along with latency-optimized inference for certain models from Anthropic, Meta, and Amazon. Applications that need real-time or near-real-time responses stand to benefit most from the faster processing. AWS has also made it simpler to prototype applications and share them with team members through its Bedrock service, which smooths collaboration on AI development. With these models integrated, users can work with recent advances in AI research without taking on the complexity usually involved in deploying and managing such models themselves.
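As a rough illustration of what requesting latency-optimized inference can look like from code, the sketch below builds a request for the Bedrock Runtime `converse` operation and sets its `performanceConfig` latency option. The helper names `build_converse_request` and `ask` are hypothetical, the model ID in the usage note is a placeholder, and latency-optimized inference is only offered for certain models and Regions, so treat this as a sketch under those assumptions rather than a recipe.

```python
from typing import Any, Dict


def build_converse_request(
    model_id: str, prompt: str, optimize_latency: bool = True
) -> Dict[str, Any]:
    """Build keyword arguments for a Bedrock Runtime `converse` call.

    `model_id` is supplied by the caller; nothing here checks whether that
    model actually supports latency-optimized inference.
    """
    request: Dict[str, Any] = {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": 256},
    }
    if optimize_latency:
        # Ask for the latency-optimized tier instead of the standard one.
        request["performanceConfig"] = {"latency": "optimized"}
    return request


def ask(client: Any, model_id: str, prompt: str) -> str:
    """Send one prompt through `client.converse` and return the reply text.

    `client` is expected to behave like boto3.client("bedrock-runtime").
    """
    response = client.converse(**build_converse_request(model_id, prompt))
    return response["output"]["message"]["content"][0]["text"]
```

With AWS credentials configured, `ask(boto3.client("bedrock-runtime"), "<model-or-inference-profile-id>", "Summarize this ticket")` would issue the call; the client is passed in so the request-building logic can be exercised without network access.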

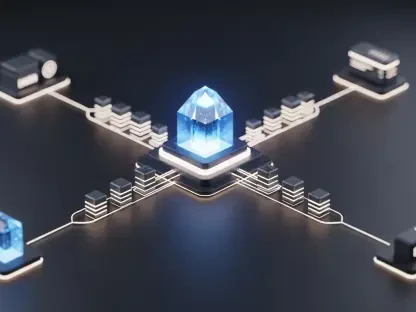

Another notable feature is improved access to S3 Tables within the SageMaker Lakehouse environment. Users can run SQL queries and Spark jobs, build models, and develop generative AI applications that combine data from S3 Tables with other sources in the Lakehouse, including Redshift and federated data sources. Because the data can be used where it already lives rather than being copied between storage locations, this reduces both duplication and the associated costs while keeping governance and compliance controls in place. AI projects are therefore less likely to stall on the usual data movement and management bottlenecks.
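To make the idea of querying data in place more concrete, here is a minimal sketch that composes a SQL join between a table in an S3 Tables catalog and a table in another catalog, then submits it through Amazon Athena. Every catalog, namespace, table, column, and workgroup name is hypothetical, as are the helpers `build_join_query` and `submit_query`; how a Redshift or federated source is actually named depends on how it is registered in the Lakehouse.

```python
from typing import Any


def build_join_query(
    s3_catalog: str,
    namespace: str,
    table: str,
    other_catalog: str,
    other_database: str,
    other_table: str,
    key: str,
) -> str:
    """Compose a SQL join across two catalogs without copying any data.

    Identifiers are double-quoted because catalog names may contain
    characters such as "/". Inputs are assumed to be trusted; no escaping
    is performed.
    """
    return (
        f'SELECT o."{key}", COUNT(*) AS row_count '
        f'FROM "{s3_catalog}"."{namespace}"."{table}" AS s '
        f'JOIN "{other_catalog}"."{other_database}"."{other_table}" AS o '
        f'ON s."{key}" = o."{key}" '
        f'GROUP BY o."{key}"'
    )


def submit_query(athena_client: Any, sql: str, workgroup: str) -> str:
    """Start the query and return its execution ID.

    `athena_client` is expected to behave like boto3.client("athena"), and
    the workgroup is assumed to already define a query result location.
    """
    response = athena_client.start_query_execution(
        QueryString=sql, WorkGroup=workgroup
    )
    return response["QueryExecutionId"]
```

For example, `build_join_query("s3tablescatalog/example-bucket", "sales", "orders", "example_redshift_catalog", "public", "customers", "customer_id")` yields a single statement that reads from both sources; the join runs in the query engine rather than after an export step.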

Enhancing Data Governance and Integration

A clear focus of AWS’s recent upgrades is breaking down data silos, reflecting a broader trend in which businesses recognize that they must integrate their data to develop AI faster. Disciplined data management, and the assurance that AI projects draw on trusted, responsibly governed data sources, are central to that effort. With data governance and compliance now a priority for most organizations, AWS’s enhancements are meant to uphold these standards while still delivering high performance. The integration of SageMaker Catalog within Unified Studio is one example: it offers cataloging, discovery, and governance features so that data used in AI/ML workflows is documented and controlled. This raises confidence in the reliability and accuracy of AI models and helps ensure that data lineage and provenance are tracked.

The new capabilities for coordinating and using data across the Lakehouse platform point in the same direction. Organizations can now bring together disparate data sets from various sources without compromising on governance or regulatory compliance. This breadth matters for building accurate and reliable AI models, because it allows all relevant data to be considered and appropriately managed. Taken together, the improvements illustrate a shift toward more disciplined and integrated data practices in AI development, and they help businesses build more robust and trustworthy AI solutions.

Foundations for Future AI Innovations

Taken together, these changes lay the groundwork for what comes next. On the model side, the latest SageMaker update brings Claude 3.7 Sonnet and DeepSeek-R1, plus latency-optimized inference for certain models from Anthropic, Meta, and Amazon, which serves applications that need real-time or near-real-time responses. Prototyping and app sharing among team members through Bedrock make AI development more collaborative, and users can adopt new models without the usual deployment and management overhead.

On the data side, access to S3 Tables within the SageMaker Lakehouse environment lets users run SQL queries and Spark jobs, build models, and develop generative AI applications that combine S3 Tables data with other Lakehouse sources, including Redshift and federated data sources. Not having to move data between storage locations reduces duplication and the associated costs while keeping strict governance and compliance standards in place.