In an era where machine learning models drive critical decisions in industries ranging from healthcare to automotive design, understanding how these models arrive at their predictions has become a pressing challenge for data scientists and stakeholders alike. Complex models are often treated as black boxes. They obscure the reasoning behind their outputs, which makes trust and accountability difficult to establish. SHAP-IQ visualizations, built on the shapiq Python package, address this by breaking each prediction into interpretable components. They reveal both individual feature contributions and interactions between variables, which gives a window into how an algorithm reaches a decision. This article works through these visualizations step by step on a practical dataset, from environment setup to the final plots. Throughout, the focus is on how they make model behavior more transparent in real-world scenarios.

1. Unlocking Model Insights with SHAP-IQ

SHAP-IQ visualizations bridge the gap between the opaque internals of a machine learning model and the human-readable explanations its users need. They are rooted in Shapley values, a game-theoretic method for fairly dividing a joint payout among cooperating players. Here, the players are features and the payout is the model’s prediction relative to a baseline. What sets SHAP-IQ apart is that it goes beyond individual contributions and quantifies interactions between features, showing how variables jointly influence a prediction. In a model predicting fuel efficiency, for example, SHAP-IQ can show the effect of a car’s weight on its own. It can also show how weight and horsepower together shape the outcome. SHAP-IQ presents these individual and joint effects visually, which turns abstract numbers into insight that supports debugging, model refinement, and better decisions.

SHAP-IQ also adapts to different model types and tasks. The same framework explains regression and classification models, and shapiq provides a dedicated explainer for tree ensembles such as random forests. This article uses the MPG (miles per gallon) dataset, which describes cars by attributes such as horsepower and weight, and trains a model to predict fuel efficiency. On this data, SHAP-IQ can highlight which factors most strongly drive a specific prediction. It can also show whether their combined effects amplify or dampen one another. Each step below is meant to be reproduced and adapted to other projects. Used this way, SHAP-IQ helps practitioners validate model behavior, spot potential biases, and communicate findings to non-technical stakeholders who rely on these insights for strategic planning.

2. Preparing the Technical Environment

Setting up the tools is the first step. Install the required libraries with `!pip install shapiq overrides scikit-learn pandas numpy seaborn`. The leading `!` is notebook syntax for Jupyter or Colab, so drop it when running pip from a terminal. Next, import the components used throughout:

- `RandomForestRegressor` for modeling
- `mean_squared_error` and `r2_score` for evaluation
- `train_test_split` for partitioning the data
- `LabelEncoder` for encoding categories
- seaborn (as `sns`) for the data
- the core `shapiq` package

Printing the installed version with `print(f"shapiq version: {shapiq.__version__}")` confirms that the package imports correctly and tells you which version of its documentation to follow. A stable environment keeps the focus on interpreting results rather than troubleshooting.
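
You can confirm the install worked using only the standard library’s `importlib.metadata`, as sketched below. The helper names `installed_versions` and `report` are hypothetical and are not part of any package in the install line:

```python
from importlib import metadata

# Distribution names taken from the pip install line above.
REQUIRED = ["shapiq", "overrides", "scikit-learn", "pandas", "numpy", "seaborn"]


def installed_versions(packages):
    """Map each distribution name to its installed version, or None if absent."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def report(packages=REQUIRED):
    """Print one line per package and return the names that are missing."""
    versions = installed_versions(packages)
    for name, version in versions.items():
        print(f"{name:>12}: {version or 'NOT INSTALLED'}")
    return [name for name, version in versions.items() if version is None]
```

Calling `report()` in the notebook lists every package with its version. An empty return value means nothing is missing. Reading distribution metadata avoids importing the heavier packages just to check `__version__`.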

Each library plays a distinct role. The shapiq package generates the Shapley-based explanations and their plots, while scikit-learn supplies the model, the data split, and the evaluation metrics. The pandas and numpy libraries handle data manipulation so the data reaches the model in the right shape. Seaborn can style plots, but here its main job is loading the sample dataset. Once every component is installed and importable, the workflow can move on to loading and processing the data, where the work of interpretation begins.

3. Accessing the MPG Dataset

The next phase is loading a dataset to explain. This tutorial uses the MPG (miles per gallon) dataset, which ships with seaborn’s sample data. It describes 398 car models by the following attributes, with “mpg” as the fuel-efficiency target:

- cylinders
- displacement
- horsepower
- weight
- acceleration
- model year
- origin
- name

Load it with `df = sns.load_dataset("mpg")` and inspect its structure to get familiar with the contents. Its mix of numerical and categorical variables makes it a realistic but manageable testbed. Because it is well documented and easy to obtain, the focus stays on model behavior rather than on data acquisition.
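
The following sketch is a minimal inspection helper that assumes only pandas, since seaborn returns a pandas DataFrame. `overview` is a hypothetical name. On the real data, you would call it as `overview(sns.load_dataset("mpg"))`:

```python
import pandas as pd


def overview(df: pd.DataFrame) -> pd.DataFrame:
    """One row per column: dtype, missing values, distinct values, numeric range."""
    numeric = df.select_dtypes("number")
    summary = pd.DataFrame(
        {
            "dtype": df.dtypes.astype(str),
            "n_missing": df.isna().sum(),
            "n_unique": df.nunique(),
            # min/max stay NaN for non-numeric columns such as "origin" and "name"
            "min": numeric.min(),
            "max": numeric.max(),
        }
    )
    # Restore the original column order (aligning differently indexed
    # Series can reorder the rows).
    return summary.reindex(df.columns)
```

The `n_missing` column shows gaps such as missing horsepower values before they cause errors during training.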

Knowing what the dataset contains is essential for reading the SHAP-IQ plots correctly. Many of its variables relate intuitively to fuel efficiency, which makes it easier to judge whether an explanation is plausible. Heavier vehicles, for example, would be expected to get fewer miles per gallon. A car’s origin may add variability that weight alone does not explain. Loading the data through seaborn avoids manual file handling. Note that seaborn downloads the file the first time it is used, so an internet connection is needed once. A quick inspection then reveals value ranges and data quality issues to fix before modeling. In this dataset, for example, six rows have no horsepower value.

4. Cleaning and Encoding Data

Data preparation comes before any modeling or visualization. First, remove rows with missing values using `df = df.dropna()`, which avoids errors during training. In the MPG data, this drops the six cars without a horsepower value. Next, convert the categorical “origin” column to numbers with scikit-learn’s `LabelEncoder()`, which assigns each category an integer. The classes are sorted alphabetically, so europe, japan, and usa become 0, 1, and 2. Print the mapping from original labels to encoded values (for example, with a print statement). This makes it clear how each category is represented when it later appears in the plots. After these steps, the dataset is ready for splitting and modeling.
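
The cleaning and encoding step can be sketched as a function that returns a cleaned copy rather than modifying `df` in place. `clean_and_encode` is a hypothetical helper, and it assumes pandas and scikit-learn:

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder


def clean_and_encode(df: pd.DataFrame, column: str = "origin"):
    """Drop incomplete rows, label-encode one categorical column, and
    return the cleaned copy together with the {label: code} mapping."""
    cleaned = df.dropna().copy()
    encoder = LabelEncoder()
    cleaned[column] = encoder.fit_transform(cleaned[column])
    # encoder.classes_ is sorted; position i holds the label encoded as i
    mapping = {label: code for code, label in enumerate(encoder.classes_)}
    print(f"{column} encoding: {mapping}")
    return cleaned, mapping
```

To use it, call `df, origin_mapping = clean_and_encode(df)`. Because the encoder sorts its classes, the MPG origins should map to europe → 0, japan → 1, usa → 2.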

These preprocessing choices also shape the SHAP-IQ explanations. Dropping rows is simple, but it shrinks the dataset and can hurt generalization if the removed rows carry distinct patterns. Label encoding gives “origin” an artificial order. A tree can split on a threshold such as origin ≤ 1.5, which groups europe and japan against usa. As a result, the feature’s attribution depends partly on the arbitrary integer assignment. Keeping the encoding mapping at hand makes it possible to trace which category a given attribution refers to. Careful preparation ensures that the plots reflect genuine model behavior rather than artifacts of poor data quality.
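
If the artificial ordering is a concern, one-hot encoding is a common alternative. This is a swapped-in technique, not the tutorial’s approach. It also has an interpretation cost: origin becomes three indicator features, so SHAP-IQ would attribute to each indicator separately. The following minimal sketch uses pandas, and `one_hot` is a hypothetical helper:

```python
import pandas as pd


def one_hot(df: pd.DataFrame, column: str = "origin") -> pd.DataFrame:
    """Replace a categorical column with 0/1 indicator columns, one per category."""
    # Indicator columns are named "<column>_<category>" and imply no ordering.
    return pd.get_dummies(df, columns=[column], dtype=int)
```

For tree models, the label-encoded version is often acceptable, because at most two threshold splits can isolate any single code. The choice mainly affects how readable the attributions are.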

5. Splitting Data for Training and Testing

With the data cleaned and encoded, partition it into training and test subsets. Define the features (X) by dropping the target “mpg” and the “name” column, which is a free-text identifier with no predictive use. Set the target (y) to the “mpg” values. Store the feature names for the explanations and convert X and y to NumPy arrays. Then call `train_test_split` from scikit-learn with `test_size=0.2` and `random_state=42` to produce `x_train`, `x_test`, `y_train`, and `y_test`. The model learns from the larger portion. The held-out test set supports an unbiased evaluation and supplies the instances that SHAP-IQ explains later.
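
The split can be written as a function that takes the cleaned frame from the previous step. `make_split` is a hypothetical helper. Converting to NumPy arrays means that later lookups such as `x_test[7]` select rows by position rather than by the DataFrame’s index label:

```python
import pandas as pd
from sklearn.model_selection import train_test_split


def make_split(df: pd.DataFrame, target: str = "mpg", drop=("name",),
               test_size: float = 0.2, random_state: int = 42):
    """Separate features and target, keep the feature names, and split into arrays."""
    X = df.drop(columns=[target, *drop])
    y = df[target]
    feature_names = list(X.columns)
    # .to_numpy() drops the pandas index, so rows are addressed by position later
    x_train, x_test, y_train, y_test = train_test_split(
        X.to_numpy(), y.to_numpy(), test_size=test_size, random_state=random_state
    )
    return feature_names, x_train, x_test, y_train, y_test
```

To use it, call `feature_names, x_train, x_test, y_train, y_test = make_split(df)`.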

The split matters for explanation as well as for evaluation. A 20% test size (79 of the 392 remaining cars) leaves enough data for a meaningful evaluation without starving the training set of the patterns in the MPG data. The fixed random state makes the split identical on every run, so results can be compared reliably. The model never sees the test set during training, so explaining its instances mirrors how the model would be used on new cars.

6. Building and Training the Model

Training the model produces the predictions that SHAP-IQ will explain. Initialize a `RandomForestRegressor` with these parameters:

- `max_depth=10` to limit tree complexity
- `n_estimators=10` for a small ensemble
- `random_state=42` for reproducibility

Then fit it with `model.fit(x_train, y_train)` so it learns the relationship between car features and fuel efficiency. A random forest captures both linear and non-linear patterns and models feature interactions naturally, which suits SHAP-IQ’s focus on individual and joint effects. As a tree ensemble, it can also be explained with shapiq’s `TreeExplainer`.
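
The following sketch wraps the configuration from this step in a small helper. `train_forest` is a hypothetical name, and the code assumes scikit-learn:

```python
from sklearn.ensemble import RandomForestRegressor


def train_forest(x_train, y_train, random_state: int = 42) -> RandomForestRegressor:
    """Fit the random forest configuration described above."""
    model = RandomForestRegressor(
        max_depth=10,      # cap tree depth to limit complexity
        n_estimators=10,   # small ensemble keeps training and explanation fast
        random_state=random_state,
    )
    model.fit(x_train, y_train)
    return model
```

To use it, call `model = train_forest(x_train, y_train)`. The fitted trees are available in `model.estimators_`, and `tree.get_depth()` confirms that none exceeds the depth cap.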

The parameters trade accuracy against the cost of training and explanation. Capping depth at 10 keeps individual trees from memorizing the training data. The small number of trees mainly keeps training and the SHAP-IQ computations fast. In a random forest, adding trees typically reduces variance rather than causing overfitting. Even with these limits, the model trained on the MPG data can capture effects such as weight and horsepower jointly influencing fuel efficiency. Once trained, the model becomes the subject of analysis. SHAP-IQ will dissect its decision-making for specific predictions, which provides a basis for trust and further optimization.

7. Evaluating Model Accuracy

Before explaining the model, check that its predictions are reliable. Compute the Mean Squared Error (MSE) with `mean_squared_error(y_test, model.predict(x_test))` and the R² score with `r2_score(y_test, model.predict(x_test))`. You can also predict once and reuse the result. Print both metrics to two decimal places. A lower MSE means smaller prediction errors, measured in squared miles per gallon. R² is the fraction of the variance in mpg that the model explains. A value of 1 is a perfect fit, and 0 is no better than always predicting the mean. This check confirms that the SHAP-IQ plots will describe a model worth interpreting rather than one with poor predictive power.
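
This evaluation helper calls `predict` once and reuses the result for both metrics. `evaluate` is a hypothetical name, and the metric functions are scikit-learn’s:

```python
from sklearn.metrics import mean_squared_error, r2_score


def evaluate(model, x_test, y_test):
    """Print and return MSE and R² on the test set."""
    y_pred = model.predict(x_test)  # predict once, reuse for both metrics
    mse = mean_squared_error(y_test, y_pred)
    r2 = r2_score(y_test, y_pred)
    print(f"Mean Squared Error: {mse:.2f}")
    print(f"R2 Score: {r2:.2f}")
    return mse, r2
```

To use it, call `mse, r2 = evaluate(model, x_test, y_test)`.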

The metrics also bear on how far the explanations can be trusted. SHAP-IQ describes what the model does, not what is true about cars. When MSE is low and R² is high, the model’s predictions track the actual outcomes. Its attributions are then more likely to reflect real relationships between car features and fuel efficiency. When performance is poor, the explanations faithfully describe a flawed model and may point to noise rather than meaningful patterns. Evaluation therefore works as a filter. It decides whether the visualizations that follow will give stakeholders trustworthy, actionable information about model behavior.

8. Focusing on a Single Prediction

To see how SHAP-IQ works at a granular level, select one instance from the test set. For instance ID 7:

- Take the features with `x_explain = x_test[7]`.
- Take the true value with `y_true = y_test[7]`.
- Compute the prediction with `model.predict(x_explain.reshape(1, -1))[0]`. The reshape turns the single row into the two-dimensional input that scikit-learn expects.

Print the true and predicted values, then loop over the feature names and print each feature’s value for this car. This local view shows how the model arrived at one particular prediction and which attributes, such as weight or horsepower, drove it. That matters most in high-stakes settings, where individual predictions must be justified.
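
The single-instance step can be written as a function. `describe_instance` is a hypothetical helper that assumes a fitted scikit-learn-style model and the arrays from the split:

```python
import numpy as np


def describe_instance(model, x_test, y_test, feature_names, instance_id=7):
    """Print the true value, the prediction, and the feature values for one test row."""
    x_explain = np.asarray(x_test[instance_id])
    y_true = y_test[instance_id]
    # predict expects a 2-D array, so turn the single row into shape (1, n_features)
    y_pred = model.predict(x_explain.reshape(1, -1))[0]
    print(f"Instance {instance_id}: true = {y_true:.2f}, predicted = {y_pred:.2f}")
    for name, value in zip(feature_names, x_explain):
        print(f"  {name}: {value}")
    return x_explain, y_true, y_pred
```

To use it, call `x_explain, y_true, y_pred = describe_instance(model, x_test, y_test, feature_names)`.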

Isolating a single prediction highlights the local nature of these explanations, as opposed to global overviews. Examining instance ID 7 makes it possible to trace how each feature value influences the predicted fuel efficiency, giving a small-scale view of the model’s decision-making. This is especially useful for debugging or validating predictions in real-world applications. There, knowing why an output was produced can guide corrective action or build user trust. The printed feature values link the raw data to the model output. They also set the stage for the SHAP-IQ plots that break these influences down visually.

9. Exploring Interaction Levels in Explanations

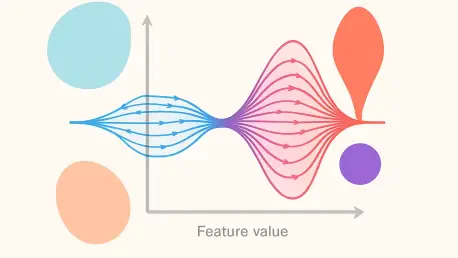

Generating explanations at several interaction orders is a key strength of SHAP-IQ. Compute Shapley-based explanations at three levels:

- Order 1 for individual feature effects (standard Shapley values)
- Order 2 for pairwise interactions
- Order N for interactions up to all N features (seven for the MPG features used here)

For each order, create a `shapiq.TreeExplainer` with the matching `max_order` and an interaction `index`. Then explain the selected instance and store the result in a dictionary keyed by order. Applied to the test instance, this reveals which features matter most individually and how they reinforce or counteract one another.
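
The following sketch shows the loop over orders, with the explainer class passed in as an argument. With shapiq installed, the call would be `explanations = explain_orders(shapiq.TreeExplainer, model, x_explain, len(feature_names))`. Some details reflect our reading of the shapiq documentation and should be checked against the installed version: the `max_order` and `index` keywords, the `explain` method, and the index strings `"SV"` and `"k-SII"`. `explain_orders` itself is hypothetical:

```python
def explain_orders(explainer_cls, model, x_explain, n_features):
    """Build one explanation per interaction order: 1, 2 and n_features ("Order N").

    explainer_cls is expected to be shapiq.TreeExplainer (an assumption); it is
    passed in so this loop does not itself depend on shapiq being importable.
    """
    explanations = {}
    # A set avoids computing the same order twice when n_features <= 2.
    for order in sorted({1, 2, n_features}):
        # Order 1 -> standard Shapley values; higher orders -> k-SII interactions
        index = "SV" if order == 1 else "k-SII"
        explainer = explainer_cls(model=model, max_order=order, index=index)
        explanations[order] = explainer.explain(x_explain)
    return explanations
```

With the seven MPG features, the dictionary is keyed by 1, 2, and 7.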

The three orders give a layered view of the model:

- Order 1 isolates standalone effects, such as how a car’s weight directly affects fuel efficiency.
- Order 2 adds pairwise terms, such as the joint effect of weight and horsepower. These can be positive, when the two features reinforce each other, or negative, when they partly cancel.
- Order N allows terms for any subset of features, giving the most complete decomposition.

For efficient indices such as Shapley values and k-SII, the attributions plus the baseline add up to the model’s prediction at every order. The orders therefore differ only in how finely the prediction is divided. These explanations are the raw material for the visualizations that follow.
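
To make the orders concrete without shapiq, the sketch below brute-forces two of them on a toy three-feature function. It computes exact order-1 Shapley values and the Möbius transform, which assigns an effect to every feature subset. As we understand the shapiq formulation, k-SII at the full order N coincides with the Möbius transform, so this is the kind of decomposition the Order N explanation reports. The sketch makes two simplifications of its own:

- Absent features are set to a single baseline point. shapiq’s `TreeExplainer` works from the trees’ structure instead.
- Enumeration is exponential in the number of features. That is fine for three features and impractical for large ones.

All function names are hypothetical:

```python
from itertools import combinations
from math import factorial

import numpy as np


def make_value_function(f, x, baseline):
    """v(S): model output when features in S come from x and the rest from baseline.

    The single-baseline replacement is a simplification for illustration only.
    """
    def v(S):
        z = np.array(baseline, dtype=float)
        for i in S:
            z[i] = x[i]
        return float(f(z))
    return v


def subsets(items):
    """All subsets of items as tuples, from the empty set upward."""
    for size in range(len(items) + 1):
        yield from combinations(items, size)


def shapley_values(v, n):
    """Order 1: exact Shapley values by enumerating every coalition."""
    phi = np.zeros(n)
    for i in range(n):
        others = tuple(j for j in range(n) if j != i)
        for S in subsets(others):
            weight = factorial(len(S)) * factorial(n - len(S) - 1) / factorial(n)
            phi[i] += weight * (v(S + (i,)) - v(S))
    return phi


def moebius(v, n):
    """Full order: Möbius coefficient m(S) for every non-empty subset S."""
    return {
        S: sum((-1) ** (len(S) - len(T)) * v(T) for T in subsets(S))
        for S in subsets(tuple(range(n)))
        if S
    }
```

Take `f(z) = 2*z[0] + 3*z[1] + 4*z[0]*z[1]` with `x = [1, 1, 1]` and an all-zero baseline. The Shapley values are 4, 5, and 0, because each of the first two features absorbs half of the interaction term. The Möbius coefficients keep that term separate: 2 and 3 for the single features and 4 for the pair {0, 1}.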

10. Visualizing Insights through Multiple Methods

SHAP-IQ offers several visualizations, each giving a different perspective on contributions and interactions. The Force Plot shows how features push a prediction above or below a baseline, which is the model’s average prediction. Red bars mark positive contributions, blue bars mark negative ones, and bar length indicates magnitude. For the MPG instance, for example, features such as weight and horsepower might raise a base value of 23.5 while model year pulls it down. The Waterfall Plot shows the same information as a sequence of steps from the baseline to the prediction. It groups minor effects into an “other” category for clarity. Both plots can be generated for each interaction order, for instance with `sv.plot_force(feature_names=feature_names, show=True)`, where `sv` holds the order-1 explanation.
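
The arithmetic behind a waterfall plot can be sketched in plain Python. This illustrates the idea and is not shapiq’s plotting code. `waterfall_steps` and its `max_display` cut-off are hypothetical:

```python
def waterfall_steps(base_value, contributions, max_display=3):
    """Rank contributions by magnitude, lump the rest into "other", and return
    (label, contribution, running_total) rows in the order a waterfall draws them."""
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    shown = ranked[:max_display]
    rest = ranked[max_display:]
    if rest:
        # Minor effects are summed into a single bar, as described above.
        shown.append(("other", sum(value for _, value in rest)))
    rows, total = [], base_value
    for label, value in shown:
        total += value
        rows.append((label, value, total))
    return rows
```

When the attributions of an efficient explanation sum to the prediction minus the base value, the last running total lands on the model’s prediction.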

The Network Plot shows first- and second-order interactions together. Node size reflects a feature’s individual effect, and edges encode the strength and direction of pairwise interactions. The SI Graph Plot extends this to higher orders by drawing interactions among three or more features as hyper-edges. The Bar Plot is a global view. It summarizes feature and interaction importance averaged over many explained instances, so it requires explaining several instances rather than one. In this example, it shows displacement and horsepower as the dominant features. It also shows the “Horsepower × Weight” interaction as a notable joint effect, with an attribution of around 1.4. Together, these views turn abstract Shapley values into insight about both local and global model behavior.
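
A global bar plot condenses many local explanations into one ranking. The sketch below assumes the ranking uses the mean absolute attribution per feature or interaction. That is an assumption, so check the shapiq documentation for the exact aggregation. The dict-of-tuples input is a hypothetical stand-in for shapiq’s explanation objects, and `mean_abs_importance` is a hypothetical name:

```python
from collections import defaultdict


def mean_abs_importance(explanations):
    """Global importance: mean |attribution| per feature or interaction across
    instances, sorted from most to least important.

    Each explanation maps a tuple of feature names (one name for a main effect,
    two for a pairwise interaction) to its attribution. A key missing from an
    explanation counts as zero for that instance.
    """
    totals = defaultdict(float)
    for explanation in explanations:
        for key, value in explanation.items():
            totals[key] += abs(value)
    n = len(explanations)
    means = {key: total / n for key, total in totals.items()}
    return sorted(means.items(), key=lambda item: item[1], reverse=True)
```

Taking absolute values matters here. Otherwise, a feature that pushes some predictions up and others down would average out and look unimportant.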

11. Reflecting on the Power of Transparency

Looking back, the walkthrough showed a practical way to unravel machine learning predictions with SHAP-IQ. Each step, from setting up the environment to training a random forest on the MPG dataset, built a foundation for dissecting how features shaped specific outcomes. Explanations across interaction orders revealed the depth of the model’s behavior, and the different visualizations clarified both individual and joint effects. The exercise underlines the value of transparency for validating model logic and ensuring accountability. This is especially true in domains where the rationale behind a prediction matters as much as the prediction itself.

Moving forward, the insights gained from SHAP-IQ visualizations have paved the way for actionable next steps in model refinement and application, allowing practitioners to make informed decisions. They can leverage these tools to identify and mitigate biases, optimize feature selection, or communicate findings to stakeholders with greater confidence. As machine learning continues to evolve, integrating such interpretability frameworks into standard workflows will be essential for fostering trust and driving innovation. Exploring additional datasets or model types with SHAP-IQ could further expand its utility, ensuring that transparency remains a cornerstone of technological advancement in predictive analytics.