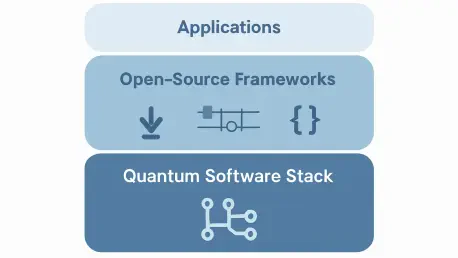

Across disciplines that prize computational power, a striking shift is under way: programmers are testing algorithms not against pristine theory but on noisy quantum hardware and precise simulators. That work demands practical abstractions that turn quantum promise into working code without hiding the physics that still dictates near-term performance limits. Quantum computing replaces bits with qubits that can exist in superposition and become entangled, offering potential speedups for particular classes of problems in chemistry, optimization, and machine learning. The catch is that programming at the level of physical devices is unforgiving: noise, decoherence, and device-specific quirks make even small circuits difficult to run reliably. Open-source frameworks step into that chaos with structured layers (circuit builders, simulators, compilers, algorithm libraries, and cloud access) so developers can explore ideas, benchmark trade-offs, and move from theory to executable workflows without reinventing the stack.

Why Open-Source Frameworks Matter

The value of these frameworks is best understood by the gap they bridge. On one side, there are qubits with limited coherence and unique gate sets; on the other, there are users trying to model molecules, improve logistics, or study learning systems. Toolkits provide standardized ways to describe circuits, define cost functions, call simulators with realistic noise, and submit jobs to actual devices. Because they are open, the libraries embody a shared understanding of what works now, not merely what might work on fault-tolerant machines in the distant future. The result is a faster cycle from idea to experiment, with code that others can read, reuse, and scrutinize.

Moreover, openness changes who gets to participate. A graduate student can run a variational algorithm on a laptop simulator, then schedule time on a cloud-connected processor without lining up capital for lab hardware. A startup can test an optimization workflow on an annealer this week and a gate-model device next week by swapping backends and adjusting formulations. Even established firms gain from well-documented interfaces that cut down integration risk. Instead of one-off prototypes, there is an ecosystem shaping shared practices—naming conventions, transpilation strategies, error mitigation patterns—that is steadily normalizing how quantum software is written and evaluated across domains.

The Big Six at a Glance

Several frameworks now anchor the quantum software landscape, each carrying a distinct philosophy while coexisting in practice. Qiskit offers a broad, modular stack centered on circuit construction, simulation, and transpilation tightly coupled with cloud access to IBM devices. Cirq leans into the realities of today’s hardware, making device topology, calibration data, and explicit noise models first-class citizens. PennyLane turns hybrid learning into an everyday workflow by treating quantum nodes as differentiable parts of larger neural networks. Rigetti’s Forest SDK, with pyQuil and quilc, orients development around Quil and fast compilation for superconducting qubits managed through cloud services.

Ocean SDK stands apart because it targets quantum annealing rather than universal gate operations, guiding users to express problems as QUBO or Ising models. ProjectQ rounds out the set as a lightweight, extensible environment favored for education and rapid prototyping. Together, these tools draw a practical map of choices. Some lean general-purpose, others emphasize a specific hardware stack or methodology. Yet they intersect in a useful way: code written for a particular goal—say, variational chemistry or scheduling optimization—can often be ported or compared across frameworks, giving teams freedom to explore without committing to a single path too early. The stack has matured to the point where “try before you standardize” is a workable strategy.

Framework Deep Dives

Qiskit’s modular design is central to its staying power. The core library, long known as Terra and since folded into the main qiskit package, provides circuit primitives, optimization passes, and a transpiler that maps logical circuits to target devices, while Aer delivers high-performance simulation that can emulate noise and device constraints. IBM’s cloud ties it together with queues, backend metadata, and calibration-aware routing, so algorithms are not only portable but also sensitive to real hardware conditions. This blend of abstraction and device intimacy makes Qiskit a dependable choice for education, chemistry studies using variational eigensolvers, early-stage optimization pilots, and explorations in quantum-enhanced machine learning. Its community depth translates into tutorials, benchmarks, and reusable patterns that feel production-adjacent even when experiments are small.

Cirq takes a complementary route by foregrounding NISQ behavior. Circuits are constructed with explicit reference to device topology, and noise can be introduced at the gate, channel, or measurement level to match observed performance. This enables careful fidelity studies, error-mitigation techniques, and calibration-informed experiments that measure algorithmic fragility under realistic conditions. Researchers often prefer Cirq for benchmarking and for exploring error correction prototypes, where small design choices ripple into measurable outcomes. Its Pythonic surface stays approachable, but the emphasis is on rigorous modeling: instead of assuming ideal devices, Cirq pushes users to think like experimentalists who expect drift, cross-talk, and constraints that shape what is possible today.
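To make measurement-level noise concrete, here is a minimal pure-Python sketch of an asymmetric readout-error model for one qubit, plus the matrix inversion that undoes it. It is not Cirq's API: the function names and the error rates `p01` and `p10` are assumptions chosen for illustration, and a real study would estimate those rates from calibration runs.

```python
def apply_readout_noise(p_ideal, p01, p10):
    """Map ideal [P(0), P(1)] to the probabilities a noisy readout reports.

    p01: probability that a true 0 is read as 1 (assumed value).
    p10: probability that a true 1 is read as 0 (assumed value).
    """
    p0, p1 = p_ideal
    obs0 = (1 - p01) * p0 + p10 * p1
    obs1 = p01 * p0 + (1 - p10) * p1
    return [obs0, obs1]


def invert_readout_noise(p_obs, p01, p10):
    """Undo the 2x2 confusion matrix by applying its analytic inverse."""
    det = 1 - p01 - p10  # determinant of [[1-p01, p10], [p01, 1-p10]]
    if abs(det) < 1e-12:
        raise ValueError("confusion matrix is singular; cannot invert")
    o0, o1 = p_obs
    p0 = ((1 - p10) * o0 - p10 * o1) / det
    p1 = (-p01 * o0 + (1 - p01) * o1) / det
    return [p0, p1]


ideal = [0.5, 0.5]  # e.g. a qubit prepared in an equal superposition
observed = apply_readout_noise(ideal, p01=0.02, p10=0.05)
recovered = invert_readout_noise(observed, p01=0.02, p10=0.05)
print("observed:", observed)
print("recovered:", recovered)
```

With finite shot counts the inversion can produce small negative probabilities, which is one reason practical tools often use constrained fits instead of a raw inverse.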

Common Themes Shaping the Stack

Hybrid quantum-classical workflows have become the lingua franca of near-term progress. Variational circuits parameterized by classical optimizers dominate algorithm design because they keep circuits shallow and adaptable to noise. Differentiable programming binds quantum models to established machine learning tools, while cloud orchestration ensures experiments scale beyond a single workstation. Within this pattern, frameworks compete less on ideology and more on execution details: how good the simulators are, how rich the error models become, how smooth the hand-off is between classical code and quantum kernels, and how transparent the device metadata remains when compiling.

Interoperability has shifted from a nice-to-have to an expectation. Plugins allow PennyLane to target multiple backends, while adapters let Cirq or ProjectQ reach into cloud services managed elsewhere. This cross-pollination reduces lock-in and invites comparative studies that strengthen claims about performance. Education is the other engine of growth. Well-written notebooks, curriculum-aligned modules, and active community forums have cast a wider net, onboarding students, data scientists, and domain specialists who would not otherwise wade through niche academic code. The field gains when newcomers can understand a variational loop or a QUBO formulation in a day rather than a semester.

Use Cases That See Early Traction

Chemistry and materials science continue to attract serious attention because variational eigensolvers fit current device limits. Frameworks offer libraries and workflows that take molecular Hamiltonians and produce ansätze tuned to shallow circuits, then optimize parameters under noise-aware simulation before sending jobs to actual hardware. The distance between textbook equations and runnable circuits has narrowed: with Qiskit or PennyLane, chemists can probe reaction energetics or model small active spaces, learning where device noise changes outcomes and where classical preconditioning pays off. Even when results match classical baselines, the exercise clarifies resource needs for larger-scale breakthroughs.
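The variational eigensolver loop described above can be sketched on a toy problem without any quantum library. The example assumes a one-qubit Hamiltonian H = aZ + bX with made-up coefficients (not a real molecule) and a single-parameter RY ansatz; the derivative-free scan stands in for whatever classical optimizer a framework would supply.

```python
import math


def ansatz_state(theta):
    """Amplitudes of RY(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>."""
    return math.cos(theta / 2), math.sin(theta / 2)


def energy(theta, a, b):
    """Expectation value of H = a*Z + b*X in the ansatz state."""
    c0, c1 = ansatz_state(theta)
    exp_z = c0 * c0 - c1 * c1  # <Z>
    exp_x = 2 * c0 * c1        # <X> for real amplitudes
    return a * exp_z + b * exp_x


def minimize_by_scan(a, b, steps=3600):
    """Derivative-free search over theta in [0, 2*pi)."""
    best_theta, best_energy = 0.0, energy(0.0, a, b)
    for k in range(1, steps):
        theta = 2 * math.pi * k / steps
        e = energy(theta, a, b)
        if e < best_energy:
            best_theta, best_energy = theta, e
    return best_theta, best_energy


a, b = 0.5, -0.3  # hypothetical Hamiltonian coefficients
theta_opt, e_opt = minimize_by_scan(a, b)
exact = -math.sqrt(a * a + b * b)  # exact ground energy of a*Z + b*X
print("variational:", e_opt, "exact:", exact)
```

Because this ansatz can reach every real one-qubit state, the variational minimum matches the exact ground energy; on larger problems the gap between the two is what ansatz design tries to close.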

Optimization remains a two-track story. Ocean SDK provides a disciplined path to map routing, scheduling, or portfolio allocation into QUBO formulations suited to annealers, sometimes with immediate operational utility when problem sizes and connectivity align. In parallel, gate-model stacks pursue variational optimization routines that rely on cost functions and parameterized circuits, pushing small- to medium-scale instances through simulators and cloud devices. Financial risk balancing, supply chain adjustments, and energy dispatch have all served as proving grounds where teams compare annealing-based heuristics against hybrid classical-quantum loops, learning which formulations survive the move from elegant theory to noisy execution.
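As a small illustration of what mapping a problem into QUBO form involves, the sketch below encodes "pick exactly one of three options at minimum cost" as a QUBO and solves it by brute force. The costs, the penalty weight, and the dictionary layout are assumptions for this example; an annealer or sampler would replace the exhaustive search on realistic sizes.

```python
from itertools import product


def build_one_hot_qubo(costs, penalty):
    """QUBO for: minimize sum(cost_i * x_i) subject to sum(x_i) == 1.

    The constraint is enforced by penalty * (sum(x_i) - 1)**2. Expanding it
    with x_i**2 == x_i gives linear terms (cost_i - penalty), pairwise terms
    2 * penalty, and a constant offset of penalty.
    """
    q = {}
    n = len(costs)
    for i in range(n):
        q[(i, i)] = costs[i] - penalty
        for j in range(i + 1, n):
            q[(i, j)] = 2 * penalty
    return q, penalty


def qubo_energy(q, x):
    """Evaluate x^T Q x for a binary assignment x."""
    return sum(w * x[i] * x[j] for (i, j), w in q.items())


def brute_force(q, n):
    """Exhaustively search all 2**n assignments (only viable for tiny n)."""
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(q, x))


costs = [3.0, 1.0, 2.0]  # hypothetical option costs
q, offset = build_one_hot_qubo(costs, penalty=10.0)
best = brute_force(q, len(costs))
print(best, qubo_energy(q, best) + offset)  # (0, 1, 0) 1.0
```

The penalty must outweigh any cost saving from violating the constraint; choosing it too small lets invalid assignments win, while choosing it too large can flatten the cost differences the hardware has to resolve.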

Hardware Realities and Technical Hurdles

The constraints of NISQ hardware shape everything from circuit style to compiler design. Limited qubit counts restrict the size of encodings and the complexity of entanglement patterns, while finite coherence times force circuits to be shallow or heavily mitigated. Error rates vary across gates and qubits, making placement and routing nontrivial; a good transpiler can salvage performance by threading circuits along higher-fidelity paths or by inserting dynamical decoupling where it helps. Even measurement error, often overlooked by newcomers, needs modeling and correction to produce stable results. Frameworks have grown more transparent about these realities by exposing device calibrations and letting users control compilation passes.
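One way to picture "threading circuits along higher-fidelity paths" is as a shortest-path search over the device coupling graph, with each edge weighted by the negative log of its two-qubit gate fidelity. The sketch below is a simplified assumption about how such routing could work, not the algorithm of any particular transpiler, and the fidelities are invented.

```python
import heapq
import math


def best_fidelity_path(edges, source, target):
    """Find the path whose product of edge fidelities is largest.

    edges: dict {(u, v): fidelity} for an undirected coupling graph,
           with each fidelity in (0, 1].
    Minimizing the sum of -log(fidelity) maximizes the product.
    """
    graph = {}
    for (u, v), fidelity in edges.items():
        weight = -math.log(fidelity)
        graph.setdefault(u, []).append((v, weight))
        graph.setdefault(v, []).append((u, weight))

    dist = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u == target:
            break
        if d > dist.get(u, math.inf):
            continue  # stale heap entry
        for v, weight in graph.get(u, []):
            nd = d + weight
            if nd < dist.get(v, math.inf):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))

    if target not in dist:
        raise ValueError("target is not reachable from source")
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    path.reverse()
    return path, math.exp(-dist[target])


# Hypothetical calibration data: the two-hop route uses weaker couplers.
calibration = {(0, 1): 0.90, (1, 3): 0.90, (0, 2): 0.99, (2, 4): 0.99, (4, 3): 0.99}
path, fidelity = best_fidelity_path(calibration, source=0, target=3)
print(path, fidelity)
```

Here the longer three-hop route wins because its combined fidelity (0.99 cubed) exceeds that of the shorter route (0.90 squared), which is the kind of trade-off calibration-aware routing has to weigh.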

Fault-tolerant error correction remains the looming challenge. Codes that promise logical qubits require significant overhead, and the engineering burden grows quickly with target fidelity. In the meantime, error mitigation fills the gap—a basket of techniques that rescale, extrapolate, or otherwise compensate for errors without fully correcting them. Cirq and Qiskit support such workflows through noise-injection simulation and custom passes, while PennyLane users experiment with gradient-aware mitigation. The net effect is pragmatic: developers design for today’s limits, and the frameworks stabilize expectations by making noise and topology non-negotiable inputs to algorithm design.
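The "extrapolate" family of mitigation techniques can be illustrated with zero-noise extrapolation: measure an expectation value at several deliberately amplified noise levels, then extrapolate back to zero noise. The sketch below uses Richardson (polynomial) extrapolation in plain Python; the noise-response curve is synthetic and assumed purely for demonstration.

```python
def richardson_extrapolate(scales, values):
    """Evaluate at scale 0 the polynomial passing through (scale, value) points.

    Uses the Lagrange form: each value is weighted by
    prod_{j != i} x_j / (x_j - x_i).
    """
    if len(scales) != len(values):
        raise ValueError("scales and values must have the same length")
    if len(set(scales)) != len(scales):
        raise ValueError("noise scale factors must be distinct")
    total = 0.0
    for i, (xi, yi) in enumerate(zip(scales, values)):
        weight = 1.0
        for j, xj in enumerate(scales):
            if j != i:
                weight *= xj / (xj - xi)
        total += yi * weight
    return total


def synthetic_expectation(scale):
    """Made-up noise response: the ideal value 1.0 degraded by noise."""
    return 1.0 - 0.1 * scale + 0.01 * scale ** 2


scales = [1.0, 2.0, 3.0]  # 1.0 is the device's native noise level
values = [synthetic_expectation(s) for s in scales]
mitigated = richardson_extrapolate(scales, values)
print("raw:", values[0], "mitigated:", mitigated)
```

The recovery is exact here only because the synthetic curve is itself a quadratic; on real hardware the extrapolated value carries amplified statistical error, so more scale factors are not automatically better.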

Open Source Advantages and Community Dynamics

Open repositories transform quantum software from isolated prototypes into shared infrastructure. Contributors add device models, transpiler passes, and reference implementations of algorithms, allowing users to stand on each other’s shoulders rather than start from scratch. Standards emerge not by fiat but by practice: canonical circuit representations, metadata schemas for backends, and naming conventions that make code discoverable. This accelerates reproducibility, a critical need in a field where subtle differences in noise modeling can swing results. With transparent code and tests, errors are caught earlier and claims are easier to verify.

The community effect also fuels specialization. Startups extend frameworks with domain-specific modules—for finance, logistics, or drug discovery—while educators craft courseware that reaches far beyond research institutions. Interoperability work reduces the cost of experimentation by letting teams switch devices or simulators without rewriting entire stacks. Importantly, open source attracts talent; contributors gain visibility and experience that translate into research opportunities and jobs. The ecosystem thus becomes a talent engine and a knowledge commons, pushing advances in compilation, benchmarking, and hybrid orchestration faster than any single organization could manage alone.

Guidance for Teams Choosing a Stack

Selecting a framework is less about brand loyalty and more about problem fit, available hardware, and engineering style. Teams exploring broad gate-model development with steady community support often start with Qiskit, especially when IBM backends are in scope. Researchers focused on NISQ realism, calibration-informed studies, or careful benchmarking frequently pick Cirq for its device-oriented abstractions. Those building hybrid machine learning pipelines gravitate toward PennyLane because it treats quantum nodes as native citizens of TensorFlow, PyTorch, or JAX, enabling gradient-based training across the full stack without awkward glue code.

Hardware alignment matters as well. Forest SDK remains compelling for hands-on development targeting Rigetti devices, where Quil and quilc provide tight control over compilation and mapping. For optimization framed as QUBO or Ising, Ocean SDK delivers a prescriptive path that shortens the distance to annealing hardware and operational use cases. ProjectQ suits classrooms and rapid iteration when minimal setup and clean abstractions trump ecosystem breadth, yet it can still reach IBM backends if needed. Whatever the choice, planning for cloud-native, asynchronous workflows and investing in both quantum concepts and solid software engineering practices will pay dividends as experiments grow and benchmarks become more rigorous.

Spotlight On Qiskit And Cirq

Qiskit’s appeal lies in its breadth and its stability. The interplay between Terra and Aer covers the lifecycle from circuit design to noise-aware testing, while backend integration exposes calibration data and runtime controls. Tutorials and application modules guide newcomers through chemistry, optimization, and quantum machine learning use cases without hiding the need to think carefully about topology and depth. As teams mature, they can customize transpiler passes, integrate external optimizers, and automate job management across simulators and devices, building repeatable pipelines that feel familiar to classical engineers.

Cirq, by contrast, invites a laboratory mindset. Device graphs, gate definitions, and noise channels are explicit, enabling experiments that probe where fidelity breaks and how mitigation helps. Hybrid loops are still straightforward, but the prevailing goal is to produce results that reflect the hardware’s character rather than an idealized abstraction. This stance resonates with benchmarking consortia and research groups who must compare devices or validate algorithmic claims under realistic conditions. For those stakeholders, Cirq’s insistence on modeling nuance is not overhead—it is the experiment.

Spotlight On PennyLane, Forest, Ocean, And ProjectQ

PennyLane made differentiable programming a central paradigm for quantum work. By integrating with mainstream ML frameworks, it lets users define quantum nodes whose parameters participate in gradient descent alongside classical layers. That single design choice reshaped quantum machine learning from a bespoke art into an extension of everyday ML practice. Researchers can test ansätze, try hardware-in-the-loop training, and evaluate generalization without abandoning familiar tooling. The result is faster iteration and clearer comparisons to classical baselines.
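The idea of quantum parameters taking part in gradient descent rests on rules such as the parameter-shift rule, which obtains an exact gradient from two extra circuit evaluations. The sketch below demonstrates it in plain Python on a one-qubit circuit; it is a stand-in for the concept, not PennyLane code, and the learning rate and starting angle are arbitrary assumptions.

```python
import math


def expval_z(theta):
    """<Z> after RY(theta)|0>, computed from the state amplitudes."""
    c0 = math.cos(theta / 2)
    c1 = math.sin(theta / 2)
    return c0 * c0 - c1 * c1


def parameter_shift_grad(circuit, theta):
    """Gradient from two shifted evaluations, valid for gates like RY.

    d<Z>/dtheta = (f(theta + pi/2) - f(theta - pi/2)) / 2
    """
    shift = math.pi / 2
    return (circuit(theta + shift) - circuit(theta - shift)) / 2.0


def train(theta=0.4, learning_rate=0.4, steps=100):
    """Plain gradient descent that drives <Z> toward its minimum of -1."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_grad(expval_z, theta)
    return theta, expval_z(theta)


theta_final, value_final = train()
print(theta_final, value_final)  # theta approaches pi, <Z> approaches -1
```

Unlike a finite-difference estimate, the shifted evaluations are themselves ordinary circuit runs, which is why the same rule can be applied on hardware as well as in simulation.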

Forest SDK focuses on developers who want close contact with Rigetti hardware and compilation research. With pyQuil for program construction and quilc for fast, hardware-aware compilation, it emphasizes practical mapping and execution through cloud services. This makes it a strong fit for studies where compilation choices drive performance and for teams building workflows that rely on superconducting qubits. Ocean SDK, meanwhile, is optimized for annealing: it encourages problem formulations that match the hardware’s strengths and offers a straight line from real-world optimization tasks to QUBO/Ising models. ProjectQ balances the ecosystem as a minimalistic, modular framework suited to classrooms and quick proofs of concept, while retaining the option to aim at IBM devices when projects move beyond the whiteboard.

Interoperability, Cloud, And Hybrid Momentum

Cloud-delivered hardware access is now table stakes. Frameworks expose APIs to submit jobs, query calibration data, and retrieve results asynchronously, which enables larger experimental campaigns and collaborative studies across institutions. Hybrid orchestration—mixing classical HPC, ML libraries, and quantum backends—has matured into standard practice as job queues, cost controls, and usage analytics align with enterprise expectations. This normalization matters: it moves quantum work from ad hoc scripts to manageable pipelines that can be versioned, audited, and reproduced.
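The asynchronous submit-and-retrieve pattern can be sketched with Python's `asyncio`. Everything here is hypothetical: `FakeBackend`, its `run` method, and the circuit labels are invented stand-ins for a real cloud client, and the short sleep merely imitates queue latency.

```python
import asyncio
import random


class FakeBackend:
    """Hypothetical stand-in for a cloud backend; not a real SDK class."""

    def __init__(self, name, seed=0):
        self.name = name
        self._rng = random.Random(seed)

    async def run(self, circuit_label, shots):
        await asyncio.sleep(0.01)  # imitate time spent waiting in a queue
        ones = sum(self._rng.random() < 0.5 for _ in range(shots))
        return {
            "circuit": circuit_label,
            "backend": self.name,
            "counts": {"0": shots - ones, "1": ones},
        }


async def run_campaign(backend, labels, shots=100):
    """Submit every job up front, then wait for all results together."""
    jobs = [backend.run(label, shots) for label in labels]
    return await asyncio.gather(*jobs)  # results keep submission order


results = asyncio.run(run_campaign(FakeBackend("simulator"), ["bell", "ghz", "qft"]))
for result in results:
    print(result["circuit"], result["counts"])
```

Submitting all jobs before awaiting any of them lets queue time overlap across jobs, which matters more as campaigns grow from a handful of circuits to hundreds.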

Interoperability is the quiet force multiplier. Backends and plugins let a single codebase exercise different devices or simulators, minimizing sunk cost and bias in comparisons. That flexibility encourages genuine bake-offs among algorithms, mitigators, and compilers, refining community intuition about what helps in practice. It also creates a healthier market. Users can select devices that match a problem’s structure without uprooting their software, while vendors meet developers where they already are by offering compatible interfaces rather than demanding full-stack rewrites.
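A rough sketch of how one codebase can exercise different backends: experiment code depends only on a small interface, and each simulator or device adapter implements it. The `Backend` protocol, both simulator classes, and the `attenuation` factor are assumptions invented for this illustration rather than any framework's plugin API.

```python
import math
from typing import List, Protocol


class Backend(Protocol):
    """Minimal interface the experiment code depends on (hypothetical)."""

    def expectation_z(self, theta: float) -> float: ...


class IdealSimulator:
    """Noise-free result for <Z> after RY(theta)|0>."""

    def expectation_z(self, theta: float) -> float:
        return math.cos(theta)


class NoisySimulator:
    """Same circuit with the signal shrunk by an assumed attenuation factor."""

    def __init__(self, attenuation: float) -> None:
        self.attenuation = attenuation

    def expectation_z(self, theta: float) -> float:
        return self.attenuation * math.cos(theta)


def sweep(backend: Backend, thetas: List[float]) -> List[float]:
    """Experiment code: unchanged no matter which backend is passed in."""
    return [backend.expectation_z(t) for t in thetas]


thetas = [0.0, math.pi / 2, math.pi]
ideal = sweep(IdealSimulator(), thetas)
noisy = sweep(NoisySimulator(attenuation=0.9), thetas)
for t, i, n in zip(thetas, ideal, noisy):
    print(f"theta={t:.3f} ideal={i:+.3f} noisy={n:+.3f}")
```

Keeping the experiment function free of backend-specific calls is what makes side-by-side comparisons cheap: a new device only needs a new adapter class.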

Education, Skills, And Organizational Readiness

Adoption hinges not only on code but also on people. The most effective teams blend quantum intuition with strong software engineering discipline: version control, testing, data pipelines, and performance profiling. Frameworks reduce barriers, yet the learning curve remains steep without structured pathways. Here, open curricula and hands-on notebooks have been decisive, enabling self-paced learning that bridges physics-heavy concepts and accessible programming patterns. As skill sets spread, organizations can move beyond pilots and design experiments that measure value against business metrics.

Strategic readiness also includes expectation management. Near-term wins will be domain-specific and often hybrid by design. Benchmarks should compare against strong classical baselines and justify the engineering cost of quantum components. Frameworks support this realism by making it easy to swap simulators for devices, turn noise on and off, and log metrics that capture both quality and cost. Teams that build this rigor into their practices avoid hype cycles and generate findings that hold up under scrutiny, which in turn attracts support for the next wave of work.

The Road Ahead For Quantum Software Builders

The next phase will test how well the ecosystem converts research momentum into sustained, scalable practice. Improvements in qubit quality and compiler sophistication will lift ceilings on circuit depth, while advances in error mitigation and early error correction will shift the cost-benefit calculus for certain algorithms. Frameworks are already preparing by exposing richer device models, supporting longer-running hybrid jobs, and standardizing artifacts so results can be shared and reproduced across institutions. As interoperability tightens, cross-framework comparisons will become more routine, clarifying where annealing, gate-model, or hybrid approaches offer the best trade-offs.

For practitioners, the path forward involves operational discipline. Treat quantum jobs like any other distributed workload: monitor queues, track spend, and collect telemetry that informs decisions. Lean into differentiable programming where learning signals exist and into structured optimization where QUBO fits naturally. Keep code portable, and keep assumptions explicit. The ecosystem has matured enough to reward those habits with faster iteration and more credible claims. As frameworks continue to evolve, the teams most likely to benefit will be those that match problem shape to hardware reality and let the data guide their choices.

From Prototype To Practice

The momentum around open-source quantum frameworks points toward tangible next steps rather than abstract promises. Teams that scope problems to hardware constraints move faster by using noise-aware simulators first, then shifting to cloud devices only when results justify the run. Differentiable pipelines and QUBO mappings provide repeatable routes from idea to benchmark, while interoperability reduces the cost of exploring alternatives. With documentation, examples, and community support, onboarding has accelerated and participation has broadened beyond physics labs to include software engineers and domain experts. In this environment, practical wins come from aligning algorithms with device strengths, codifying experiments as reproducible workflows, and treating hybrid orchestration as standard engineering rather than novelty.