The rapid growth of artificial intelligence workloads is straining traditional data infrastructure, and AI-driven applications are expected to push global data generation sharply higher in the coming years. Centralized cloud models, once treated as the default answer, are struggling with escalating costs, unpredictable performance, and stringent regulatory demands. Organizations therefore need new ways to meet AI’s computational requirements while staying efficient and compliant.

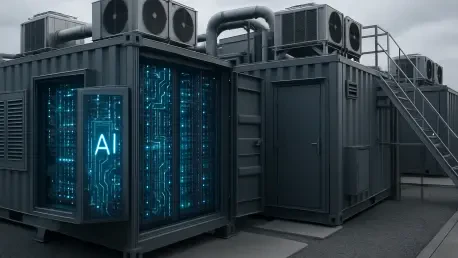

Edge computing has emerged as a transformative answer, shifting processing closer to data sources to minimize latency and enhance control. Modular data centers, with their adaptable and scalable designs, stand at the forefront of this shift, offering a way to address operational bottlenecks, financial unpredictability, and legal constraints. These compact, prefabricated units are redefining how organizations deploy infrastructure for AI, making rapid implementation possible even in unconventional locations.

This guide focuses on sustainability, data sovereignty, and operational efficiency as the main drivers behind the adoption of modular solutions. By exploring these areas, it aims to provide actionable insights into how such infrastructure can support AI’s unique demands while aligning with environmental and regulatory priorities.

Why Modular Data Centers Matter for AI

Artificial intelligence applications require infrastructure capable of delivering low latency, predictable costs, and robust performance to handle intensive training and inference tasks. Traditional cloud setups often fall short, with egress fees and performance variability hindering seamless operation. Modular data centers present a compelling alternative by enabling localized processing that reduces delays and offers greater financial transparency.
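To illustrate why localized processing reduces delays, the sketch below estimates the physical lower bound on round-trip time over optical fiber. It relies on the widely used approximation that light travels through fiber at roughly 200,000 km/s. The function name and the example distances are hypothetical, chosen only for illustration, and real latency will be higher once routing, queuing, and processing are included.

```python
# Light in optical fiber travels at roughly two thirds of its vacuum speed,
# about 200,000 km/s, which is 200 km per millisecond (approximation).
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on fiber round-trip time, ignoring routing and queuing."""
    if distance_km < 0:
        raise ValueError("distance_km must be non-negative")
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Hypothetical distances, for illustration only.
    scenarios = [
        ("modular unit in an adjacent lot", 0.2),
        ("distant cloud region", 1500.0),
    ]
    for label, km in scenarios:
        print(f"{label}: at least {min_round_trip_ms(km):.3f} ms round trip")
```

Propagation delay is only one component of latency, but it is the one that no amount of network tuning can remove, which is why distance matters for interactive inference.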

The advantages of these systems extend beyond speed and cost. Their rapid deployment capabilities allow organizations to establish AI-ready infrastructure in a fraction of the time required for conventional builds, while scalability ensures adaptability to fluctuating workloads. Energy efficiency is another critical benefit, as modular designs often incorporate advanced cooling and power management to minimize environmental impact, alongside enhanced data control that supports compliance with local laws.

Sustainability and sovereignty are not just added perks but essential components for meeting modern business and regulatory expectations. As AI continues to permeate industries like healthcare, education, and government, the ability to maintain eco-friendly operations and secure data within jurisdictional boundaries becomes paramount. Modular solutions offer a pathway to balance technological advancement with these pressing obligations.

Key Strategies for Implementing Modular Data Centers

Adopting modular data centers as edge solutions for AI workloads demands a strategic approach that encompasses deployment, integration, and sustainable practices. These systems are not merely physical structures but comprehensive ecosystems designed to optimize performance at the edge. By focusing on practical steps, organizations can harness their potential to transform AI infrastructure.

The following strategies delve into critical aspects such as speed of setup, technology optimization, and regulatory adherence. Each area is supported by real-world examples that demonstrate the tangible impact of modular solutions across diverse sectors. These insights aim to guide decision-makers in navigating the complexities of edge computing for AI.

Rapid Deployment and Flexibility in Infrastructure

Modular data centers excel in their ability to be deployed swiftly in varied environments, from urban parking lots to remote rural areas. Companies specializing in these solutions have pioneered prefabricated units that can be operational within months, bypassing the lengthy timelines associated with traditional construction. This speed is crucial for organizations needing to launch AI services under tight deadlines.

The prefabricated nature of these units also significantly cuts down on embodied carbon by reducing the need for extensive on-site building materials like concrete. Their flexible design supports diverse power configurations, ensuring compatibility with local grid conditions or renewable energy sources. Such adaptability makes them ideal for dynamic AI applications where infrastructure must evolve with demand.

Case Study: Hospital AI Service Launch

A notable example involves a hospital that used a modular data center to roll out AI-driven diagnostic services. By installing the unit in an adjacent lot, the facility achieved low-latency data processing, which supported patient care through faster and more accurate analyses. The implementation was completed within a few months, which shows the potential of modular systems in time-sensitive sectors.

Optimizing Data Storage and Energy Efficiency

Efficient data storage is a cornerstone of sustainable AI operations within modular environments. File systems that protect data with erasure coding, rather than keeping several full copies of every object, can provide redundancy with far less raw capacity, which lowers storage overhead and the associated costs. This approach lets the vast datasets common in AI workloads be managed without unnecessary resource drain.
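The storage saving from erasure coding can be made concrete with simple arithmetic. The sketch below compares the raw capacity needed per unit of usable data under full replication and under a generic k+m erasure code (k data shards plus m parity shards). The specific parameters (three copies, a 10+4 layout, 500 TB of usable data) are assumptions chosen for illustration, not figures from any particular product or deployment.

```python
def raw_per_usable_replication(copies: int) -> float:
    """Raw capacity consumed per unit of usable data with full replication."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return float(copies)


def raw_per_usable_erasure(data_shards: int, parity_shards: int) -> float:
    """Raw capacity consumed per unit of usable data with a k+m erasure code."""
    if data_shards < 1 or parity_shards < 0:
        raise ValueError("need data_shards >= 1 and parity_shards >= 0")
    return (data_shards + parity_shards) / data_shards


if __name__ == "__main__":
    usable_tb = 500.0  # hypothetical dataset size
    schemes = [
        ("3x replication", raw_per_usable_replication(3)),
        ("10+4 erasure coding", raw_per_usable_erasure(10, 4)),
    ]
    for name, factor in schemes:
        print(f"{name}: {factor:.2f}x raw, {usable_tb * factor:.0f} TB for "
              f"{usable_tb:.0f} TB usable")
```

Under these assumptions, replication needs three times the usable capacity while the 10+4 layout needs 1.4 times. The trade-off is extra computation for encoding and for rebuilding lost shards.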

Energy efficiency is equally vital, and ultra-low-power hardware plays a central role in cutting both consumption and cooling requirements. With such hardware, modular data centers can run on less energy than traditional setups, which supports broader sustainability goals. This dual focus on storage and power optimization is essential for long-term operational viability.

Example: University Research Facility in Africa

In a remote African university, a modular data center equipped with cutting-edge storage solutions enabled sustainable AI research despite limited resources. The system’s low-power design drastically reduced energy needs, allowing the facility to rely on local renewable sources. This setup not only supported advanced studies but also set a benchmark for eco-conscious infrastructure in challenging environments.

Ensuring Data Sovereignty and Compliance

Data sovereignty remains a critical concern for industries handling sensitive information, particularly under strict regulatory frameworks. On-premise modular solutions offer a secure environment where AI training, inference, and storage can occur within defined jurisdictional boundaries, mitigating risks associated with external data handling.

Integrating such infrastructure requires careful planning to ensure compliance with local laws while maintaining operational efficiency. Steps include selecting hardware and software that prioritize data privacy, as well as establishing robust security protocols to protect against breaches. This localized control is indispensable for sectors where trust and accountability are non-negotiable.
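One way to make jurisdictional boundaries enforceable in software is to tag each dataset with the jurisdictions where it may reside and check that tag before any placement. The sketch below is a minimal illustration of that idea. The `Site` and `Dataset` types, the site names, and the country codes are all hypothetical, and a real deployment would derive its rules from legal review rather than from a hard-coded set.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    name: str
    jurisdiction: str  # hypothetical tag, e.g. a two-letter country code


@dataclass(frozen=True)
class Dataset:
    name: str
    allowed_jurisdictions: frozenset[str]


def placement_allowed(dataset: Dataset, site: Site) -> bool:
    """True if the site's jurisdiction is one the dataset may reside in."""
    return site.jurisdiction in dataset.allowed_jurisdictions


def eligible_sites(dataset: Dataset, sites: list[Site]) -> list[Site]:
    """Filter candidate sites down to those that satisfy the residency rule."""
    return [site for site in sites if placement_allowed(dataset, site)]


if __name__ == "__main__":
    # Hypothetical inventory, for illustration only.
    sites = [
        Site("on-premise-modular-unit", "DE"),
        Site("remote-cloud-region", "US"),
    ]
    scans = Dataset("patient-scans", frozenset({"DE"}))
    for site in eligible_sites(scans, sites):
        print(f"{scans.name} may be stored at {site.name}")
```

Running the same check before training and inference jobs are scheduled, not only before storage, keeps all three activities inside the permitted boundary.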

Real-World Impact: Government Data Localization

A government agency successfully adopted modular data centers to adhere to stringent data localization mandates. By keeping sensitive information under local jurisdiction, the agency avoided potential legal repercussions while ensuring secure AI processing. This case highlights how modular infrastructure can align technological needs with regulatory imperatives, safeguarding national interests.

Conclusion: The Future of AI with Modular Edge Solutions

Modular data centers address several of the hardest challenges in AI infrastructure. Rapid deployment, energy efficiency, and data sovereignty stand out as their main advantages across diverse sectors. Hospitals, universities, and regulated industries are the primary beneficiaries, gaining tools to manage latency, cost, and compliance hurdles with greater agility.

For organizations contemplating this transition, the next step involves a thorough evaluation of hybrid models that blend cloud and edge capabilities to suit specific needs. Prioritizing sustainability in every decision, from hardware selection to power sourcing, remains crucial for long-term success. Partnering with integrated solution providers can streamline this journey, offering expertise and reducing implementation complexities.

Staying ahead in the AI landscape will depend on continuous innovation within modular frameworks. Exploring emerging technologies and fostering collaborations will help infrastructure evolve alongside AI demands. This proactive stance supports both operational resilience and a competitive edge in an increasingly data-driven world.