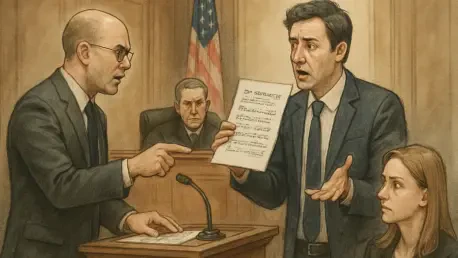

As technology permeates every facet of professional life, the legal field is grappling with a startling challenge: attorneys increasingly turn to general-purpose AI tools for help with case preparation, only to face severe repercussions when these systems produce flawed or fabricated output. These AI-generated errors, often termed “hallucinations,” have led to citations of nonexistent legal precedents and fictitious court rulings in submissions, undermining the integrity of the judicial process. With courts now imposing sanctions on lawyers for such mistakes, the stakes are high. The growing reliance on tools like ChatGPT and Claude has sparked a debate about the balance between technological innovation and ethical responsibility in law. The issue is compounded by privacy concerns and the potential breach of attorney-client privilege when sensitive data is entered into unsecured systems. As the legal profession navigates this uncharted territory, the risks of AI misuse are becoming clear.

The Perils of AI-Generated Errors in Legal Practice

AI hallucinations are not a minor glitch but a significant threat to the credibility of legal work, as evidenced by hundreds of documented cases in which attorneys submitted inaccurate information generated by general-purpose AI models. Research from legal scholars indicates that in the United States alone, more than 240 incidents have been recorded in which fabricated case law or incorrect legal interpretations made their way into court filings. Such errors can result in sanctions, damaged reputations, and even the loss of a license to practice. Beyond the embarrassment of citing imaginary precedents lies a deeper issue: the erosion of trust in the judicial system when technology fails to deliver reliable results. Experts warn that high-stakes areas like criminal prosecutions or Social Security benefits demand a precision that current general-purpose AI tools often cannot provide. The legal community must confront the reality that dependence on unverified AI output could jeopardize not just individual cases but the profession’s standards as a whole.

Navigating Privacy Risks and Ethical Boundaries

Another pressing concern is the risk to client confidentiality: entering sensitive information into general-purpose platforms could expose identifiable data and violate attorney-client privilege. Legal professionals are cautioned that such breaches might not only compromise individual cases but also invite severe ethical repercussions from regulatory bodies. Unlike controlled AI systems designed specifically for legal applications, which incorporate safeguards like encrypted prompts, many general-purpose tools lack robust protections against data leaks. Industry leaders stress that while technology can enhance efficiency, the ultimate responsibility for maintaining privilege rests with the attorney. As courts grow increasingly intolerant of AI-related missteps, the call for comprehensive education on responsible AI use has gained momentum. Training programs that cover the limitations and ethical implications of these tools are seen as essential to keeping past oversights from recurring.