Imagine large language models answering complex queries in a fraction of the time they take today, at lower cost and without losing precision. That is the goal of an approach known as Speculative Cascades. Large Language Models (LLMs), the backbone of modern AI applications like chatbots, search engines, and coding assistants, often grapple with high computational costs and slow response times during inference, the process of generating outputs from user inputs. Speculative Cascades is a hybrid method that combines two existing techniques, cascades and speculative decoding, to optimize LLM performance. By addressing inefficiencies that have long hindered scalability, it is generating significant interest within the AI research community. It promises a smarter, faster way to deploy AI at scale and could make the technology more accessible for diverse applications. This article explores the challenges the method tackles, the mechanisms behind it, and the broader implications for the future of AI systems.

Unpacking the Core Challenge of LLM Inference

The primary hurdle with LLMs lies in the inference phase, where every generated token requires a pass through a model with billions of parameters, often at the expense of considerable time and energy. As these models are increasingly integrated into everyday tools, the demand for efficient processing has never been more critical. The computational burden not only drives up operational costs but also limits the ability to deploy LLMs in real-time scenarios where speed is paramount. Existing strategies to mitigate these issues, such as cascades and speculative decoding, have offered partial solutions but come with inherent limitations that prevent them from fully resolving the problem. Speculative Cascades steps into this gap, proposing a hybrid framework that aims to harmonize the competing demands of speed, cost, and output quality.

This challenge is compounded by the sheer scale at which LLMs operate today. With billions of parameters to compute, even a slight delay in response time can accumulate into significant inefficiencies when handling millions of queries daily. The financial implications are equally staggering, as the energy and hardware required to sustain such operations can be prohibitively expensive for many organizations. Speculative Cascades offers a potential lifeline by rethinking how models prioritize and delegate tasks during inference. Instead of relying on a single approach, it leverages a combination of methods to ensure that resources are allocated more intelligently, paving the way for broader adoption of LLMs across industries hungry for AI-driven solutions.

Decoding the Mechanics of Key Techniques

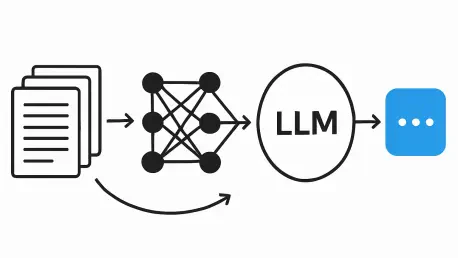

At the heart of understanding Speculative Cascades lies a grasp of its foundational components—cascades and speculative decoding. Cascades operate on a tiered system where a smaller, less resource-intensive model initially attempts to answer a query. If the smaller model is confident in its response, it provides the output directly, conserving the computational power that a larger model would demand. However, if confidence is low, the task is handed over to a more capable model, which starts the process anew. This sequential nature, while cost-effective for straightforward queries, introduces delays when deferral occurs, as the larger model must begin from scratch, negating some of the intended efficiency gains.
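The cascade logic described above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation: the `Model` type, the `cascade` function, the `threshold` value, and the two toy models are all hypothetical stand-ins, and a model is assumed to return its answer together with a confidence score between 0 and 1.

```python
from typing import Callable, Tuple

# Assumption: a "model" is any callable returning (answer, confidence in [0, 1]).
Model = Callable[[str], Tuple[str, float]]


def cascade(query: str, small: Model, large: Model,
            threshold: float = 0.8) -> Tuple[str, str]:
    """Return (answer, name of the model that produced it)."""
    answer, confidence = small(query)
    if confidence >= threshold:
        # The small model is confident enough: answer directly.
        return answer, "small"
    # Deferral: the large model starts from scratch, so the time spent
    # on the small model's attempt is added on top of the large model's.
    answer, _ = large(query)
    return answer, "large"


# Toy stand-ins for real models (hypothetical lookup tables).
def toy_small(query: str) -> Tuple[str, float]:
    known = {"2+2": ("4", 0.99)}
    return known.get(query, ("unsure", 0.30))


def toy_large(query: str) -> Tuple[str, float]:
    known = {"2+2": ("4", 0.99), "capital of France": ("Paris", 0.98)}
    return known.get(query, ("unknown", 0.50))


print(cascade("2+2", toy_small, toy_large))                # ('4', 'small')
print(cascade("capital of France", toy_small, toy_large))  # ('Paris', 'large')
```

The second call shows the cost of deferral: both models run one after the other, which is the sequential delay the paragraph above describes.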

Speculative decoding, on the other hand, focuses on reducing latency through parallel processing. In this method, a smaller “drafter” model generates a sequence of tokens, and a larger “target” model verifies all of them in a single parallel pass. If the draft aligns with the larger model’s expectations, multiple tokens are accepted at once, speeding up the response significantly. Yet this approach falters when a token mismatches: the matching tokens before it are kept, but the mismatched token and everything drafted after it are discarded, and drafting restarts from the corrected point. An early mismatch therefore wastes most of the draft, even when the drafter’s wording was a perfectly good answer. While the method guarantees output equivalent to what the larger model would have produced on its own, the rigidity of this exact-match verification often undermines potential computational savings. Speculative Cascades builds on these techniques by integrating their strengths, introducing a dynamic decision-making process that avoids the pitfalls of sequential delays and strict rejections.
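One draft-and-verify round can be sketched as follows. This is a simplified greedy variant under stated assumptions: each model is a hypothetical function that returns its single most likely next token, the helper `follow` builds toy models that recite a fixed sentence, and the target's checks run in a loop here although a real system would score all draft positions in one parallel forward pass.

```python
from typing import Callable, List, Sequence

# Assumption: a model maps a token prefix to its most likely next token.
NextToken = Callable[[Sequence[str]], str]


def speculative_step(prefix: List[str], drafter: NextToken,
                     target: NextToken, k: int = 4) -> List[str]:
    """Run one round and return the tokens to append to `prefix`."""
    # 1. The drafter proposes k tokens, one after another.
    draft: List[str] = []
    for _ in range(k):
        draft.append(drafter(prefix + draft))

    # 2. The target checks each draft position (in parallel in practice).
    accepted: List[str] = []
    for i in range(k):
        expected = target(prefix + draft[:i])
        if draft[i] != expected:
            # First mismatch: keep the target's token, drop the rest of the draft.
            accepted.append(expected)
            return accepted
        accepted.append(draft[i])

    # 3. Every draft token matched: the target contributes one extra token.
    accepted.append(target(prefix + draft))
    return accepted


def follow(sentence: str) -> NextToken:
    """Toy model (hypothetical) that recites `sentence` word by word."""
    words = sentence.split()

    def next_token(prefix: Sequence[str]) -> str:
        return words[len(prefix)] if len(prefix) < len(words) else "<eos>"

    return next_token


target = follow("the cat sat on the mat")
drafter = follow("the cat sat in the mat")

print(speculative_step([], drafter, target, k=4))
# ['the', 'cat', 'sat', 'on']
```

Three draft tokens are accepted and the fourth is replaced by the target's choice, so the round yields four tokens for a single target verification. Had the mismatch been at the first position, the same round would have yielded only one.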

The Innovation Behind the Hybrid Approach

Speculative Cascades distinguishes itself by merging the tiered processing of cascades with the parallel verification of speculative decoding, while adding a crucial layer of flexibility through customizable deferral rules. Speculative decoding accepts a drafted token only if it matches what the larger model would have produced. This hybrid method instead applies a deferral rule to each drafted token, deciding in real time whether to accept the smaller model’s token or defer to the larger model. This granular approach minimizes wasted effort: a good draft is not thrown away over minor discrepancies, and the sequential bottleneck of traditional cascades is avoided because the larger model checks the draft in parallel rather than starting over. The result is a system that can adapt its rigor based on the complexity of the task at hand.

The true ingenuity of this method lies in its ability to tailor decision-making to specific needs. Developers can define the criteria for accepting or rejecting a draft, whether based on confidence thresholds or a cost-benefit analysis of computational resources. For instance, in applications where speed is critical, the system might lean toward accepting drafts with minor stylistic differences, while in precision-driven tasks, stricter standards could be applied. This adaptability ensures that Speculative Cascades isn’t just a static solution but a versatile framework capable of evolving with the diverse demands of AI applications, from quick customer service responses to intricate data analysis.
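A pluggable deferral rule might look like the sketch below. Everything here is illustrative rather than the published method: the names `speculative_cascade_step`, `confidence_rule`, `exact_match_rule`, and `table_model` are hypothetical, the token probabilities are made up, decoding is greedy, and the bonus token a real system could emit after a fully accepted draft is omitted for brevity.

```python
from typing import Callable, Dict, List, Sequence

Dist = Dict[str, float]                      # token -> probability
DistModel = Callable[[Sequence[str]], Dist]  # prefix -> next-token distribution
DeferralRule = Callable[[Dist, Dist], bool]  # True means "defer to the large model"


def argmax(dist: Dist) -> str:
    return max(dist, key=dist.get)


def confidence_rule(threshold: float) -> DeferralRule:
    """Defer when the small model's top probability is below `threshold`."""
    return lambda small, large: max(small.values()) < threshold


def exact_match_rule(small: Dist, large: Dist) -> bool:
    """Defer on any disagreement; this mimics greedy speculative decoding."""
    return argmax(small) != argmax(large)


def speculative_cascade_step(prefix: Sequence[str], small: DistModel,
                             large: DistModel, defer: DeferralRule,
                             k: int = 4) -> List[str]:
    # Draft k tokens greedily with the small model, keeping its distributions.
    draft: List[str] = []
    small_dists: List[Dist] = []
    for _ in range(k):
        dist = small(list(prefix) + draft)
        small_dists.append(dist)
        draft.append(argmax(dist))

    # The large model scores every position (one parallel pass in practice).
    out: List[str] = []
    for i in range(k):
        large_dist = large(list(prefix) + draft[:i])
        if defer(small_dists[i], large_dist):
            out.append(argmax(large_dist))  # use the large model's token and stop
            return out
        out.append(draft[i])                # keep the small model's token
    return out


def table_model(rows: List[Dist]) -> DistModel:
    """Toy model (hypothetical): the distribution depends only on position."""
    def model(prefix: Sequence[str]) -> Dist:
        return rows[len(prefix)] if len(prefix) < len(rows) else {"<eos>": 1.0}
    return model


small = table_model([
    {"45": 0.90, "50": 0.10},
    {"divided": 0.60, "over": 0.40},
    {"by": 0.95, "with": 0.05},
    {"3": 0.40, "5": 0.35, "9": 0.25},
])
large = table_model([
    {"45": 0.80, "forty-five": 0.20},
    {"over": 0.70, "divided": 0.30},
    {"by": 0.90, "into": 0.10},
    {"5": 0.90, "3": 0.10},
])

print(speculative_cascade_step([], small, large, confidence_rule(0.5)))
# ['45', 'divided', 'by', '5']
print(speculative_cascade_step([], small, large, exact_match_rule))
# ['45', 'over']
```

With the confidence rule, the stylistic difference between "divided" and "over" is tolerated and only the low-confidence final token is handed to the large model. The exact-match rule stops at the second token, so the same verification pass yields half as many tokens.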

Empirical Evidence and Performance Gains

The effectiveness of Speculative Cascades isn’t merely theoretical; it is supported by experiments that show improvements over existing methods. Tests on models such as Gemma and T5 across summarization, translation, reasoning, and coding tasks indicate that the hybrid approach achieves better cost-quality trade-offs. Specifically, it generates more tokens per call to the larger model at a comparable level of output quality, which translates to faster responses without sacrificing accuracy. These results highlight the practical value of the method in real-world scenarios where efficiency matters.
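The "tokens per call to the larger model" metric is simple to compute once the number of tokens produced in each verification round is known. The sketch below assumes one large-model call per round; the function name and the per-round counts are invented purely to illustrate the calculation and are not measurements from the experiments.

```python
from typing import List


def tokens_per_large_call(tokens_per_round: List[int]) -> float:
    """Average tokens emitted per large-model call (one call per round)."""
    return sum(tokens_per_round) / len(tokens_per_round)


# Hypothetical per-round token counts, for illustration only.
strict_rounds = [2, 1, 3, 2]    # exact-match verification rejects early
lenient_rounds = [4, 3, 4, 5]   # a deferral rule keeps more draft tokens

print(tokens_per_large_call(strict_rounds))   # 2.0
print(tokens_per_large_call(lenient_rounds))  # 4.0
```

A higher value means the expensive model is invoked less often for the same amount of generated text, which is where the speed-up comes from.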

Further insights from specific benchmarks underscore the strategic advantage of Speculative Cascades. In a mathematical reasoning task, for example, the system demonstrated an ability to reach correct solutions more rapidly by selectively leveraging the smaller model’s contributions for certain tokens, rather than discarding otherwise useful draft tokens over minor mismatches, as speculative decoding might. This selective acceptance not only accelerates the process but also optimizes resource allocation, so that the larger model’s output is used only where it is needed. Such findings position Speculative Cascades as a promising tool for developers aiming to deploy LLMs in high-stakes, time-sensitive environments.

Adaptability as a Game-Changer

One of the standout features of Speculative Cascades is its inherent flexibility, which allows it to cater to a wide array of application needs. The ability to customize deferral rules means that developers can fine-tune the balance between speed and precision based on the specific context of use. For a chatbot requiring rapid responses, the system might be configured to accept drafts with a lower confidence threshold, prioritizing turnaround time over minute accuracy. Conversely, for a legal or medical application where precision is paramount, stricter criteria can ensure that only the most reliable outputs are accepted.
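The effect of the threshold can be seen with a small calculation. The sketch below assumes a confidence-based rule that defers any token whose small-model confidence falls below the threshold; the `deferral_rate` function, the profile names, the thresholds, and the confidence values are all hypothetical.

```python
from typing import Dict, List


def deferral_rate(confidences: List[float], threshold: float) -> float:
    """Fraction of tokens handed to the large model at this threshold."""
    deferred = sum(1 for c in confidences if c < threshold)
    return deferred / len(confidences)


# Made-up small-model confidences for eight drafted tokens.
confidences = [0.95, 0.60, 0.90, 0.40, 0.85, 0.70, 0.99, 0.55]

# Hypothetical application profiles with different thresholds.
profiles: Dict[str, float] = {"chatbot": 0.5, "legal_review": 0.9}

for name, threshold in profiles.items():
    print(name, deferral_rate(confidences, threshold))
# chatbot 0.125
# legal_review 0.625
```

The lenient profile sends one token in eight to the large model, while the strict profile sends five in eight, trading speed for tighter control over the output.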

This adaptability extends beyond mere technical adjustments; it reflects a broader shift toward AI systems that can be molded to fit unique operational goals. As industries increasingly integrate LLMs into specialized workflows, the demand for such customizable solutions grows. Speculative Cascades meets this need by offering a framework that doesn’t dictate a rigid approach but instead empowers users to define their own parameters for success. This level of control could significantly broaden the accessibility of advanced AI tools, enabling smaller organizations or niche sectors to leverage powerful language models without the prohibitive costs typically associated with them.

Reflecting Broader Trends in AI Research

Zooming out from the specifics of Speculative Cascades, a larger trend in AI research becomes evident: the move toward hybrid methodologies that integrate the best elements of multiple techniques. No single optimization strategy simultaneously addresses the competing priorities of speed, cost, and quality, so developers are often forced into difficult trade-offs. By combining the tiered efficiency of cascades with the latency reduction of speculative decoding, Speculative Cascades exemplifies how blending approaches can yield more comprehensive solutions that tackle multifaceted challenges.

This trend aligns with the industry’s broader push for scalable AI systems that can be deployed in diverse, real-world applications without breaking the bank. The emphasis on customizable frameworks also signals a growing recognition of the varied needs across different sectors. As AI continues to permeate fields ranging from education to finance, solutions like Speculative Cascades pave the way for tools that can be tailored to specific demands, rather than adhering to a one-size-fits-all model. This shift toward adaptability and integration is likely to shape the trajectory of AI development in the coming years, fostering innovations that are both powerful and practical.

Balancing Strengths and Limitations

While Speculative Cascades offers a promising path forward, a balanced perspective on its capabilities and constraints is essential. Traditional cascades excel at conserving resources by deferring only complex queries to larger models, but their sequential processing can introduce significant delays when handoffs occur. Speculative decoding, meanwhile, ensures output fidelity by mirroring the larger model’s results, yet its strict verification process and higher memory demands can offset the intended speed benefits. Speculative Cascades mitigates many of these issues through its dynamic decision-making, but its effectiveness is contingent on the careful design of deferral rules tailored to each task.

This nuanced evaluation extends to the broader landscape of LLM optimization, where every approach carries inherent trade-offs. The hybrid nature of Speculative Cascades provides a more flexible middle ground, yet it doesn’t eliminate the need for strategic planning in implementation. Developers must weigh the specific requirements of their applications—whether prioritizing cost savings, response speed, or output precision—against the strengths and weaknesses of available methods. This complexity underscores that optimizing AI inference remains an intricate puzzle, demanding ongoing innovation and careful consideration of diverse operational contexts.

Looking Ahead: Shaping the Future of AI Efficiency

Speculative Cascades marks a significant step forward in addressing the persistent challenges of LLM inference. Its ability to blend cost-saving tiered processing with latency-reducing parallel verification could reshape how developers approach AI optimization. By offering a framework that balances speed, cost, and quality through adaptable rules, it opens doors to more efficient deployment of language models across various domains. As a result, applications that once struggled with resource constraints may find new opportunities for integration.

Moving forward, the focus should shift to refining these deferral mechanisms and exploring how they can be automated or learned, reducing the need for manual tuning. Additionally, expanding empirical studies to cover a broader range of models and tasks could solidify the method’s applicability in niche areas. Collaboration between researchers and industry practitioners will be key to scaling these innovations, ensuring that the benefits of faster, smarter AI inference reach a global audience. The journey toward truly efficient LLMs continues, with hybrid approaches like this one lighting the path toward sustainable and impactful AI solutions.