To understand how far artificial intelligence (AI) can go in mimicking the human brain, one must first appreciate the intricacy of human cognition. Research in artificial general intelligence (AGI) seeks to replicate this natural complexity in AI systems, with the human brain serving as the ultimate archetype. The pursuit is promising but riddled with challenges as researchers try to bridge the gap between biological and machine intelligence. Current AI technologies, despite their impact across industries, fall short of capturing the full spectrum of human thought and adaptability. In response, scientists are turning to the structural and functional characteristics of biological neural networks for ideas that could lead to significant milestones in AGI development.

The Anatomy of Neural Networks

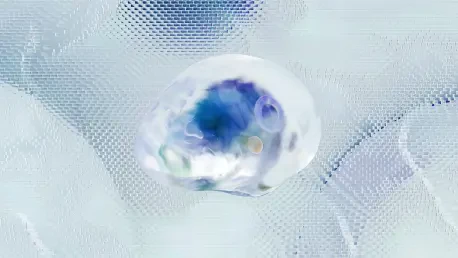

At the forefront of this research are artificial neural networks, computational systems loosely modeled on the brain’s neurons and synapses. These networks currently operate within a two-dimensional framework of width and depth. Width refers to the number of processing units in a layer, while depth refers to the number of layers in the network. Efforts to extend AI’s capabilities build on the work of John J. Hopfield and Geoffrey E. Hinton, who shared the 2024 Nobel Prize in Physics for foundational contributions to machine learning with artificial neural networks. Their work helped AI emulate more sophisticated and nuanced functions of the brain.
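To make width and depth concrete, here is a minimal sketch of a plain fully connected network in standard-library Python. The function names, the random weights, and the ReLU activation are illustrative assumptions, not details taken from the research described here.

```python
import random


def make_network(input_size, width, depth, output_size, seed=0):
    """Build random weight matrices for a plain fully connected network.

    width = number of processing units in each hidden layer
    depth = number of hidden layers
    (Both names follow the article; everything else is a hypothetical sketch.)
    """
    rng = random.Random(seed)
    sizes = [input_size] + [width] * depth + [output_size]
    # One weight matrix per pair of adjacent layers:
    # one row per unit in the next layer, one column per unit in the previous.
    return [
        [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        for n_in, n_out in zip(sizes, sizes[1:])
    ]


def forward(network, inputs):
    """Pass a signal through the layers in order, with no loops or side links."""
    activations = inputs
    for index, layer in enumerate(network):
        sums = [sum(w * a for w, a in zip(row, activations)) for row in layer]
        is_last = index == len(network) - 1
        # ReLU on hidden layers (an assumed choice); the output layer stays linear.
        activations = sums if is_last else [max(0.0, s) for s in sums]
    return activations


net = make_network(input_size=3, width=4, depth=2, output_size=1)
hidden_widths = [len(layer) for layer in net[:-1]]  # units in each hidden layer
output = forward(net, [0.5, -0.2, 0.1])
```

In this sketch, information only flows forward from one layer to the next: units in the same layer never talk to each other, and nothing is fed back.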

Despite these strides, the quest for AGI is largely a matter of overcoming the constraints of existing AI models. A turning point came in 2017, when Google researchers introduced the “transformer” architecture. Celebrated for drastically reducing training times for neural networks, it represented a pivotal step toward AGI. However, transformer models still have limitations that prevent them from achieving human-like adaptation and understanding. One challenge concerns the AI “scaling law,” the hypothesis that adding data and computational resources yields continuous improvement. Recent results have called this principle into question, suggesting that the gains from scaling are beginning to plateau.
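Scaling laws are commonly written as power laws, and a toy version shows why returns can flatten even while a model keeps improving. In the sketch below, the functional form with an irreducible floor and every constant are made-up assumptions chosen for illustration, not measured values.

```python
def predicted_loss(compute, floor=1.0, scale=10.0, exponent=0.5):
    """Hypothetical power-law curve: error falls with compute toward a floor."""
    return floor + scale * compute ** (-exponent)


# Grow the compute budget by a factor of 100 at each step.
budgets = [10 ** k for k in range(0, 7, 2)]
losses = [predicted_loss(c) for c in budgets]

# Absolute improvement bought by each 100x increase in compute.
gains = [before - after for before, after in zip(losses, losses[1:])]
```

With these assumed constants, every hundredfold increase in compute buys a smaller absolute improvement than the one before it, and the loss never drops below the floor.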

Pushing Boundaries with New Dimensions

In light of these limitations, moving beyond traditional transformer models may require approaches that add new dimensions to AI architectures. Ge Wang of Rensselaer Polytechnic Institute and Feng-Lei Fan of the City University of Hong Kong propose adding “height” to AI models. This additional dimension is meant to increase the complexity and capability of neural networks by integrating intra-layer links and feedback loops, mimicking the local neural circuits found in the human brain. Intra-layer links diversify the interactions between nodes within the same layer, much like the lateral connections observed in the brain’s cortical columns.

Feedback loops, analogous to recurrent signaling in neural systems, could refine how AI models process information. Because outputs are fed back to influence later inputs, such loops give a network a form of memory and may support perception and cognition closer to their human counterparts. Together, these brain-inspired features point to AI systems capable of more intuitive and adaptive reasoning. They could also support more sophisticated decision-making, allowing a model to settle into stable patterns and refine its behavior over time, much as human cognition does.
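The two ingredients of “height” can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions, not the researchers’ actual model: the weights, the tanh activation, and the names `step` and `settle` are all hypothetical. Each unit receives the external input plus signals from the other units in its own layer (intra-layer links), and the layer’s output is fed back in repeatedly (a feedback loop) until it stops changing.

```python
import math

# Hypothetical weights for one layer of three units with two inputs.
W_IN = [[0.5, -0.3], [0.2, 0.8], [-0.6, 0.1]]
# Intra-layer (lateral) links: row i holds the weights unit i applies to
# the other units in the same layer. Values are kept small on purpose so
# that the feedback loop settles instead of oscillating.
W_LATERAL = [[0.0, 0.2, -0.1], [0.1, 0.0, 0.2], [-0.2, 0.1, 0.0]]
NO_LATERAL = [[0.0] * 3 for _ in range(3)]


def step(x, h, w_in, w_lateral):
    """One update of a layer whose units also listen to each other."""
    new_h = []
    for i in range(len(h)):
        feedforward = sum(w * xi for w, xi in zip(w_in[i], x))
        lateral = sum(w * hj for w, hj in zip(w_lateral[i], h))
        new_h.append(math.tanh(feedforward + lateral))
    return new_h


def settle(x, w_in, w_lateral, max_steps=100, tol=1e-9):
    """Feed the layer's output back into itself until it stops changing."""
    h = [0.0] * len(w_in)
    for t in range(1, max_steps + 1):
        new_h = step(x, h, w_in, w_lateral)
        change = max(abs(a - b) for a, b in zip(new_h, h))
        h = new_h
        if change < tol:
            return h, t
    return h, max_steps


x = [1.0, 0.5]
state, steps = settle(x, W_IN, W_LATERAL)
plain, _ = settle(x, W_IN, NO_LATERAL)  # same layer without lateral links
```

Here `state` is the stable pattern the layer settles into, and comparing it with `plain` shows how the lateral links change the result. Larger lateral weights are not guaranteed to settle at all, which is one reason feedback makes networks both richer and harder to train.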

Beyond Artificial Models

As advancements in AI models continue to draw inspiration from biological parallels, achieving AGI remains a formidable endeavor. While brain-inspired features such as intra-layer links and feedback loops hold promise, researchers acknowledge the potential need for integrating quantum systems and other advanced technologies. The convergence of neuromorphic architectures with diverse technological strategies could provide a comprehensive approach to addressing the complexities inherent in AI. By diversifying methodologies and harnessing interdisciplinary insights, the scientific community may create AI frameworks that better reflect the multifaceted nature of human intelligence.

The trajectory of AI development points to a shift toward structured complexity, mirroring the natural intelligence found in human biology. This shift invites a reevaluation of the dimensions an AI system needs in order to replicate human intelligence authentically. Networks with richer internal dynamics may show emergent behaviors that exceed the sum of their individual components, which hints at a capacity for more human-like understanding and intuition. As this paradigm progresses, its implications reach beyond technology, opening avenues for exploring human cognition and neurology.

The Path Forward in AI and Neuroscience

In summary, artificial neural networks remain at the cutting edge of AI research. Modeled after neurons and synapses, they work within a framework defined by width, the number of processing units per layer, and depth, the number of layers in the architecture. Significant efforts are being made to enhance AI’s capabilities, building on the work of Nobel laureates John J. Hopfield and Geoffrey E. Hinton, which has allowed AI to mimic more sophisticated brain functions.

Despite these advancements, AGI remains out of reach until current limitations in AI models are overcome. A pivotal moment came in 2017, when Google researchers introduced the “transformer” architecture. This innovation notably reduced the training time for neural networks, marking a key step toward AGI. However, transformer models are not yet able to achieve true human-like understanding. Furthermore, the AI “scaling law,” which posits continuous improvement with increased data and computation, has been called into question by signs that growth is leveling off.