The central question facing organizations is no longer whether to adopt artificial intelligence, but where to deploy AI workloads to achieve the greatest return on investment. As AI moves from an experimental technology to a core business function, understanding the trade-offs between centralized cloud power and decentralized edge processing has become a critical financial exercise. The decision point, or strategic “tipping point,” is determined by a framework that weighs the costs and benefits of each environment for a given workload. Mastering this calculation is the key to turning AI infrastructure from a significant expense into an asset that delivers measurable outcomes across the enterprise. It requires moving beyond a one-size-fits-all approach and tailoring deployment to the specific demands of each application, so that every dollar invested in AI is allocated for performance and impact.

The Hidden Burdens of a Cloud-Only Strategy

Relying exclusively on the cloud for all AI tasks, especially the real-time decision-making stage known as inference, can introduce substantial and often overlooked costs that erode an investment’s value. A primary issue is the “volume trap”: applications that generate massive amounts of data at the edge, such as raw 4K video from surveillance systems or high-frequency telemetry from Industrial IoT sensors, incur large recurring charges for network transfer, cloud storage, and processing, along with egress fees whenever data or results are moved back out of a hyperscale provider’s network. The cost of continuously sending this data to a distant cloud server can quickly become prohibitive. Beyond these explicit charges, high-volume data transfer consumes network bandwidth, and the resulting congestion adds a hidden operational cost in the form of delays and greater management complexity. Because these costs grow in step with data generation, a cloud-only strategy becomes more expensive precisely as operations scale.
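The volume trap can be made concrete with simple arithmetic. The sketch below is illustrative only: the camera count, the 15 Mbps per-stream bitrate, and the price of $0.05 per GB are hypothetical inputs chosen for the example, not figures from any provider's price list.

```python
def monthly_data_volume_gb(bitrate_mbps: float, streams: int, days: int = 30) -> float:
    """Data produced by continuous streams over a month, in decimal gigabytes."""
    seconds = days * 24 * 60 * 60
    megabits = bitrate_mbps * seconds * streams
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def monthly_transfer_cost(volume_gb: float, price_per_gb: float) -> float:
    """Recurring cost of moving that volume at a flat per-GB rate."""
    return volume_gb * price_per_gb


# Hypothetical site: 50 cameras, each streaming continuously at 15 Mbps.
volume = monthly_data_volume_gb(bitrate_mbps=15.0, streams=50)
cost = monthly_transfer_cost(volume, price_per_gb=0.05)  # assumed blended rate

print(f"Monthly volume: {volume:,.0f} GB")   # 243,000 GB
print(f"Monthly cost:   ${cost:,.0f}")       # about $12,150
```

Because both functions are linear in the number of streams, doubling the camera count doubles the bill, which is the scaling behavior the paragraph above warns about.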

The limitations of a cloud-centric AI strategy extend beyond financial costs into quantifiable business risk, primarily through the “latency penalty.” The unavoidable delay introduced by the physical distance between a data source and a centralized cloud data center is not just a technical inconvenience; for mission-critical applications, it represents a direct cost of non-performance that can have catastrophic consequences. An autonomous vehicle, for example, cannot afford a 500-millisecond delay in processing sensor data to detect an obstacle, as this time lag translates directly into a safety and liability cost. Similarly, a real-time fraud detection system loses much of its value if the processing delay holds up the transaction at the point of sale and degrades the customer experience. This latency is an inherent characteristic of centralized processing and creates a performance ceiling that many critical AI applications cannot operate under, making the cloud a poor fit for tasks that require immediate action.
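The latency penalty can also be expressed in physical terms. The following sketch converts a processing delay into distance travelled by a vehicle, and estimates the lower bound on round-trip time imposed by distance alone. The 100 km/h speed and the 1,500 km distance to a data center are hypothetical; the figure of roughly 200,000 km/s for signal speed in optical fiber is a common approximation, and real round trips are longer once routing, queuing, and processing are added.

```python
def distance_travelled_m(speed_kmh: float, delay_ms: float) -> float:
    """Metres covered by a vehicle while it waits for a decision."""
    metres_per_second = speed_kmh / 3.6
    return metres_per_second * (delay_ms / 1000)


def min_round_trip_ms(distance_km: float, fiber_speed_km_per_s: float = 200_000) -> float:
    """Propagation-only round trip; ignores routing, queuing, and compute time."""
    return 2 * distance_km / fiber_speed_km_per_s * 1000


# The 500 ms delay from the example above, at an assumed 100 km/h.
print(f"{distance_travelled_m(100, 500):.1f} m travelled")   # 13.9 m

# Assumed 1,500 km between the data source and the cloud region.
print(f"{min_round_trip_ms(1500):.1f} ms minimum round trip")  # 15.0 ms
```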

Unlocking Value at the Edge

Shifting AI inference workloads closer to where data is generated unlocks strategic advantages that a centralized cloud struggles to match, particularly in data governance and security. By processing sensitive information locally, either on the device itself or on a nearby server, edge computing allows private or proprietary data to stay inside a secure perimeter. This architectural choice supports adherence to strict data protection and data sovereignty rules such as the GDPR and can simplify compliance management. It reduces the risk of breaches that can occur while data is in transit over public networks and, just as importantly, strengthens customer and stakeholder trust by demonstrating a commitment to keeping personal information secure. For industries like healthcare, finance, and retail, where data privacy is paramount, this capability is not just a benefit but a foundational requirement for deploying AI responsibly and effectively.

Beyond governance, edge AI systems deliver a degree of operational resilience that is critical for applications where continuous functionality is non-negotiable. Because they are designed for offline functionality, edge deployments can continue to run inference, analyze data, and make crucial decisions even during network outages or when connectivity to the cloud is intermittent. This removes the network as a single point of failure and keeps value delivery going in challenging environments. In a manufacturing plant, for instance, a predictive maintenance model monitoring critical machinery must respond immediately to prevent catastrophic equipment failure, regardless of the factory’s internet connection status. This operational continuity is reinforced by the very low latency of local processing, making the edge the natural platform for systems that demand high reliability and immediate response to maintain safety, efficiency, and profitability.
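One way to picture this offline behavior is a node that always decides locally and only uses the network to report results afterwards. The sketch below is a minimal illustration, not a reference design: `EdgeNode`, `predict`, and `upload` are hypothetical names, and the threshold rule stands in for a real trained model.

```python
from collections import deque
from typing import Any, Callable, Deque, Tuple


class EdgeNode:
    """Runs inference locally and buffers results until the cloud is reachable."""

    def __init__(self, predict: Callable[[Any], Any],
                 upload: Callable[[Tuple[Any, Any]], None],
                 max_buffered: int = 1000) -> None:
        self.predict = predict
        self.upload = upload
        # Bounded buffer: the oldest records are dropped if an outage lasts too long.
        self.pending: Deque[Tuple[Any, Any]] = deque(maxlen=max_buffered)

    def handle(self, reading: Any, online: bool) -> Any:
        decision = self.predict(reading)  # local call, never waits on the network
        self.pending.append((reading, decision))
        if online:
            self.flush()
        return decision

    def flush(self) -> None:
        """Send buffered records in order; a record is removed only after upload."""
        while self.pending:
            self.upload(self.pending[0])
            self.pending.popleft()


# Hypothetical vibration monitor: flag any reading above 80.0.
uploaded = []
node = EdgeNode(predict=lambda r: r > 80.0, upload=uploaded.append)
node.handle(95.0, online=False)   # decision is still made during the outage
node.handle(20.0, online=True)    # connectivity returns, buffer is flushed
print(len(uploaded))              # 2
```

The decision path and the reporting path are deliberately separate, so a slow or failed upload never delays the local response.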

Embracing the Hybrid AI Lifecycle

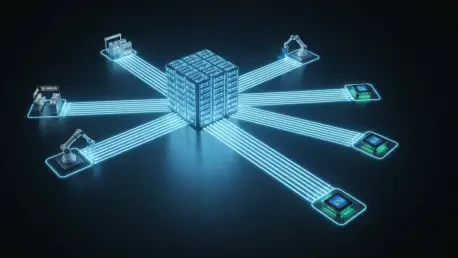

The persistent “edge versus cloud” debate has ultimately revealed itself to be a false dichotomy, as the most effective and cost-efficient strategy is not to choose one over the other but to embrace a dynamic, two-stage hybrid AI lifecycle. This sophisticated approach leverages the distinct strengths of each environment by assigning different phases of the AI development and deployment process to the platform best suited for the task. The first stage, model training, remains the undisputed domain of the cloud. The computationally intensive process of developing large, complex, and highly accurate deep learning models requires access to the massive, elastic GPU clusters and vast data processing capabilities that only hyperscale cloud data centers can offer. The cloud serves as the indispensable core where petabytes of training data are ingested and analyzed to forge the foundational intelligence of powerful AI models, making it the essential starting point for any serious AI initiative.

Once a model is fully trained in the cloud, the hybrid lifecycle enters its second stage: deployment and inference at the edge. In this phase, the completed model is optimized, compressed, and distributed to the edge devices where real-world applications run. This deployment strategy is designed to bring decision-making as close as possible to the point of action, so that responses arrive in well under a second and operational integrity is maintained. By processing data locally, this approach avoids most of the data transfer costs and network congestion associated with a cloud-only model. Furthermore, it keeps sensitive data secure and allows operations to continue uninterrupted, even without a stable connection to the cloud. It is at the edge that the AI model delivers its tangible value, turning its learned intelligence into immediate outcomes that drive business performance and create a competitive advantage.
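The article does not say how models are compressed for the edge. One widely used technique is quantization, which stores weights as small integers instead of 32-bit floats. The sketch below shows symmetric 8-bit quantization in plain Python as an assumed example of what "compressed" could mean; the weight values are made up, and production toolchains apply this per layer with calibration data.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights for inference on the device."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]          # illustrative values only
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

print(q)  # [50, -127, 0, 100]
# Each restored weight differs from the original by at most half a step.
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2 + 1e-12)
```

Each weight then occupies one byte instead of four, at the price of a small, bounded rounding error.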

A Framework for Strategic Deployment

The decision of where to run an AI inference workload ultimately comes down to identifying its primary strategic priority, which reveals the tipping point where the benefits of one environment decisively outweigh the other. This choice is governed by a framework that prioritizes one of three key factors: speed, scale, or compliance. Workloads requiring a near-instantaneous response for safety or operational integrity, such as autonomous vehicle obstacle avoidance or real-time credit card fraud detection, belong at the edge because of its very low latency. In contrast, workloads involving massive, non-time-critical batch processing or the aggregation of data from numerous sources for deep analysis, like retraining fleet-wide models or analyzing national sales trends, benefit from the cloud’s centralized power and immense scale. Finally, applications dealing with sensitive, regulated data or generating massive raw data streams, such as in-store video surveillance or patient data analysis, rely on the edge to provide the necessary privacy, security, and cost control. This framework enables organizations to move from fragmented AI spending to a cohesive, value-driven infrastructure where every dollar is allocated to achieve measurable business outcomes.
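The three-factor framework can be written down as a small decision rule. This is a sketch under stated assumptions: the `Workload` fields and the thresholds of 100 ms and 500 GB per day are hypothetical placeholders that each organization would set for itself, and the article does not prescribe specific cut-offs.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    max_latency_ms: float        # slowest acceptable response time
    regulated_data: bool         # handles sensitive or regulated data
    raw_data_gb_per_day: float   # raw data generated at the source


def choose_environment(w: Workload,
                       latency_threshold_ms: float = 100.0,
                       volume_threshold_gb: float = 500.0) -> str:
    """Return 'edge' or 'cloud' based on speed, then compliance and volume."""
    if w.max_latency_ms <= latency_threshold_ms:
        return "edge"    # speed: response time is the priority
    if w.regulated_data or w.raw_data_gb_per_day >= volume_threshold_gb:
        return "edge"    # compliance and cost control
    return "cloud"       # scale: batch work and aggregation


examples = [
    Workload("obstacle avoidance", 20, False, 50),
    Workload("national sales trends", 3_600_000, False, 5),
    Workload("in-store video surveillance", 2_000, True, 1_200),
]
for w in examples:
    print(f"{w.name}: {choose_environment(w)}")
```

Checking latency first reflects the ordering in the text, where safety-critical speed requirements override other considerations.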