A strategic hiring decision involving a single open-source developer has sent a clear message throughout the technology sector, signaling a fundamental pivot from developing smarter conversationalists to engineering autonomous artificial intelligence that can execute complex, real-world tasks. OpenAI’s recruitment of Peter Steinberger, the creator of the popular AI assistant OpenClaw, marks a pivotal moment in the industry’s evolution. This move underscores a multibillion-dollar shift in focus, where the ultimate prize is no longer just a model that can think or write, but one that can act. Steinberger is now tasked with leading OpenAI’s ambitious initiative to build what its leadership calls “the next generation of personal agents,” a clear declaration of the new competitive frontier.

This development is more than a simple talent acquisition; it is a validation of the growing market demand for AI that moves beyond generating text and images to performing tangible actions. OpenClaw, despite being a nascent project with known security issues, captured the developer community’s attention, accumulating over 145,000 stars on GitHub and proving the immense appetite for AI agents. As noted by Sanchit Vir Gogia, chief analyst at Greyhound Research, this project exists at the critical intersection “where conversational AI becomes actionable AI.” It represents the technological leap from AI that can help you draft a plan to an AI that can carry it out on your behalf, fundamentally changing how humans interact with digital environments.

From Drafting to Doing The Rise of AI That Can Act on Your Behalf

The core innovation driving this new wave of technology lies in its ability to interact directly with a user’s desktop environment, much like a human would. Unlike traditional Robotic Process Automation (RPA) tools that rely on brittle, pre-programmed scripts, actionable AI agents can see and understand a screen. They can perform tasks such as clicking on interface elements, navigating between applications, and completing web forms with a degree of adaptability that was previously unattainable. This contextual understanding allows them to continue functioning even when user interfaces change, a common failure point for older automation systems.

For an entrepreneur like Peter Steinberger, who has a track record of building and selling successful companies, the decision to join a tech giant was a strategic one. He acknowledged that achieving the grand vision of “truly useful personal agents” that can handle genuine work requires infrastructure and resources available to only a handful of global corporations. His role at OpenAI provides the necessary scale and backing to pursue this goal. In a move that respects the project’s origins, OpenClaw will not be absorbed and dissolved. Instead, it will continue as an independent open-source project, governed by a new foundation financially supported by OpenAI, ensuring its development continues for the community that championed it.

Deconstructing the New Arms Race The Players the Technology and the Prize

The hiring of Steinberger intensifies an already fierce competition among major AI labs to dominate the agentic AI landscape. The focus of this new arms race has shifted from the underlying foundational models to the sophisticated “runtime orchestration” layer that commands them. This critical layer manages the complex coordination of multiple models, the integration of external tools, and the maintenance of persistent memory and context. It is here, in the ability to effectively manage and deploy agents, that the next market leaders will be determined.

This emerging arena is already crowded with formidable players. Microsoft is making significant investments in multi-agent systems through its AutoGen framework and the broader Copilot ecosystem, aiming to embed autonomous capabilities across its entire software suite. Meanwhile, Anthropic is pushing the boundaries of computer interaction with its Claude model, and Google’s Project Astra signals a future of ambient, multimodal assistance seamlessly integrated into daily life. Each of these tech giants is building its own ecosystem for deploying AI agents, turning the orchestration layer into the primary battleground for innovation and market share.

A Reality Check The Sobering Statistics and Expert Warnings on AI Agents

Despite the industry’s enthusiasm and strategic investments, the reality of enterprise adoption paints a more cautious picture. Current research from Gartner reveals that only 8% of organizations have deployed AI agents in production environments. This significant gap between hype and implementation is largely due to challenges in reliability and security. The complexity of making agents consistently successful is a major hurdle that developers are now grappling with.

One of the most significant obstacles is a mathematical one: the exponential failure rate of multi-step processes. Even an agent that performs individual actions with 95% reliability will see its overall success rate for a 13-step task fall below 50%. The probability of failure compounds with each additional step, making complex workflows exceedingly difficult to execute flawlessly. Anushree Verma, a senior director analyst at Gartner, posits that overcoming this will necessitate the creation of an “agentic brain”—a highly sophisticated system capable of creating, managing, and troubleshooting complex workflows autonomously.

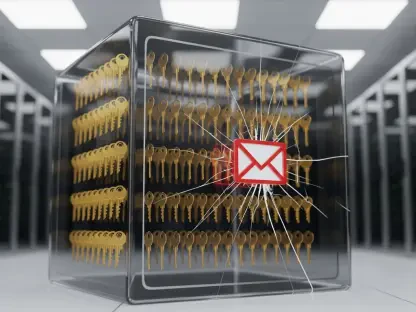

Furthermore, the very capability that makes these agents so powerful—their ability to take direct action on a user’s system—also makes them a profound security risk. An autonomous agent with privileged access becomes a prime target for threats like prompt injection, where malicious instructions can trick the AI into performing unauthorized actions. The potential for damage is magnified significantly compared to a simple chatbot, creating a new and challenging security minefield for organizations to navigate.

The Enterprise Playbook Navigating the Promises and Perils of Agentic AI

For enterprises looking to harness the power of agentic AI, success will depend on building a robust governance framework that treats these autonomous systems with the same rigor as human employees. This begins with establishing role-based access controls to limit what an agent can do and see, ensuring it only has access to the information and systems necessary for its designated tasks. Comprehensive audit logging is also essential, creating a transparent record of every action the agent takes for accountability and troubleshooting.

Moreover, a critical component of this framework must be the implementation of human-in-the-loop checkpoints, especially for high-stakes actions. Any task involving financial transactions, sensitive data modification, or interaction with regulated systems should require mandatory human approval before execution. While OpenAI’s decision to maintain OpenClaw as an open-source project provides a degree of transparency, it does not absolve enterprises of the need to build their own guardrails.

The path forward for agentic AI left several critical questions unanswered. The industry awaited clear timelines for integrating these advanced capabilities into commercial products and sought concrete strategies for fortifying the security vulnerabilities inherent in such powerful technology. As development accelerated, the focus shifted from demonstrating potential to delivering reliable, secure, and accountable solutions that enterprises could trust. The journey to truly actionable AI had only just begun, but its direction was irrevocably set toward a future where software could not only assist, but act.