Industrial safety buyers face a paradox that has quietly stalled innovation: systems are excellent at telling site leaders where people are, yet they struggle to say what those people are doing and whether they are protected. That gap between presence and understanding keeps incidents too close for comfort. It matters most in environments where seconds determine outcomes and budgets determine adoption. As spending shifts from pilots to production, decision-makers are demanding solutions that detect risk in real time without exploding cloud bills or breaching privacy norms.

The market is converging on a clear thesis. Vision AI now identifies PPE compliance and unsafe motion reliably, while IoT platforms hold identity, permissions, equipment state, and environmental data. The bottleneck is no longer model accuracy; it is the cost and latency of running vision in the cloud and ferrying video across networks. Moving inference to cameras and local gateways, then fusing outputs with site context, is transforming economics and responsiveness, turning credible proofs of concept into durable programs.

This analysis examines the market’s pivot from cloud-first to edge-first safety intelligence, quantifies the cost curve, and maps the demand signals reshaping vendor roadmaps. It also outlines practical deployment patterns, regulatory expectations, and investment priorities that separate scalable winners from pilots that never progress.

How the market evolved and why cloud created a bottleneck

For roughly two decades, RFID badges, geofences, and proximity sensors have anchored occupational safety strategies. These tools deliver reliable identity and coordinates, which automate access control and trigger basic alerts when someone crosses a line. However, they rarely answer the pressing questions: Is a worker wearing high-vis apparel? Should that person be near an active lift? Are vehicles actually moving in that corridor at this moment?

Computer vision stepped in to fill the interpretation gap, bringing PPE detection and behavior understanding to jobsites, plants, and yards. Early pilots validated that models can perform in real-world conditions with varied lighting and clutter. Yet when pilots scaled, cloud-first designs drove up per-camera inference costs, storage fees, and egress charges for incident reviews. The result was predictable: solid technical performance paired with budgets that ballooned as cameras multiplied.

Latency compounded the problem. Pushing video to distant data centers introduced two to five seconds of delay under good network conditions. In high-risk zones, those seconds are the line between a prevented near miss and a recorded incident. Buyers learned an expensive lesson: cloud is a great control plane for model management and analytics, but it is a poor place for the hot path of inference and alerting.

Economics, latency, and convergence are redefining demand

The cost-latency trap and why deployments stalled

The per-camera-hour pricing used by many cloud inference services breaks down in multi-camera, multi-site estates. A typical jobsite with about 30 cameras at modest resolution produces terabytes of video per month, pushing ongoing inference and storage into thousands of dollars monthly per location. Add egress for investigations and long-term retention, and a mid-sized portfolio can face six-figure annual outlays that are hard to justify against incident reduction targets.
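To make the arithmetic concrete, here is a minimal Python sketch of the monthly video volume and inference bill for one site. The 2 Mbps average bitrate and the $0.10 per camera-hour rate are hypothetical placeholders, not quoted prices; substitute figures from your own encoder settings and vendor contracts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteProfile:
    cameras: int
    bitrate_bps: int  # assumed average encoded bitrate per camera (hypothetical)
    days: int = 30

    def monthly_video_bytes(self) -> int:
        """Bytes streamed per month if every camera sends video continuously."""
        seconds = self.days * 24 * 3600
        return self.cameras * self.bitrate_bps // 8 * seconds

    def camera_hours(self) -> int:
        """Billable camera-hours under continuous per-camera-hour pricing."""
        return self.cameras * self.days * 24


def cloud_inference_cost(site: SiteProfile, rate_per_camera_hour: float) -> float:
    # Hypothetical flat rate; real price lists vary by vendor, model, and tier,
    # and storage and egress charges would come on top of this figure.
    return site.camera_hours() * rate_per_camera_hour


jobsite = SiteProfile(cameras=30, bitrate_bps=2_000_000)
print(f"{jobsite.monthly_video_bytes() / 1e12:.2f} TB/month")  # 19.44 TB/month
print(f"${cloud_inference_cost(jobsite, 0.10):,.0f}/month")  # $2,160/month
```

Under these assumed inputs, 30 cameras produce about 19 TB and 21,600 camera-hours per month, consistent with the "terabytes" and "thousands of dollars" described above before storage and egress are added.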

Latency is not merely an inconvenience; it erodes the value proposition. Even when tuned for efficiency, cloud round trips commonly add seconds to the detection-to-alert path. Forklift zones, crane lifts, and confined-space entries cannot absorb that delay. Stakeholders have responded with stricter performance benchmarks that prioritize sub-second local decisioning and minimal data movement.

Edge inference changes the math. Running models on intelligent sensors and low-cost gateways collapses bandwidth from continuous streams to compact event messages. Cloud GPU bills disappear for the hot path, and on-site storage needs shrink because systems store metadata-first and capture short clips only when policy thresholds are crossed. The resulting total cost of ownership aligns with operational budgets rather than innovation funds.
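The metadata-first pattern can be sketched as a short rolling buffer: frames live briefly in memory, every threshold crossing becomes a compact event record, and a short clip (a few frames of pre-roll plus post-roll) is persisted only when the policy threshold is crossed. This is a minimal Python sketch; the `ClipRecorder` class, the integer frame IDs standing in for image data, and the 0.8 score threshold are all hypothetical.

```python
from collections import deque


class ClipRecorder:
    """Keep frames in a short rolling buffer; persist a clip only on a trigger."""

    def __init__(self, pre_roll: int, post_roll: int, threshold: float):
        self.post_roll = post_roll
        self.threshold = threshold  # hypothetical policy threshold on model score
        self._buffer = deque(maxlen=pre_roll)  # oldest frames drop off automatically
        self._current: list = []
        self._remaining = 0
        self.events: list = []  # compact metadata records, one per crossing
        self.clips: list = []  # persisted clips (frame IDs here, images in practice)

    def on_frame(self, frame_id: int, score: float) -> None:
        triggered = score >= self.threshold
        if triggered:
            self.events.append({"frame": frame_id, "score": score})
        if self._remaining > 0:
            # Still inside the post-roll window of an earlier trigger.
            self._current.append(frame_id)
            self._remaining -= 1
            if self._remaining == 0:
                self._close_clip()
        elif triggered:
            # Start a clip from the buffered pre-roll plus the trigger frame.
            self._current = list(self._buffer) + [frame_id]
            self._remaining = self.post_roll
            if self._remaining == 0:
                self._close_clip()
        self._buffer.append(frame_id)

    def _close_clip(self) -> None:
        self.clips.append(self._current)
        self._current = []


recorder = ClipRecorder(pre_roll=2, post_roll=2, threshold=0.8)
for frame_id in range(10):
    recorder.on_frame(frame_id, 0.9 if frame_id == 5 else 0.1)
print(recorder.events)  # [{'frame': 5, 'score': 0.9}]
print(recorder.clips)  # [[3, 4, 5, 6, 7]]
```

Ten frames flow through, but only one event record and one five-frame clip survive; everything else is discarded as the buffer rolls over.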

From detections to decisions: convergence with IoT reshapes value

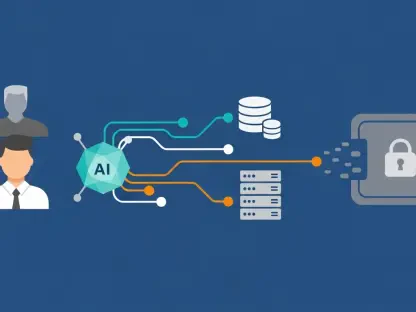

Edge performance alone does not close the loop; the market premium accrues to platforms that fuse vision outputs with contextual signals in real time. IoT tags offer identity without facial recognition, role- and task-based permissions clarify who should be where, equipment sensors expose motion and load, and environmental monitors reveal air quality and other hazards. Together, these inputs elevate a single detection into actionable situational awareness.

A forklift corridor illustrates the new standard. As a person steps into the zone, an edge camera flags missing high-vis apparel; a proximity reader captures the worker’s tag; the system checks that person’s authorization; equipment telemetry confirms multiple forklifts moving nearby. Within hundreds of milliseconds, a local audio cue addresses the worker, nearby operators receive prioritized alerts, and a foreman sees a context-rich notification that includes zone, risk, and status. The escalation path reflects policy and history: repeat behaviors prompt coaching or temporary restrictions, while first-time incursions drive gentle interventions.
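The forklift-corridor sequence can be read as a small decision function that fuses one PPE detection with a tag read, an authorization lookup, equipment telemetry, and per-person history. The Python sketch below is illustrative only: the `Observation` and `ZoneContext` types, the action names, and the escalation thresholds (coaching on the second incursion, a temporary restriction from the third) are hypothetical policy choices, not any vendor's rule set.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    tag_id: str  # from a proximity reader, so no facial recognition is needed
    missing_hi_vis: bool  # from the edge PPE model


@dataclass(frozen=True)
class ZoneContext:
    zone: str
    moving_vehicles: int  # from equipment telemetry


def decide(obs: Observation, ctx: ZoneContext, authorized: set, history: dict) -> list:
    """Return graded (action, target) pairs; updates history in place."""
    unauthorized = obs.tag_id not in authorized
    if not (obs.missing_hi_vis or unauthorized):
        return []  # compliant and permitted: no alert
    risk = "high" if ctx.moving_vehicles > 0 else "elevated"
    actions = [("audio_cue", ctx.zone)]  # gentle, local intervention first
    if ctx.moving_vehicles > 0:
        actions.append(("operator_alert", ctx.zone))
    actions.append(("foreman_notice", f"{ctx.zone} risk={risk} "
                    f"hi_vis_missing={obs.missing_hi_vis} authorized={not unauthorized}"))
    prior = history.get(obs.tag_id, 0)
    if prior >= 2:
        actions.append(("temporary_restriction", obs.tag_id))
    elif prior == 1:
        actions.append(("schedule_coaching", obs.tag_id))
    history[obs.tag_id] = prior + 1
    return actions


history: dict = {}
ctx = ZoneContext("forklift-corridor", moving_vehicles=2)
obs = Observation("tag-17", missing_hi_vis=True)
for attempt in range(3):
    print([name for name, _ in decide(obs, ctx, {"tag-17"}, history)])
```

The first incursion yields only the audio cue, operator alert, and foreman notice; the second adds `schedule_coaching`, and the third adds `temporary_restriction`. Keeping history outside the function makes it easy to swap in a shared store across gateways.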

Data minimization underpins adoption. In-sensor analytics from intelligent imagers and single-board AI cameras now extract detections inside the device pipeline, emitting only structured events, timestamps, and hashed identifiers. Site-wide data volume drops from terabytes to tens of megabytes per month, easing privacy concerns and simplifying compliance while keeping the evidence needed for training and audits.
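A device-side event of this kind might look like the following minimal Python sketch. It uses a keyed hash (HMAC-SHA256 from the standard library) rather than a plain hash, because badge IDs come from a small space and an unkeyed hash could be reversed by enumerating candidates. The field names, the per-site key, and the rate of 3,000 events per day are assumptions for illustration.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Hypothetical per-site secret; in practice it would live in a secure element or vault.
SITE_KEY = b"example-site-key-rotate-me"


def pseudonymize(tag_id: str) -> str:
    """Stable identifier for a tag that cannot be reversed without the key."""
    return hmac.new(SITE_KEY, tag_id.encode(), hashlib.sha256).hexdigest()[:16]


def make_event(camera: str, zone: str, label: str, confidence: float,
               tag_id: str, ts: datetime) -> bytes:
    """Serialize one detection as compact JSON; no pixels leave the device."""
    event = {
        "ts": ts.isoformat(),
        "camera": camera,
        "zone": zone,
        "label": label,
        "confidence": round(confidence, 3),
        "subject": pseudonymize(tag_id),
    }
    return json.dumps(event, separators=(",", ":")).encode()


def monthly_megabytes(event_bytes: int, events_per_day: int, days: int = 30) -> float:
    return event_bytes * events_per_day * days / 1e6


payload = make_event("cam-07", "forklift-corridor", "no_hi_vis", 0.9132, "tag-17",
                     datetime(2025, 1, 6, 8, 30, tzinfo=timezone.utc))
print(len(payload), "bytes per event")
print(f"{monthly_megabytes(len(payload), 3000):.1f} MB/month at 3,000 events/day")
```

At roughly 150 bytes per event and the assumed event rate, one site generates on the order of ten megabytes per month, in line with the tens-of-megabytes range above and several orders of magnitude below continuous video.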

Field realities, regional nuances, and misconceptions that move markets

Deployment lessons from active sites reveal practical constraints and commercial opportunities. In regions with strict privacy regimes, buyers prefer metadata-first pipelines that store video only when policy thresholds trigger. In hot or dusty environments, hardened enclosures and thermal management become gating items for device selection. Night operations require models tuned for low light and infrared (IR) imagery, so vendors differentiate on their training-data pipelines and augmentation.

A common misconception is that identity means facial recognition. In practice, tags, zoning, and correlation offer strong identity linkage without faces, aligning with cultural expectations and regulatory pressure. Another overlooked point is complementarity across modalities. Cameras have blind spots outside their fields of view; geofence triggers can nudge nearby cameras to reframe. Environmental sensors detect gases or particulates but not human exposure; vision corroborates presence and location to focus interventions.
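Both complementarity examples reduce to simple routing rules. In this minimal Python sketch, a geofence trigger selects pan-tilt-zoom (PTZ) cameras with a stored preset covering the zone, and a gas reading above a limit is prioritized by whether vision places anyone in that zone. The camera-to-zone preset map, the `ptz` flag, the priority labels, and the ppm limit are hypothetical configuration, not values from any standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    camera_id: str
    ptz: bool  # can the camera be re-aimed to a stored preset?
    presets: frozenset  # zones reachable via a preset (hypothetical map)


def cameras_to_reframe(zone: str, cameras: list) -> list:
    """On a geofence trigger, pick cameras that can swing onto the zone."""
    return [c.camera_id for c in cameras if c.ptz and zone in c.presets]


def gas_response(zone: str, ppm: float, limit_ppm: float, people_by_zone: dict):
    """Use vision-derived presence to decide how urgent a gas reading is."""
    if ppm < limit_ppm:
        return None
    present = people_by_zone.get(zone, 0)
    priority = "evacuate_and_notify" if present else "inspect_area"
    return {"zone": zone, "ppm": ppm, "people_present": present, "priority": priority}


cams = [
    Camera("cam-01", True, frozenset({"bay-3", "dock-1"})),
    Camera("cam-02", False, frozenset({"bay-3"})),
]
print(cameras_to_reframe("bay-3", cams))  # ['cam-01']
print(gas_response("tank-room", 42.0, 35.0, {"tank-room": 2})["priority"])
```

The same gas reading produces an evacuation-grade response when two people are seen in the tank room and only an inspection task when vision sees the room empty, which is the focusing effect the text describes.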

Market messaging is catching up to these realities. Buyers now evaluate solutions based on fusion capabilities and governance posture, not just model benchmarks. Vendors that package edge orchestration, standardized event schemas, and integration with workforce systems see faster cycles from proof to rollout.

What is next for platforms, connectivity, and governance

Several vectors are shaping near-term competition. Edge-first platforms are becoming default, with intelligent image sensors and low-cost accelerators delivering on-device inference at a fraction of cloud costs. Private 5G and Wi‑Fi 6/6E strengthen local backhaul for event traffic and over-the-air model updates, while containerized runtimes on gateways simplify deployment, rollback, and health monitoring across fleets.

Multimodal fusion is settling in as the baseline architecture. Vision, tags, equipment telemetry, and environmental sensing combine to produce graded, role-aware responses rather than one-size-fits-all alarms. Policy engines increasingly adapt severity using historical patterns and task context, reducing alert fatigue and targeting coaching where it has the most impact. The cloud’s role is becoming clearer: it serves as the control plane for model lifecycle management, cross-site learning, and enterprise integrations rather than as the hot path for inference.
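One way to express history- and task-aware severity is sketched below in Python under stated assumptions. A cooldown suppresses repeat notifications for the same person, zone, and hazard, and a three-level scale is nudged down when the person is assigned to a task in that zone and up after repeated recent events. The `AlertThrottle` class, the level names, the 60-second cooldown, and the repeat count of 3 are illustrative choices.

```python
from typing import Dict, Hashable

LEVELS = ["info", "warn", "critical"]


class AlertThrottle:
    """Suppress duplicate notifications for the same key within a cooldown."""

    def __init__(self, cooldown_s: float):
        self.cooldown_s = cooldown_s
        self._last_sent: Dict[Hashable, float] = {}

    def should_notify(self, key: Hashable, now_s: float) -> bool:
        last = self._last_sent.get(key)
        if last is not None and now_s - last < self.cooldown_s:
            return False  # same person/zone/hazard was notified recently
        self._last_sent[key] = now_s
        return True


def adjust_severity(base: str, task_in_zone: bool, recent_events: int) -> str:
    """Shift a base level using task context and recent history, clamped to LEVELS."""
    i = LEVELS.index(base)
    if task_in_zone:
        i -= 1  # the person is expected here, e.g. an assigned maintenance task
    if recent_events >= 3:
        i += 1  # repeated pattern: escalate
    return LEVELS[max(0, min(i, len(LEVELS) - 1))]


throttle = AlertThrottle(cooldown_s=60)
key = ("tag-17", "forklift-corridor", "no_hi_vis")
print([throttle.should_notify(key, t) for t in (0, 30, 61)])  # [True, False, True]
print(adjust_severity("warn", task_in_zone=True, recent_events=0))  # info
```

Suppressed alerts can still be logged as events; the throttle only limits how often a human is interrupted.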

Governance is moving from reactive to proactive. Vendors that enable metadata-first designs, explicit retention windows, and auditable decision logs are aligning with regulators and worker councils. Standardized schemas for safety events are emerging, which lowers integration friction and unlocks cross-site analytics. From 2025 to 2027, buyers can expect steady consolidation around platforms that prove sub-second response, minimize data movement, and demonstrate trustworthy automation.
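An auditable decision log with an explicit retention window can be approximated with a hash chain: each entry commits to the previous entry's hash, so edits to retained history are detectable, and purging expired entries only moves a stored anchor forward. This is a minimal Python sketch with hypothetical names (`DecisionLog`, `purge_before`), and it assumes entries are appended in timestamp order. A production system would also sign or externally timestamp the anchor, which this example does not do.

```python
import hashlib
import json


def _digest(ts: int, decision: dict, prev: str) -> str:
    body = json.dumps({"ts": ts, "decision": decision, "prev": prev}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


class DecisionLog:
    def __init__(self) -> None:
        self.entries: list = []  # assumed to be appended in timestamp order
        self.anchor = "0" * 64  # hash that precedes the first retained entry

    def append(self, ts: int, decision: dict) -> None:
        prev = self.entries[-1]["hash"] if self.entries else self.anchor
        self.entries.append({"ts": ts, "decision": decision, "prev": prev,
                             "hash": _digest(ts, decision, prev)})

    def verify(self) -> bool:
        """Recompute the chain from the anchor; False if anything was altered."""
        prev = self.anchor
        for e in self.entries:
            if e["prev"] != prev or _digest(e["ts"], e["decision"], prev) != e["hash"]:
                return False
            prev = e["hash"]
        return True

    def purge_before(self, cutoff_ts: int) -> None:
        """Apply the retention window; the last purged hash becomes the anchor."""
        while self.entries and self.entries[0]["ts"] < cutoff_ts:
            self.anchor = self.entries.pop(0)["hash"]


log = DecisionLog()
for ts, action in [(100, "audio_cue"), (200, "operator_alert"), (300, "coaching")]:
    log.append(ts, {"zone": "forklift-corridor", "action": action})
log.purge_before(150)  # retention drops the ts=100 entry
print(len(log.entries), log.verify())  # 2 True
log.entries[0]["decision"]["action"] = "none"  # simulated tampering
print(log.verify())  # False
```

Retention and auditability coexist here because deleting the oldest entries never invalidates the chain that remains.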

Operator playbook for scalable adoption

Enterprises are reframing business cases around leading indicators rather than only recordable incidents. Near-miss detections, alert precision, and response latency now feature in success metrics, linking directly to training workflows and supervisor coaching. This shift aligns incentives: fewer false alarms and faster, context-aware interventions deliver measurable productivity and safety gains.
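These leading indicators are straightforward to compute from reviewed alert records. This minimal Python sketch assumes each alert carries a reviewer verdict (real hazard or false alarm) and a measured detection-to-alert latency. It uses a nearest-rank 95th percentile, and the rollout gate values (precision of at least 0.8, p95 latency of at most 1,000 ms) are hypothetical targets, not industry benchmarks.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewedAlert:
    confirmed: bool  # reviewer judged it a real hazard or near miss
    latency_ms: float  # detection-to-alert time measured at the edge


def precision(alerts: list) -> float:
    return sum(a.confirmed for a in alerts) / len(alerts) if alerts else 0.0


def p95_latency(alerts: list) -> float:
    """Nearest-rank 95th percentile of detection-to-alert latency."""
    if not alerts:
        raise ValueError("need at least one alert")
    values = sorted(a.latency_ms for a in alerts)
    return values[math.ceil(0.95 * len(values)) - 1]


def ready_to_expand(alerts: list, min_precision: float = 0.8,
                    max_p95_ms: float = 1000.0) -> bool:
    """Hypothetical gate for expanding from a pilot zone to more high-risk zones."""
    if not alerts:
        return False
    return precision(alerts) >= min_precision and p95_latency(alerts) <= max_p95_ms


sample = [ReviewedAlert(True, 100.0 * i) for i in range(1, 10)] + [ReviewedAlert(False, 1200.0)]
print(precision(sample), p95_latency(sample), ready_to_expand(sample))  # 0.9 1200.0 False
```

In this sample, precision clears the gate but one slow alert pushes the tail latency past the target, which is exactly the kind of signal a pilot review should surface before expansion.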

The winning deployment pattern is consistent across sectors. Run PPE and behavior models on sensors or local gateways, orchestrate correlation with tags, equipment, and environmental data at the edge, and reserve the cloud for model distribution, cross-site analytics, and compliance reporting. Identity is fused through tags and access systems to avoid facial recognition wherever possible, and storage policies favor events and short clips tied to explicit thresholds.

Program durability depends on fleet management and model upkeep. Secure boot, signed updates, and remote diagnostics keep the edge estate reliable. Models require periodic validation and retuning for new PPE, lighting, and layouts. Hardware selections reflect environmental stressors and maintenance capabilities. With these foundations, organizations scale from single-site pilots to portfolios without a step change in cost or complexity.
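The update discipline can be sketched as verify-then-activate with a retained fallback. This minimal Python sketch uses an HMAC-SHA256 tag from the standard library as a stand-in for a real signature; production fleets would typically verify asymmetric signatures against a public key anchored by secure boot, so that devices never hold a signing secret. `ModelSlot`, the version strings, and the key are hypothetical.

```python
import hashlib
import hmac

# Stand-in shared key for this sketch only; real devices should verify
# asymmetric signatures and never store a key that can create them.
UPDATE_KEY = b"example-fleet-key"


def tag_for(artifact: bytes) -> str:
    return hmac.new(UPDATE_KEY, artifact, hashlib.sha256).hexdigest()


class ModelSlot:
    """Active model plus one retained fallback for rollback."""

    def __init__(self, version: str, blob: bytes):
        self.active = (version, blob)
        self.fallback = None

    def apply_update(self, version: str, blob: bytes, tag: str) -> bool:
        if not hmac.compare_digest(tag_for(blob), tag):
            return False  # reject unsigned or tampered artifacts; keep current model
        self.fallback, self.active = self.active, (version, blob)
        return True

    def rollback(self) -> bool:
        if self.fallback is None:
            return False
        self.active, self.fallback = self.fallback, None
        return True


slot = ModelSlot("ppe-v1", b"weights-v1")
good = b"weights-v2"
print(slot.apply_update("ppe-v2", good, tag_for(good)))  # True
print(slot.apply_update("ppe-v3", b"tampered", tag_for(good)))  # False
print(slot.active[0])  # ppe-v2
slot.rollback()
print(slot.active[0])  # ppe-v1
```

Because a failed check leaves the active model untouched, a bad artifact cannot displace a known-good one, and rollback needs no network round trip.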

The bottom line for buyers and builders

This analysis shows that cloud-only vision struggles to meet the economic and latency demands of continuous safety monitoring, even when model accuracy is strong. Edge inference and data minimization reset the cost curve and cut alert times to the thresholds safety teams need. Value increases further when vision signals are fused with identity, permissions, equipment state, and environmental context to drive graded, role-aware responses.

Successful strategies favor an edge-first architecture with the cloud as a control plane, standardized event schemas for interoperability, and governance by design. Vendors that prove sub-second response, reduce data movement, and embed policy controls gain a clear commercial advantage. For operators, the pragmatic path forward is to anchor programs in leading indicators, invest in fleet management, and keep models current with site realities.

The next steps are targeted pilots that measure alert precision and response latency, rapid expansion to high-risk zones once thresholds are met, and steady integration with workforce management to align permissions and tasks. As budgets shift from experiments to operations, the market rewards solutions that deliver perception plus context locally, at scale, and at a predictable cost.