The modern digital enterprise generates an overwhelming torrent of security data, a reality that has pushed traditional cybersecurity models to their breaking point. In this high-volume environment, security teams are often caught in a reactive cycle, waiting for massive datasets to be ingested, indexed, and processed by Security Information and Event Management (SIEM) systems or data lakes before they can even begin to hunt for threats. This inherent delay creates a critical vulnerability gap, a window of opportunity where adversaries can operate undetected while defenders are still organizing their data. The trade-off between comprehensive visibility and manageable operational costs has become untenably steep, forcing many organizations to choose what data to ignore. However, a fundamental shift is underway, moving analysis from a delayed, post-ingestion chore to a real-time, in-stream capability that promises to close this gap and redefine the speed and efficacy of threat detection in the cloud.

A New Paradigm for Threat Detection

The evolution toward real-time analytics represents a direct response to the limitations of legacy security architectures. Instead of treating data analysis as the final step in a long pipeline, this new approach embeds detection and enrichment directly into the data stream itself, transforming the very nature of security operations.

Tackling the Data Deluge

The core challenge confronting modern Security Operations Centers (SOCs) is the sheer scale of telemetry produced by cloud services, networks, and endpoints. Traditional workflows, which require all of this raw data to be collected and stored before analysis can begin, are both economically and operationally unsustainable. This model introduces significant latency, because ingestion and indexing can take precious minutes or even hours. By the time an analyst can run a query, a sophisticated threat may have already achieved its objectives. This “data explosion” forces a difficult compromise: either pay exorbitant costs to store and process vast amounts of low-value data, or log events selectively and create dangerous blind spots. The result is a reactive posture that leaves security teams perpetually one step behind, sifting through historical data for clues rather than identifying threats as they emerge. This is the inefficiency the in-stream approach aims to eliminate.

A strategic partnership between Abstract Security Inc. and Netskope Inc. exemplifies the industry’s pivot toward solving this data-centric problem. The collaboration feeds high-fidelity data from Netskope’s Security Service Edge (SSE) platform directly into Abstract’s adaptive data pipeline. This architecture bypasses the traditional ingest-then-analyze sequence by performing “in-motion analytics”: as data flows from its source, it is inspected immediately for indicators of compromise, anomalous patterns, and policy violations. This method not only shortens mean time to detect (MTTD) from hours to seconds but also changes the quality of the data that downstream systems receive. By bringing detection to the data stream, organizations can shift from a costly, retrospective analysis model to a proactive, real-time security posture better suited to the dynamic nature of modern cloud environments.
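To make “in-motion analytics” concrete, here is a minimal sketch in Python, assuming events arrive as dictionaries with hypothetical fields (`user`, `country`, `dst_domain`, `action`, `app_sanctioned`, `bytes_out`). These field names, the indicator list, the 50 MiB upload threshold, and the function `detect_in_stream` are illustrative inventions, not Netskope's log schema or Abstract's API. The point is structural: each event is checked for an indicator match, a policy violation, and a simple anomaly the moment it is read, and a detection is yielded without waiting for the stream to be stored or indexed.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, Iterator

# Hypothetical indicator set; a real pipeline would refresh this from a threat-intel feed.
KNOWN_BAD_DOMAINS = {"malicious.example", "c2.example.net"}
# Hypothetical policy threshold: uploads above 50 MiB to unsanctioned apps.
UPLOAD_LIMIT_BYTES = 50 * 1024 * 1024


@dataclass
class Detection:
    rule: str
    event: dict


def detect_in_stream(events: Iterable[dict]) -> Iterator[Detection]:
    """Inspect each event as it arrives and yield detections immediately.

    Nothing is stored or indexed first; the only state kept is a small
    per-user set of countries used for the anomaly check.
    """
    seen_countries: dict[str, set[str]] = defaultdict(set)
    for event in events:
        # Indicator-of-compromise check: destination matches a known-bad domain.
        if event.get("dst_domain") in KNOWN_BAD_DOMAINS:
            yield Detection("ioc_domain_match", event)

        # Policy check: large upload to an app not marked as sanctioned.
        if (
            event.get("action") == "upload"
            and not event.get("app_sanctioned", True)
            and event.get("bytes_out", 0) > UPLOAD_LIMIT_BYTES
        ):
            yield Detection("unsanctioned_large_upload", event)

        # Simple anomaly check: user appears from a country not seen earlier in this stream.
        user, country = event.get("user"), event.get("country")
        if user and country:
            if seen_countries[user] and country not in seen_countries[user]:
                yield Detection("new_country_for_user", event)
            seen_countries[user].add(country)


if __name__ == "__main__":
    sample = [
        {"user": "alice", "country": "US", "dst_domain": "docs.example.com", "action": "browse"},
        {"user": "bob", "country": "US", "dst_domain": "c2.example.net", "action": "browse"},
        {"user": "carol", "country": "DE", "dst_domain": "files.example.org",
         "action": "upload", "app_sanctioned": False, "bytes_out": 80 * 1024 * 1024},
        {"user": "alice", "country": "BR", "dst_domain": "docs.example.com", "action": "browse"},
    ]
    for d in detect_in_stream(sample):
        print(d.rule, d.event["user"])
```

Because `detect_in_stream` is a generator, the same code works on an unbounded source such as a message-queue consumer. The per-user country set grows without limit here; a production system would presumably bound it with a time window.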

The Mechanics of In-Motion Analytics

The value of this model lies in its ability not only to identify security events but also to enrich them in real time, giving analysts actionable context at the moment of detection. Raw data points become meaningful security intelligence before they are ever stored.

The Abstract platform’s core function is to analyze data from Netskope’s log streaming service as it moves through the pipeline, identifying potential threats without first indexing the data. More importantly, it enriches the stream with context from other sources: user identity, geographic location, and up-to-the-minute threat intelligence are appended to relevant events in real time. This turns a simple log entry into a rich, multidimensional security event. For an analyst, it means receiving an alert that already answers the “who, what, where, and why” of a potential incident. Because that context arrives with the alert, analysts spend far less time on the manual correlation and research that dominate traditional SOC workflows, which lets security teams make faster, better-informed decisions and significantly shortens the response lifecycle.
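The enrichment step can be sketched the same way. In this minimal Python example, three in-memory dictionaries stand in for an identity provider, a GeoIP database, and a threat-intelligence feed. Their contents, the field names (`user`, `src_ip`, `dst_ip`), and the functions `enrich` and `enrich_stream` are hypothetical, chosen only to illustrate the pattern of appending context to each event as it passes through. The IP addresses come from the RFC 5737 documentation ranges.

```python
from typing import Iterable, Iterator

# Hypothetical stand-ins for an identity provider, a GeoIP database,
# and a threat-intelligence feed (IPs are RFC 5737 documentation addresses).
IDENTITY = {"bob": {"department": "Finance", "title": "Analyst"}}
GEO = {"203.0.113.7": {"country": "NL", "city": "Amsterdam"}}
THREAT_INTEL = {"198.51.100.23": {"reputation": "malicious", "source": "example-feed"}}


def enrich(event: dict) -> dict:
    """Return a copy of the event with identity, location, and threat context attached."""
    enriched = dict(event)  # shallow copy so the raw event is left unchanged
    enriched["user_context"] = IDENTITY.get(event.get("user"), {})
    enriched["src_geo"] = GEO.get(event.get("src_ip"), {})
    enriched["dst_threat"] = THREAT_INTEL.get(event.get("dst_ip"), {"reputation": "unknown"})
    return enriched


def enrich_stream(events: Iterable[dict]) -> Iterator[dict]:
    """Enrich events one at a time as they flow, before anything is written downstream."""
    for event in events:
        yield enrich(event)


if __name__ == "__main__":
    raw = {"user": "bob", "src_ip": "203.0.113.7", "dst_ip": "198.51.100.23", "action": "upload"}
    for alert in enrich_stream([raw]):
        print(alert["user_context"], alert["src_geo"], alert["dst_threat"])
```

A real pipeline would presumably back these lookups with cached queries against live directory and intelligence services; keeping each lookup fast and local is what makes per-event enrichment feasible in the stream rather than after indexing.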

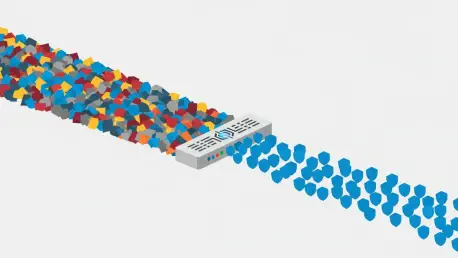

Furthermore, this in-stream approach enables dynamic, selective routing of security data. Instead of flooding a SIEM or data lake with terabytes of unfiltered logs, much of which is redundant or low-value, the system filters the stream so that only enriched, high-fidelity events deemed relevant reach these expensive downstream systems. The financial and operational impact is substantial. Because analytical tools are fed only the most valuable information, data ingestion and storage costs, a major pain point for enterprises, fall sharply. The same pre-processing step also lowers the volume of false positives that analysts must investigate. By curating data in motion, the system improves the signal-to-noise ratio, letting SOC teams focus on genuine threats and escape the alert fatigue that plagues so many security operations.
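A routing policy of this kind might look like the following minimal Python sketch. It assumes each event has already passed through detection and enrichment and carries hypothetical `detections` and `dst_threat` fields. The low-value action names, the destination labels, and the choice to send non-alerting events to a cheaper `archive` sink instead of discarding them are all illustrative assumptions, not a description of how Abstract's pipeline is configured. Sinks are plain callables, so a real deployment could plug in a SIEM forwarder or an object-store writer.

```python
from collections import Counter
from typing import Callable, Iterable, Mapping

# Hypothetical event types treated as noise and dropped outright.
LOW_VALUE_ACTIONS = {"heartbeat", "keepalive"}


def choose_destination(event: dict) -> str:
    """Decide where one enriched event should go (hypothetical routing policy)."""
    if event.get("action") in LOW_VALUE_ACTIONS:
        return "drop"
    threat = event.get("dst_threat") or {}
    high_fidelity = bool(event.get("detections")) or threat.get("reputation") == "malicious"
    # Only high-fidelity events are sent to the expensive SIEM; the rest go to cheap storage.
    return "siem" if high_fidelity else "archive"


def route_stream(events: Iterable[dict], sinks: Mapping[str, Callable[[dict], None]]) -> Counter:
    """Forward each event to its sink as it arrives and count events per destination."""
    counts: Counter = Counter()
    for event in events:
        destination = choose_destination(event)
        counts[destination] += 1
        sink = sinks.get(destination)
        if sink is not None:  # "drop" has no sink, so those events go nowhere
            sink(event)
    return counts


if __name__ == "__main__":
    siem, archive = [], []
    events = [
        {"action": "heartbeat"},
        {"action": "browse", "dst_threat": {"reputation": "unknown"}},
        {"action": "upload", "dst_threat": {"reputation": "malicious"}},
        {"action": "browse", "detections": ["ioc_domain_match"]},
    ]
    counts = route_stream(events, {"siem": siem.append, "archive": archive.append})
    print(dict(counts))
```

Keeping the routing decision in a small pure function like `choose_destination` makes the policy easy to test and to tune as cost and visibility priorities change.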

Redefining Security Operations and Value

The shift to real-time, in-stream analysis offers more than just speed; it presents an opportunity to fundamentally re-architect security operations for greater efficiency, higher return on investment, and a more resilient and proactive defense posture.

From Operational Overhead to Strategic Advantage

The operational benefits of this model extend throughout the security lifecycle. By providing immediate visibility into risks as they materialize, it dramatically accelerates the entire detection and response process. The transformation of raw telemetry into actionable intelligence at the pipeline level streamlines SOC operations, freeing analysts from the burdensome tasks of data normalization and manual enrichment. This newfound efficiency allows teams to focus on higher-value activities like threat hunting, incident response, and strategic security planning. The result is a more agile and effective security organization, one that can replace a fragmented stack of legacy tools with a single, adaptive streaming layer. This consolidation not only simplifies the security architecture but also provides a measurable return on investment by optimizing resource allocation and enhancing the overall security posture of the organization.

The financial case for adopting in-stream analytics is compelling and multifaceted. The most immediate benefit is the significant reduction in costs associated with data ingestion and storage in SIEMs and data lakes. By intelligently filtering and routing only high-value, contextualized security events, organizations can curb the exponential growth of their security data budgets without sacrificing visibility. This targeted approach ensures that expensive analytical platforms are utilized for their intended purpose—analyzing credible threats—rather than serving as costly repositories for raw data. Over time, this optimized data flow enhances the return on investment for the entire security stack. It improves the efficiency of both human analysts and automated tools, leading to faster and more accurate threat containment, which in turn minimizes the potential financial impact of a security breach.
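The cost argument reduces to simple proportionality if, as an assumption, the downstream platform charges per gigabyte ingested (volume-based pricing is common for SIEMs and data lakes, but not universal). The sketch below uses a hypothetical function `ingest_cost` and made-up figures: forwarding a fraction `f` of a daily volume `V` at price `p` per GB costs `V * f * p` instead of `V * p`, so the saving grows linearly with the share of the stream that is filtered out.

```python
def ingest_cost(volume_gb: float, forwarded_fraction: float, price_per_gb: float) -> float:
    """Daily ingest cost under an assumed flat per-GB pricing model."""
    if not 0.0 <= forwarded_fraction <= 1.0:
        raise ValueError("forwarded_fraction must be between 0 and 1")
    return volume_gb * forwarded_fraction * price_per_gb


if __name__ == "__main__":
    # Made-up inputs for illustration only: 2,000 GB/day at 0.50 per GB.
    volume, price = 2000.0, 0.50
    unfiltered = ingest_cost(volume, 1.0, price)
    filtered = ingest_cost(volume, 0.25, price)  # assume 25% of events are forwarded
    # prints: unfiltered: 1000.00, filtered: 250.00, saved: 750.00
    print(f"unfiltered: {unfiltered:.2f}, filtered: {filtered:.2f}, saved: {unfiltered - filtered:.2f}")
```

Real pricing often includes tiers, commitments, and retention charges, so this linear model only captures the first-order effect the paragraph describes.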

A Conclusive Shift in Security Strategy

Ultimately, the move toward real-time, in-stream security analytics marks a definitive turning point in how organizations approach data-driven defense. Integrating advanced processing capabilities directly into the data pipeline addresses foundational inefficiencies that have long plagued cloud security. This evolution is not merely an incremental improvement; it represents a fundamental re-architecting of security workflows. Organizations that adopt this model can overcome the crippling trade-off between cost and visibility. By analyzing, enriching, and filtering security telemetry in motion, they can move their defensive posture from reactive to proactive, identifying and contextualizing critical threats the moment they appear. This shift equips security teams with the speed and intelligence required to defend the modern, hyper-distributed enterprise.