Quantifying the value of an internal developer platform is one of the harder problems facing modern engineering organizations, because simplistic metrics rarely capture the reality of developer experience and productivity. The John Lewis Partnership (JLP) offers a useful case study: its platform team moved deliberately from relying on raw, objective data to a multi-faceted measurement strategy. That shift rests on one recognition: a platform’s success is measured not only by quantitative outputs but also by a contextual understanding of how it empowers engineers, fosters best practices, and drives the outcomes the organization wants. By combining objective performance indicators with systematic qualitative feedback and behavioral analysis, JLP built a framework that turned data from a reporting tool into an enabler of continuous improvement for its engineering teams. The approach is instructive for any organization that wants to move beyond superficial dashboards and build a measurement system that both reflects and enhances the value of its developer ecosystem.

The Problem with Purely Objective Data

The JLP platform team initially adopted widely recognized objective metrics, including the DORA metrics (deployment frequency, lead time for changes, change failure rate, and time to restore service), to assess the performance and impact of their internal developer platform. However, this quantitative approach quickly revealed significant limitations and potential “traps.” The core issue was that objective data, viewed in isolation, is easy to misinterpret because it lacks the context needed to tell a complete story. For instance, a notable decline in a team’s performance metrics could easily be read as a problem with their workflow or efficiency. In reality, the dip might have been caused by a temporary reassignment of the team to a different product, a strategic decision unrelated to their technical capabilities. Similarly, a metric tracking incident resolution time could be skewed by simple procedural errors, such as an engineer forgetting to close an incident ticket promptly: the recorded duration would overstate the actual time to recovery and drag the metric down. These examples showed that relying solely on numbers created a distorted view of reality, leading to potentially flawed conclusions and misguided interventions.
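The ticket-closure problem is easy to reproduce with a little arithmetic. The sketch below uses invented incident timestamps (none of these figures come from JLP) to show how a single ticket left open for three days dominates a mean-based recovery metric, while the median barely moves. Comparing the two is one possible sanity check, not a method the source attributes to JLP.

```python
from datetime import datetime, timedelta
from statistics import mean, median


def recovery_minutes(incidents):
    """Return the recorded duration of each incident in minutes."""
    return [(closed - opened).total_seconds() / 60 for opened, closed in incidents]


# Hypothetical incident records: (ticket opened, ticket closed).
start = datetime(2024, 1, 1, 9, 0)
incidents = [
    (start, start + timedelta(minutes=30)),
    (start, start + timedelta(minutes=45)),
    (start, start + timedelta(minutes=20)),
    # Assumed scenario: service recovered quickly, but the ticket
    # was only closed three days later.
    (start, start + timedelta(days=3)),
]

durations = recovery_minutes(incidents)
mean_minutes = mean(durations)      # pulled far upward by the stale ticket
median_minutes = median(durations)  # stays close to the typical incident

print(f"mean: {mean_minutes} min, median: {median_minutes} min")
```

A large gap between the two figures does not say what went wrong, only that the number needs a conversation before anyone acts on it.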

This dependency on context-deficient data led to a significant scaling challenge that threatened the viability of the measurement strategy. When the platform served only a small number of development teams, the platform team could overcome the limitations of the data through direct, high-touch engagement. They could engage in conversations to uncover the context behind the numbers, understand the unique circumstances of each team, and interpret the metrics correctly. However, as the platform’s user base expanded to encompass several dozen teams, this manual, conversational approach became entirely unsustainable. The sheer effort required to maintain a deep understanding of each team’s situation created an insurmountable operational bottleneck. This breakdown in communication rendered the objective data increasingly less useful for making informed, strategic decisions at scale. It became clear that a new, more scalable method was needed to capture the subjective, context-rich feedback from engineers that was essential for a true understanding of the platform’s value and the developer experience it provided.

The Shift Towards Qualitative Insights

To address the shortcomings of a purely quantitative approach, JLP embarked on a mission to systematically gather and analyze subjective feedback from its engineering community, a process that evolved through two distinct stages. The organization’s first attempt was the creation of “Service Operability Assessments,” which were comprehensive questionnaires distributed quarterly to tenant teams. These assessments were thoughtfully designed to be more than a simple checklist; they posed a series of reflective questions intended to encourage teams to evaluate their adherence to best practices for service operation. Initially, this method showed promise when facilitated by a senior platform engineer who could guide the discussion, ask probing follow-up questions, and extract meaningful, actionable insights. However, this model quickly ran into the same scalability issues that plagued their initial data collection efforts. When the process was converted to a self-service model to accommodate the growing number of teams, its effectiveness plummeted. Teams began to fall into a routine of copying and pasting their responses from the previous quarter, rendering the collected data stale and unreliable as a reflection of their current challenges and practices.

The breakthrough in gathering qualitative feedback came when JLP adopted the DX platform, a specialized tool that reshaped its approach. The DX methodology departed significantly from the earlier attempts. Rather than surveying entire teams periodically, it engaged individual engineers for just a few minutes every three months, using a curated set of questions backed by research from the founders of the DORA framework and other industry experts. This grounding helped keep the collected feedback relevant and reliable. The primary advantage of the new platform was that results could be broken down at several levels: across the entire engineering organization, within specific product platforms, or down to the individual team. Cross-referencing this granular insight with their objective DORA data enabled what they termed “rich conversations” and a much deeper understanding of the developer experience. With both sources in hand, the platform team could move from a reactive posture to a proactive one, offering targeted advice and developing new platform features that addressed the specific root causes of a team’s struggles.
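To make the idea of analyzing feedback at different levels concrete, here is a minimal sketch of grouping survey scores by organization, product platform, and team. The record shape, the 1 to 5 scale, and the platform and team names are all assumptions for illustration; this is not the DX platform’s data model or API.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey responses on an assumed 1-5 scale.
responses = [
    {"platform": "online", "team": "checkout", "score": 4},
    {"platform": "online", "team": "checkout", "score": 2},
    {"platform": "online", "team": "search", "score": 5},
    {"platform": "supply-chain", "team": "warehouse", "score": 3},
    {"platform": "supply-chain", "team": "warehouse", "score": 3},
]


def average_by(responses, key):
    """Average the scores of responses grouped by key(response)."""
    groups = defaultdict(list)
    for response in responses:
        groups[key(response)].append(response["score"])
    return {group: mean(scores) for group, scores in groups.items()}


# The same responses viewed at three levels of granularity.
org_wide = average_by(responses, lambda r: "all")
by_platform = average_by(responses, lambda r: r["platform"])
by_team = average_by(responses, lambda r: (r["platform"], r["team"]))

print(org_wide, by_platform, by_team, sep="\n")
```

In this invented data the organization-wide average hides the fact that one team averages 3 while a neighbouring team on the same platform scores 5, which is the kind of difference a team-level view surfaces.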

Customizing Metrics for Strategic Focus

While the out-of-the-box capabilities of the DX platform provided immense value, JLP further elevated its data-gathering strategy by augmenting the system with custom queries tailored to its unique strategic priorities. This customization allowed the team to zero in on specific areas of interest and gather targeted feedback that informed key business decisions. They began actively measuring Customer Satisfaction (CSAT) to gauge sentiment toward the platform and its Backstage developer portal. Concurrently, they started tracking the onboarding experience by measuring the time it took for new engineers to submit their first pull request and by directly surveying them about the process. Another critical area of focus was the evaluation of AI coding assistants; rather than simply following market trends, JLP used the platform to assess engineers’ opinions on the effectiveness of these tools, thereby building a data-driven business case for further investment. This bespoke approach ensured that development efforts were precisely targeted at areas with a clear, demonstrated need, maximizing the impact of the platform team’s work and aligning it with the real-world challenges faced by developers.
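The onboarding measure described above, time to a new engineer’s first pull request, can be sketched as follows. The names, dates, and data sources are hypothetical; in practice the start dates would likely come from an HR or identity system and the pull requests from the source-control host, neither of which the source specifies.

```python
from datetime import date

# Hypothetical start dates for new joiners.
start_dates = {
    "alice": date(2024, 3, 4),
    "bob": date(2024, 3, 11),
    "carol": date(2024, 3, 18),  # has not opened a pull request yet
}

# Hypothetical pull request events: (author, date opened).
pull_requests = [
    ("alice", date(2024, 3, 15)),
    ("alice", date(2024, 3, 8)),
    ("bob", date(2024, 3, 25)),
]


def days_to_first_pr(start_dates, pull_requests):
    """Days between each joiner's start date and their earliest pull request."""
    first_pr = {}
    for author, opened in pull_requests:
        if author not in start_dates:
            continue  # ignore authors who are not tracked as new joiners
        if author not in first_pr or opened < first_pr[author]:
            first_pr[author] = opened
    return {a: (first_pr[a] - start_dates[a]).days for a in first_pr}


onboarding = days_to_first_pr(start_dates, pull_requests)
print(onboarding)
```

Joiners with no pull request yet are left out of the result rather than counted as zero, so they do not flatter the metric; the number says nothing about why onboarding took as long as it did, which is where the direct survey complements it.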

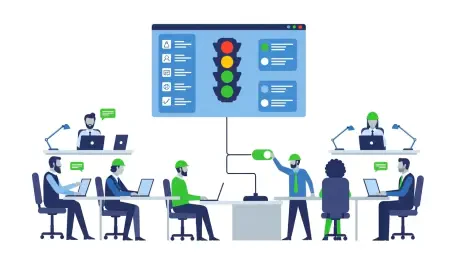

As the developer platform reached a higher level of maturity, JLP’s measurement philosophy underwent another significant evolution. The central question shifted from “Is the platform valuable?” to a more sophisticated inquiry: “Are our tenants deriving the maximum possible value from its features?” This change in focus prompted the development of an advanced, in-house system designed to measure the adoption of specific platform features, which they called the “Technical Health” feature. Implemented as a custom plugin within their Backstage Developer Portal, this system queries an internal API that aggregates data from numerous small, independently deployable jobs. Each job acts as a microservice, designed to collect specific information about a team’s adherence to a recommended practice or their adoption of a particular feature. The results are then presented through intuitive, aggregated views that use a simple traffic-light system (red, yellow, green), complemented by detailed task lists and leaderboards. This design was intentionally crafted to capture the attention of engineers and their managers, creating a powerful visual motivation to improve. JLP discovered that the simple, gamified incentive for an engineer to “turn a traffic-light green” was a far more effective driver of adoption than traditional methods like publishing documentation or making company-wide announcements.
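The aggregation step can be illustrated with a small sketch: individual check results are rolled up per team into a traffic-light status, a leaderboard, and a task list of failing measures. The thresholds, measure names, and data shapes below are assumptions made for illustration; the source does not describe how JLP’s API or Backstage plugin compute these views.

```python
from dataclasses import dataclass
from enum import Enum


class Light(Enum):
    RED = "red"
    YELLOW = "yellow"
    GREEN = "green"


@dataclass(frozen=True)
class CheckResult:
    team: str
    measure: str  # hypothetical measure name reported by a collection job
    passed: bool


def light_for(passed: int, total: int, yellow_at: float = 0.5) -> Light:
    """Assumed rule: green only when every check passes, yellow from 50%."""
    if passed == total:
        return Light.GREEN
    if passed / total >= yellow_at:
        return Light.YELLOW
    return Light.RED


def leaderboard(results):
    """Rank teams by pass ratio (highest first), with their traffic light."""
    counts = {}
    for r in results:
        passed, total = counts.get(r.team, (0, 0))
        counts[r.team] = (passed + int(r.passed), total + 1)
    rows = [(team, p / t, light_for(p, t)) for team, (p, t) in counts.items()]
    return sorted(rows, key=lambda row: (-row[1], row[0]))


def task_list(results, team):
    """Measures a team still has to fix to turn its light green."""
    return sorted(r.measure for r in results if r.team == team and not r.passed)


results = [
    CheckResult("checkout", "standard-pipeline", True),
    CheckResult("checkout", "resource-sizing", True),
    CheckResult("checkout", "disruption-budget", True),
    CheckResult("search", "standard-pipeline", True),
    CheckResult("search", "resource-sizing", True),
    CheckResult("search", "disruption-budget", False),
    CheckResult("warehouse", "standard-pipeline", True),
    CheckResult("warehouse", "resource-sizing", False),
    CheckResult("warehouse", "disruption-budget", False),
]

board = leaderboard(results)
for team, ratio, light in board:
    print(f"{team:10} {ratio:.0%} {light.value}")
```

Keeping the task list next to the light matters for the gamified incentive: a red or yellow status is only motivating if the engineer can see exactly which measures stand between them and green.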

A Mature Framework for Technical Health

The Technical Health feature gives teams and leadership an overview of engineering practices by capturing measures across four distinct categories. The first category, also named “Technical Health,” includes seventeen measures that track adherence to the “paved road.” These check that teams use the standard CI/CD pipeline and the custom Microservice CRD instead of managing their own Terraform, follow established Kubernetes best practices such as proper resource sizing and disruption budgets, and keep their base images up to date. The second category, “Operational Readiness,” consists of eighteen measures that evolved from an old checklist previously used during handovers between delivery and operations teams. The checklist was adapted to focus on platform-specific features that promote strong operability, such as having a pre-flight configuration, published runbooks for incident response, and current, easily accessible documentation. Together, these two categories give a clear and actionable view of a team’s alignment with organizational standards for both development and operations.

The final two categories of the Technical Health feature demonstrate the system’s flexibility and its role in driving broader organizational initiatives. The “Migrations” category is designed to track the progress of mandatory work required of tenant teams, such as updating to non-deprecated Kubernetes API versions or adopting newer, more secure platform features to phase out older, vulnerable ones. This provides product managers with a clear, quantifiable way to prioritize this essential “keep-the-lights-on” work alongside feature development. Finally, the “Broader Engineering Practices” category was designed to be extensible, allowing other groups within the organization, such as engineering leadership, to contribute their own measures. This has been used to track the adoption of the latest design system versions across front-end applications and to monitor adherence to wider organizational engineering standards that extend beyond the platform itself. This extensibility transforms the feature from a platform-centric tool into a comprehensive engineering excellence dashboard that supports a wide range of strategic goals across the entire technology department.
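A migration measure of the kind described for deprecated Kubernetes API versions could be sketched as below. The manifest inventory and team names are hypothetical, and the table lists only a small illustrative subset of API versions that upstream Kubernetes has removed; it is not JLP’s actual rule set.

```python
# Illustrative subset of (apiVersion, kind) pairs removed from Kubernetes.
# A real check would need the full list for the target cluster version.
REMOVED_APIS = {
    ("extensions/v1beta1", "Ingress"),
    ("policy/v1beta1", "PodDisruptionBudget"),
    ("batch/v1beta1", "CronJob"),
}

# Hypothetical inventory of manifests per tenant team.
manifests_by_team = {
    "checkout": [
        {"apiVersion": "apps/v1", "kind": "Deployment"},
        {"apiVersion": "policy/v1", "kind": "PodDisruptionBudget"},
    ],
    "search": [
        {"apiVersion": "apps/v1", "kind": "Deployment"},
        {"apiVersion": "batch/v1beta1", "kind": "CronJob"},
    ],
}


def migration_progress(manifests_by_team):
    """Per team: manifests still on a removed API, and whether they are done."""
    progress = {}
    for team, manifests in manifests_by_team.items():
        outstanding = [
            m for m in manifests if (m["apiVersion"], m["kind"]) in REMOVED_APIS
        ]
        progress[team] = {"outstanding": outstanding, "done": not outstanding}
    return progress


def percent_complete(progress):
    """Share of teams that have finished the migration, as a percentage."""
    return 100 * sum(p["done"] for p in progress.values()) / len(progress)


progress = migration_progress(manifests_by_team)
print(f"{percent_complete(progress):.0f}% of teams migrated")
```

A single completion percentage like this is the sort of figure a product manager can weigh against feature work, while the per-team list of outstanding manifests tells each team what remains.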

Key Principles from the Journey

The John Lewis Partnership’s journey showed that implementing a comprehensive measurement strategy is as much about cultural readiness as it is about technical implementation. Introducing such detailed assurance measures required a delicate touch, one that reflected the maturity of both the platform and the organization’s engineering culture. The “Technical Health” feature was deliberately withheld until the platform was well-established, widely adopted, and broadly perceived as a valuable asset rather than an impediment. Introducing such “guardrails” too early in the platform’s lifecycle could have made the platform seem overly restrictive or bureaucratic, which would have risked harming adoption and alienating the very engineers it was designed to support. Despite this careful timing, a small, temporary dip in CSAT was observed after the feature’s launch, a reminder that these checks inevitably create additional work for product teams. To mitigate this impact, JLP was careful about the pace at which it rolled out new measures and gave teams a mechanism to suppress checks that were not relevant to their specific use case, balancing the need for standardization against the flexibility required for innovation.
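One way such a suppression mechanism might work is sketched below: a team records which measure does not apply and why, and suppressed checks are excluded before the team’s score is calculated. The field names, the requirement to give a reason, and the scoring rule are all assumptions; the source does not describe how JLP implemented suppression.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Suppression:
    team: str
    measure: str
    reason: str  # assumed: recording why keeps suppressions reviewable


def applicable_results(results, suppressions):
    """Drop check results that a team has suppressed as not relevant."""
    suppressed = {(s.team, s.measure) for s in suppressions}
    return [r for r in results if (r["team"], r["measure"]) not in suppressed]


def pass_ratio(results):
    """Fraction of passing checks, or None when nothing applies."""
    if not results:
        return None
    return sum(r["passed"] for r in results) / len(results)


# Hypothetical results for a team running one-off batch workloads.
results = [
    {"team": "reporting", "measure": "standard-pipeline", "passed": True},
    {"team": "reporting", "measure": "resource-sizing", "passed": True},
    {"team": "reporting", "measure": "disruption-budget", "passed": False},
]
suppressions = [
    Suppression("reporting", "disruption-budget", "batch jobs, no long-running pods"),
]

before = pass_ratio(results)
after = pass_ratio(applicable_results(results, suppressions))
print(f"before: {before:.2f}, after suppression: {after:.2f}")
```

Suppressing a check removes it from the denominator rather than marking it as passed, so the score still reflects only the measures that genuinely apply to the team.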

Ultimately, the organization’s experience in refining its developer platform metrics produced a set of core principles applicable to any organization on a similar path. First, measurement is a journey, not a destination. A successful strategy must be dynamic and adaptable, because the metrics that matter when first proving a platform’s viability will likely differ from those that matter years later, when priorities shift toward stability and managing technical debt. Second, listening to the people who use the platform is paramount. Quantitative data such as usage rates does not equate to value; qualitative feedback is essential. High CSAT scores are a positive signal, but proactively asking engineers about their pain points is the only way to gain actionable insights. Finally, the ultimate purpose of measurement should be to enable teams to improve, not merely to generate reports for leadership. When data signals a problem, it should be used as a conversation starter to identify and remove roadblocks, building trust and fostering a virtuous cycle of continuous improvement.