Imagine a world where the most complex simulations and cutting-edge AI models are stalled not by a lack of computational power, but by the sluggish pace of data delivery, creating a bottleneck that has long plagued industries reliant on high-performance computing (HPC) and artificial intelligence/machine learning (AI/ML). In these fields, accelerators like GPUs and TPUs often sit idle waiting for data. Google Cloud has stepped in with a game-changing solution through the introduction of the Google Kubernetes Engine (GKE) Managed Lustre CSI Driver. Designed to bridge the gap between compute power and storage speed, this innovation promises to revolutionize data-intensive workloads. By seamlessly integrating with Kubernetes environments, it offers dynamic, high-throughput storage that meets the demands of modern applications. This development marks a significant stride in optimizing resource utilization, ensuring that powerful hardware operates at peak efficiency. As data continues to grow exponentially, such advancements are not just timely but essential for progress in scientific research, financial modeling, and beyond.

Revolutionizing Data Throughput for Modern Workloads

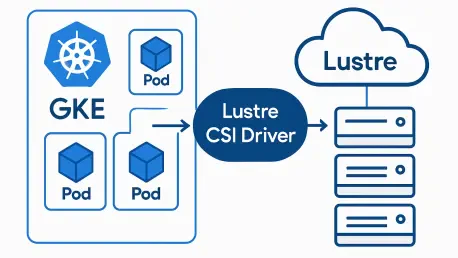

The GKE Managed Lustre CSI Driver stands out as a pivotal tool for addressing the persistent mismatch between compute capabilities and storage performance. Built to integrate with Managed Lustre, a robust parallel file system known for handling massive datasets, this driver enables dynamic provisioning of persistent volumes within Kubernetes setups. Its primary strength lies in delivering exceptional data throughput, which is critical for AI/ML training where rapid data loading can significantly shorten iteration cycles. Similarly, for HPC tasks, it supports the extreme I/O bandwidth needed for intricate simulations. Powered by advanced technology, the driver offers scalable storage options reaching petabytes, with throughput surpassing 100 GB/s per client. This ensures that even the most resource-intensive applications run smoothly, minimizing downtime and maximizing efficiency. Such capabilities make it an ideal choice for industries pushing the boundaries of computational research and data analysis.

Beyond raw performance, the driver brings a level of flexibility that caters to diverse workload needs. With four distinct performance tiers, organizations can tailor storage solutions to match specific requirements, whether for small-scale experiments or large-scale deployments. This adaptability is particularly valuable in fields like pharmaceuticals, where molecular dynamics simulations demand high-speed data access, or in autonomous vehicle development, where processing vast sensor data is a daily challenge. Additionally, compliance with the Container Storage Interface (CSI) standard ensures portability across various Kubernetes distributions, fostering a vendor-agnostic approach. This means enterprises can avoid lock-in and maintain flexibility in their cloud strategies. By simplifying the management of complex storage systems, the driver reduces operational overhead, allowing teams to focus on innovation rather than infrastructure challenges. Its impact on workflow efficiency is already generating buzz among tech communities and industry leaders alike.

Simplifying Complexity in Storage Management

One of the standout features of the GKE Managed Lustre CSI Driver is its ability to streamline the traditionally intricate process of managing Lustre file systems. Historically, such systems required specialized expertise to configure and scale, often posing a barrier to adoption. This new driver automates much of that complexity, enabling seamless integration with GKE clusters through straightforward API enablement and configuration steps. The result is a user-friendly experience that democratizes access to high-performance storage, even for teams without deep technical knowledge of parallel file systems. This automation not only saves time but also has the potential to cut costs by up to 30% in data-heavy environments. For organizations running stateful applications, this translates to faster deployment and more reliable performance, ensuring that critical workloads are not bogged down by storage limitations or manual interventions.

Another key advantage lies in how the driver addresses real-world operational challenges, such as ensuring data security and managing expenses in dynamic environments. Features like encryption at rest provide a layer of protection for sensitive information, which is crucial in shared cloud setups. Meanwhile, tiered pricing models help organizations optimize costs, though careful capacity planning is advised to avoid over-provisioning. The driver’s design also supports efficient checkpointing and rapid output writing, which are essential for iterative processes in AI model development. By reducing idle compute time, it maximizes the utilization of expensive hardware accelerators, offering a tangible return on investment. As industries increasingly rely on Kubernetes for managing AI and HPC infrastructures, this solution positions itself as a cornerstone for scalable, cost-effective data management, paving the way for broader adoption of cloud-native technologies.

Future Horizons in Cloud-Native HPC and AI

Looking ahead, the GKE Managed Lustre CSI Driver is poised to evolve with enhancements that could further solidify its role in cloud-native computing. Planned updates include multi-region support, which would enable seamless data access across geographies, and deeper integration with platforms like Vertex AI for end-to-end machine learning pipelines. These developments reflect a broader trend toward making HPC and AI more accessible in the cloud, breaking down barriers for organizations of all sizes. The driver’s ability to handle massive datasets with ease also aligns with the growing demands of sectors like financial modeling, where real-time data processing can influence critical decisions. As Kubernetes continues to dominate container orchestration, innovations like this driver are expected to play a central role in shaping the future of data-intensive applications, ensuring they keep pace with rapidly advancing computational technologies.

Reflecting on the rollout of this technology, its introduction marked a turning point for many enterprises grappling with storage bottlenecks. The successful deployment in various sectors, from scientific research to autonomous systems, demonstrated its versatility and effectiveness in real-world scenarios. While challenges like cost management and security in shared environments persisted, the built-in features and ongoing updates offered reassurance. Industry enthusiasm underscored a collective recognition of its transformative impact on resource utilization. Moving forward, organizations were encouraged to explore its capabilities through pilot projects, leveraging tiered performance options to match specific needs. Staying informed about forthcoming enhancements, such as expanded regional support, became a priority for maximizing benefits. This driver not only addressed immediate pain points but also laid a foundation for scalable, efficient data management in an era of unprecedented computational demand.