Chloe Maraina is a seasoned expert in business intelligence with a unique talent for weaving compelling stories through the lens of big data. With her expertise in data science and her vision for future data management trends, Chloe provides invaluable insights into the evolution of tech solutions like those of DriveNets. In this interview, she sheds light on the challenges and innovations in AI networking, particularly with DriveNets’ approach to GPU clusters and networking fabrics.

What are the specific challenges associated with connecting GPUs compared to CPUs in AI networking?

Connecting GPUs presents distinct challenges compared to CPUs, mainly because of the different workloads each handles. GPUs need high-bandwidth, low-latency connections to perform well on the parallel processing tasks typical of AI applications. That complicates networking: GPU traffic is not just routine data to be routed, but large blocks of data that must move quickly and efficiently without congestion, so the underlying infrastructure has to be far more robust.
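To give a sense of scale for these large data blocks, here is a back-of-the-envelope sketch. It uses the standard ring all-reduce cost, in which each of N GPUs sends 2(N−1)/N times the gradient size per synchronization step. The model size, 16-bit gradients, GPU count, and 400 Gb/s link speed are hypothetical, and the estimate ignores the overlap of communication with computation that real training frameworks rely on.

```python
# Back-of-the-envelope estimate of gradient traffic per training step.
# All figures below are hypothetical, chosen only for illustration.


def ring_allreduce_bytes_per_gpu(payload_bytes: float, num_gpus: int) -> float:
    """Bytes each GPU sends in a ring all-reduce: 2 * (N - 1) / N * payload."""
    return 2 * (num_gpus - 1) / num_gpus * payload_bytes


params = 7e9          # hypothetical 7-billion-parameter model
bytes_per_param = 2   # gradients kept in a 16-bit format such as bf16
num_gpus = 8          # hypothetical GPU count
link_gbps = 400       # hypothetical per-GPU network bandwidth, Gb/s

gradients = params * bytes_per_param  # 14 GB of gradients
per_gpu = ring_allreduce_bytes_per_gpu(gradients, num_gpus)
seconds = per_gpu * 8 / (link_gbps * 1e9)  # bytes -> bits, divided by bits/s

print(f"Each GPU sends {per_gpu / 1e9:.1f} GB per step, "
      f"about {seconds:.2f} s on a {link_gbps} Gb/s link")
```

Because every GPU transmits at the same time during this exchange, the fabric sees large, synchronized bursts rather than the more varied traffic of many independent CPU applications.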

Can you elaborate on the progress and growth DriveNets has experienced since its inception in 2015?

DriveNets has shown impressive growth, evolving from a newcomer to a pivotal player in the AI networking sphere. Since 2015, they’ve expanded their customer base significantly, serving major players like AT&T and Comcast. Their strategic focus on enhancing networking fabrics for AI workloads has allowed them to fill a critical gap, providing capabilities tailored to the demands of AI-driven data centers.

How does DriveNets’ Network Cloud-AI platform differ from traditional Ethernet-based networking fabrics?

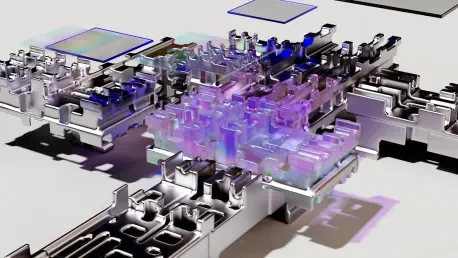

DriveNets’ Network Cloud-AI platform takes a novel approach: instead of a conventional Ethernet Clos fabric, it uses a cell-based fabric architecture. Data packets are segmented into smaller cells, distributed across the network, and reassembled at the other end. Spreading traffic this way streamlines data flow and reduces congestion, which is vital for keeping AI workloads efficient.
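The segment, spray, and reassemble cycle can be sketched in a few lines of Python. This is a simplified model, not DriveNets’ implementation: the 256-byte cell size, the (packet_id, seq, total) header fields, and strict round-robin link selection are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass

CELL_PAYLOAD = 256  # hypothetical cell payload size in bytes, not DriveNets' value


@dataclass
class Cell:
    packet_id: int  # which packet this cell belongs to
    seq: int        # position of the cell within its packet
    total: int      # number of cells the packet was split into
    payload: bytes


def segment(packet_id: int, packet: bytes, size: int = CELL_PAYLOAD) -> list[Cell]:
    """Split one packet into fixed-size cells (the last cell may be shorter)."""
    chunks = [packet[i:i + size] for i in range(0, len(packet), size)] or [b""]
    return [Cell(packet_id, seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def spray(cells: list[Cell], num_links: int) -> dict[int, list[Cell]]:
    """Distribute a packet's cells round-robin across all fabric links."""
    links: dict[int, list[Cell]] = {link: [] for link in range(num_links)}
    for i, cell in enumerate(cells):
        links[i % num_links].append(cell)
    return links


def reassemble(cells: list[Cell]) -> dict[int, bytes]:
    """Rebuild packets at the egress side, whatever order the cells arrive in."""
    pending: dict[int, dict[int, bytes]] = {}
    complete: dict[int, bytes] = {}
    for cell in cells:
        parts = pending.setdefault(cell.packet_id, {})
        parts[cell.seq] = cell.payload
        if len(parts) == cell.total:  # every cell of this packet has arrived
            complete[cell.packet_id] = b"".join(parts[s] for s in range(cell.total))
            del pending[cell.packet_id]
    return complete


packet = bytes(i % 256 for i in range(1500))  # a 1,500-byte packet
cells = segment(7, packet)
links = spray(cells, num_links=4)
arrived = [cell for link_cells in links.values() for cell in link_cells]
random.shuffle(arrived)  # cells on different links can arrive out of order
print(len(cells), "cells over", len(links), "links; reassembled OK:",
      reassemble(arrived)[7] == packet)
```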

What are the benefits of DriveNets’ multi-tenancy and multi-site capabilities for GPU clusters?

The multi-tenancy and multi-site features add significant flexibility and efficiency. For GPU clusters, multi-site support means AI workloads can span several locations, even sites up to 80 kilometers apart, which eases the power constraints of any single site and makes better use of resources across geographic areas. Multi-tenancy, in turn, provides traffic isolation, so each workload sees consistent performance no matter what else is running on the fabric.
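Distance is the main physical cost of spanning sites up to 80 kilometers apart. The rough calculation below assumes light travels through standard single-mode fiber with a group index of about 1.468 and that the fiber route is exactly 80 km (real routes are usually longer). The 800 Gb/s link rate is hypothetical, and equipment latency is ignored.

```python
C = 299_792_458       # speed of light in vacuum, m/s
FIBER_INDEX = 1.468   # assumed group index of standard single-mode fiber
distance_km = 80      # maximum site separation mentioned in the interview
link_gbps = 800       # hypothetical inter-site link rate, Gb/s

one_way_s = distance_km * 1_000 * FIBER_INDEX / C
rtt_s = 2 * one_way_s
# Bandwidth-delay product: the data in flight during one round trip.
# Flow control and buffering have to cover at least this much to keep
# a long link fully utilized.
bdp_bytes = link_gbps * 1e9 * rtt_s / 8

print(f"one-way: {one_way_s * 1e6:.0f} us, round trip: {rtt_s * 1e6:.0f} us")
print(f"in flight at {link_gbps} Gb/s: {bdp_bytes / 1e6:.0f} MB")
```

This works out to roughly 5 microseconds per kilometer one way, the usual rule of thumb for fiber.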

How does the cell-based fabric architecture solve power constraints in AI deployments?

By utilizing a cell-based fabric architecture, DriveNets can efficiently distribute workloads across multiple data centers. This reduces the strain on any single power grid, as the network can span multiple locations, each tapping into its own power sources, thereby mitigating individual site power constraints.

Can you explain the concept of a cell-based protocol and how it compares to the conventional Clos Ethernet architecture?

A cell-based protocol divides incoming data packets into smaller, uniformly sized cells, which are then distributed through the network and reconstructed at their destination. In a Clos Ethernet architecture, hash-based load balancing pins each flow to a single path, so a few large flows can collide on the same link while other links sit idle. Spreading cells evenly across all paths avoids those congestion hot spots, providing a smoother and more reliable networking solution.
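The difference between per-flow hashing and cell spraying is easy to see in a toy model. It assumes eight equally large flows over eight links and uses CRC32 as a stand-in ECMP hash; real switches use vendor-specific hash functions and header fields, so the exact link assignment here is illustrative only.

```python
import zlib
from collections import Counter

NUM_LINKS = 8
CELLS_PER_FLOW = 1_000  # hypothetical: every flow carries the same volume

# Hypothetical elephant flows (src IP, dst IP, protocol, src port, dst port).
# UDP port 4791 is the RoCEv2 port commonly used for GPU-to-GPU RDMA traffic.
flows = [(f"10.0.0.{i}", f"10.0.1.{i}", "udp", 49152 + i, 4791) for i in range(8)]


def ecmp_link(flow: tuple) -> int:
    """Hash-based ECMP: every packet of a flow is pinned to the same link."""
    key = "|".join(map(str, flow)).encode()
    return zlib.crc32(key) % NUM_LINKS


def ecmp_load(flows: list[tuple]) -> Counter:
    load: Counter = Counter()
    for flow in flows:
        load[ecmp_link(flow)] += CELLS_PER_FLOW
    return load


def spray_load(flows: list[tuple]) -> Counter:
    """Cell spraying: cells from every flow are spread round-robin over all links."""
    load: Counter = Counter()
    next_link = 0
    for _ in flows:
        for _ in range(CELLS_PER_FLOW):
            load[next_link] += 1
            next_link = (next_link + 1) % NUM_LINKS
    return load


ecmp = ecmp_load(flows)
spray = spray_load(flows)
print("ECMP : busiest link", max(ecmp.values()), "cells,", len(ecmp), "of", NUM_LINKS, "links used")
print("Spray: busiest link", max(spray.values()), "cells,", len(spray), "of", NUM_LINKS, "links used")
```

Spraying always leaves every link carrying exactly 1,000 cells. With hashing, all eight flows land on distinct links only by luck, and any collision puts 2,000 or more cells on one link while another link sits idle.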

What advantages does DriveNets offer over solutions utilizing Nvidia BlueField DPUs?

DriveNets’ cell-based architecture handles load balancing inside the fabric itself, so it does not depend on specialized hardware such as Nvidia BlueField DPUs to do that job. This software-centric approach can reduce cost and complexity while still managing load efficiently across the networking fabric, and it makes the solution easier for organizations to integrate into existing systems.

How does the multi-site capability help organizations overcome power constraints in single data centers?

By extending GPU clusters across multiple locations, DriveNets lets organizations spread their energy consumption over several sites connected to different power grids. This not only relieves pressure on any single data center’s power supply but also improves resilience and reduces the risks of localized power issues.

Can you describe the typical physical implementation of these multi-site GPU clusters?

Typically, a multi-site GPU cluster is connected using high-bandwidth fiber optic links, such as dark fiber or DWDM (dense wavelength-division multiplexing). DWDM carries many wavelengths over the same fiber, so these links bundle significant bandwidth and act as a single, massive pipeline between sites, keeping data processing continuous and efficient across the distributed locations.

How does DriveNets ensure traffic isolation for AI workloads, especially in the context of Kubernetes?

Traffic isolation is essential in multi-tenant environments to ensure fair resource distribution. DriveNets’ architecture inherently provides this isolation through its cell-based design, so workloads stay efficient even when different Kubernetes containers run on the same infrastructure, without interference from neighboring workloads.

What role does the cell-based fabric play in preventing interference from noisy neighbors?

Because every packet is split into cells that are spread evenly across the fabric, no tenant’s traffic can pile up on a particular link, and data destined for one application or tenant doesn’t intrude on another’s bandwidth. The effect is akin to dedicated service lanes, keeping interference from noisy neighbors at bay.
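The interview does not detail the scheduling mechanism behind this isolation, so the sketch below uses a different, well-known technique as a stand-in: deficit round robin (DRR) over per-tenant queues. It illustrates the property being described, where a tenant with a huge backlog cannot crowd out a quieter one. The tenant names, packet sizes, and quantum are hypothetical.

```python
from collections import deque


def deficit_round_robin(queues: dict[str, deque], quantum: int, rounds: int) -> dict[str, int]:
    """Serve per-tenant queues of packet sizes (in bytes) with deficit round robin.

    Each backlogged tenant earns `quantum` bytes of credit per round and may send
    packets while its credit covers them, so no tenant takes more than its share.
    Returns the bytes sent per tenant.
    """
    deficit = {tenant: 0 for tenant in queues}
    sent = {tenant: 0 for tenant in queues}
    for _ in range(rounds):
        for tenant, queue in queues.items():
            if not queue:
                continue
            deficit[tenant] += quantum
            while queue and queue[0] <= deficit[tenant]:
                size = queue.popleft()
                deficit[tenant] -= size
                sent[tenant] += size
            if not queue:
                deficit[tenant] = 0  # an emptied queue does not bank unused credit
    return sent


# Hypothetical backlog: the noisy tenant has about 41 MB queued, the quiet one 200 KiB.
queues = {
    "noisy": deque([4096] * 10_000),
    "quiet": deque([1024] * 200),
}
sent = deficit_round_robin(queues, quantum=4096, rounds=50)
print(sent)  # → {'noisy': 204800, 'quiet': 204800}
```

Despite holding 200 times as much queued data, the noisy tenant receives the same share of the port as the quiet one.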

In what ways is AI integrated within DriveNets’ products and operations?

AI serves a dual purpose in DriveNets’ ecosystem: it powers smarter network management in their products, and it improves the efficiency of their own operations. By embedding AI in their network control systems, DriveNets optimizes performance and resolves potential issues quickly, enhancing overall operational productivity and service quality.

How has AI-powered network management improved operational efficiency for DriveNets?

AI has substantially improved DriveNets’ operational efficiency, chiefly through assisted root cause analysis. The model, trained on extensive network logs, helps anticipate and resolve networking issues quickly, which reduces downtime and frees the team to focus on optimizing and scaling their services.

What kind of AI tools has DriveNets invested in internally?

DriveNets has invested heavily in AI tools across all departments. This commitment reflects their strategy of making AI a core component of both their technology and their business processes, underscoring their forward-thinking approach to innovation.

How has DriveNets trained its AI model for assisted root cause analysis?

DriveNets’ AI model was trained on an extensive dataset of network logs so that it learns the dependencies between components and the areas where problems tend to arise. This training lets the model conduct root cause analysis quickly, enabling prompt identification and resolution of issues.
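The interview does not describe how DriveNets’ model works internally. As a hedged illustration of why understanding dependencies matters for root cause analysis, the sketch below swaps in a classic rule-based approach instead of a trained model: given a hypothetical dependency graph and a set of alarming components, it reports the alarms that have no alarming dependencies and treats everything downstream of them as symptoms. All component names are hypothetical.

```python
# Hypothetical topology: each component maps to the components it depends on.
DEPENDS_ON: dict[str, list[str]] = {
    "gpu-job-17": ["leaf-3", "leaf-5"],
    "leaf-3": ["fabric-link-3a", "fabric-link-3b"],
    "leaf-5": ["fabric-link-5a"],
    "fabric-link-3a": ["optic-3a"],
    "fabric-link-3b": [],
    "fabric-link-5a": [],
    "optic-3a": [],
}


def upstream(component: str, graph: dict[str, list[str]]) -> set[str]:
    """All components that `component` transitively depends on."""
    seen: set[str] = set()
    stack = list(graph.get(component, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen


def root_cause_candidates(alarms: set[str], graph: dict[str, list[str]]) -> set[str]:
    """Alarmed components with no alarmed dependency are likely root causes;
    the remaining alarms are probably symptoms propagating downstream."""
    return {a for a in alarms if not (upstream(a, graph) & alarms)}


alarms = {"gpu-job-17", "leaf-3", "fabric-link-3a", "optic-3a"}
print(root_cause_candidates(alarms, DEPENDS_ON))  # → {'optic-3a'}
```

A learned model could infer relationships like these from historical logs instead of relying on a hand-written graph.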

Do you have any advice for our readers?

For those looking to navigate the intricate landscape of AI and networking, staying abreast of technological advancements and understanding the interplay between hardware and software is crucial. Embrace the potential of distributed systems and AI to solve complex logistical challenges and drive transformative growth in your endeavors.