Large language models (LLMs) have become a cornerstone of natural language processing (NLP), but their inference efficiency is often limited by memory. The key-value (KV) cache in particular grows linearly with sequence length, which limits how long a context window a model can process effectively. Conventional remedies such as sparse attention mechanisms and off-chip storage provide some relief, but they come with trade-offs such as increased latency or potential information loss. This article explores Tensor Product Attention (TPA), a recently proposed attention mechanism designed to shrink the KV cache and, according to the reported experiments, improve LLM performance at the same time.

The Challenge of Memory Efficiency in LLMs

One of the primary obstacles to scaling LLMs is the memory consumed by KV caches during inference. As sequence length increases, so does the required memory, which restricts the context length and the range of applications a deployment can support. Existing mitigations involve trade-offs. Sparse attention mechanisms attend to only a subset of tokens and can therefore lose information and reduce accuracy, while offloading the cache to off-chip storage adds data-transfer latency. Both make efficient LLM operation harder.

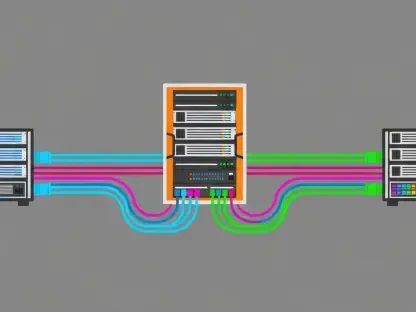

These memory demands create bottlenecks, especially for applications that need long context windows. Standard multi-head attention (MHA) caches a full key vector and value vector for every head at every position, so the cache grows with the number of layers, the number of heads, the head dimension, and the sequence length. As demand for longer sequences grows, this footprint can make LLMs hard to deploy in real-world settings where memory and speed are constrained, which has made more memory-efficient attention an urgent research goal.
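To make the scaling concrete, the short Python sketch below computes the size of a standard MHA KV cache from the quantities the paragraph lists: layers, heads, head dimension, and sequence length, plus batch size and bytes per element. The example configuration is an assumption chosen to resemble a 7B-parameter LLaMA-style model with fp16 storage; it is not a figure from the TPA work.

```python
def kv_cache_bytes(num_layers, num_heads, head_dim, seq_len, batch_size=1, bytes_per_elem=2):
    """Size in bytes of a standard multi-head attention KV cache.

    Every layer stores one key vector and one value vector (hence the
    factor of 2) of length head_dim, per head, for every cached token.
    """
    return 2 * num_layers * num_heads * head_dim * seq_len * batch_size * bytes_per_elem


# Assumed, LLaMA-style configuration (illustrative only): 32 layers,
# 32 heads of dimension 128, fp16 storage (2 bytes per element).
per_token = kv_cache_bytes(32, 32, 128, seq_len=1)
full_ctx = kv_cache_bytes(32, 32, 128, seq_len=4096)

print(per_token / 2**20)  # MiB per token: 0.5
print(full_ctx / 2**30)   # GiB for one 4096-token sequence: 2.0
```

Under these assumptions every token adds half a mebibyte of cache, so a single 4,096-token sequence already needs 2 GiB, and the total grows linearly with both context length and batch size.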

Introducing Tensor Product Attention (TPA)

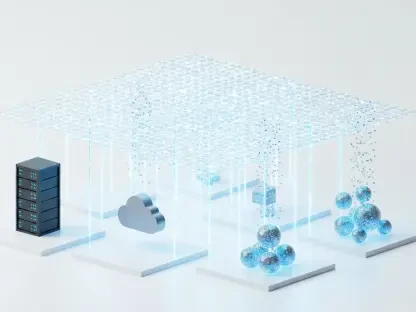

TPA addresses this problem by dynamically factorizing queries, keys, and values (QKV) into low-rank components. Unlike static weight-factorization techniques such as LoRA, which apply a fixed low-rank factorization to the weights regardless of the input, TPA produces factors that depend on the specific input. Concretely, each token's Q, K, and V are expressed as sums of tensor (outer) products of latent factors, and those factors are computed by linear projections of the token's hidden state. Because only the compact factors need to be cached, this substantially improves memory efficiency, and the reported experiments indicate that it does so without sacrificing model quality.
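The factorization can be illustrated with a small, dependency-free Python sketch. It builds one token's per-head tensor (for example, its keys) as a normalized sum of outer products of a head factor and a token factor, each obtained by a linear projection of the hidden state. The 1/R normalization, the toy sizes, the helper names, and the random weights are assumptions made for illustration; a real implementation would use learned weights and batched tensor operations.

```python
import random


def matvec(W, x):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def tpa_factorize(x, W_a, W_b):
    """Return one token's (num_heads x head_dim) tensor, built as
    (1/R) * sum_r a_r(x) ⊗ b_r(x), together with the factors themselves.

    W_a[r] maps the hidden state x to a head factor a_r of length num_heads;
    W_b[r] maps x to a token factor b_r of length head_dim. Because both
    factors are projections of x, the factorization changes with the input.
    """
    rank = len(W_a)
    a_factors = [matvec(W, x) for W in W_a]
    b_factors = [matvec(W, x) for W in W_b]
    num_heads, head_dim = len(a_factors[0]), len(b_factors[0])
    out = [[0.0] * head_dim for _ in range(num_heads)]
    for a, b in zip(a_factors, b_factors):
        for i in range(num_heads):
            for j in range(head_dim):
                out[i][j] += a[i] * b[j] / rank  # accumulate the outer product a ⊗ b
    return out, a_factors, b_factors


def random_matrix(rows, cols):
    return [[random.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
d_model, num_heads, head_dim, rank = 8, 4, 6, 2  # hypothetical toy sizes
W_a = [random_matrix(num_heads, d_model) for _ in range(rank)]
W_b = [random_matrix(head_dim, d_model) for _ in range(rank)]
x = [random.gauss(0.0, 1.0) for _ in range(d_model)]  # one token's hidden state

K, a, b = tpa_factorize(x, W_a, W_b)
print(len(K), len(K[0]))  # 4 6
```

Only `a` and `b` would need to be cached: rank × (num_heads + head_dim) = 2 × 10 = 20 numbers here, versus num_heads × head_dim = 24 for the full tensor. The gap widens considerably at realistic head counts and head dimensions.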

Because the factorization is computed per token, TPA adapts to the input instead of imposing one fixed low-rank structure on every input. The Q, K, and V tensors are assembled from a small number of projected factors, which keeps the cached representation compact while preserving the expressiveness attention needs. The result is a lighter memory burden that still retains the necessary information, allowing the model to operate effectively within tighter memory constraints. This marks a considerable shift from traditional attention mechanisms and lays groundwork for more scalable and efficient LLMs.

Integration with Rotary Position Embedding (RoPE)

A distinctive aspect of TPA is its compatibility with Rotary Position Embedding (RoPE). Traditional low-rank methods struggle with RoPE because RoPE encodes relative position by applying a position-dependent rotation to each query and key, and that rotation does not in general commute with the projections such methods use to reconstruct keys from a compressed cache. TPA overcomes this by pre-rotating the tensor components: RoPE is applied to the factors before they are cached, so inference can proceed directly from the cache while positional information is preserved. This compatibility lets TPA serve as a practical drop-in replacement for multi-head attention (MHA) in widely used architectures such as LLaMA, and the resulting model is called the Tensor Product Attention Transformer (T6).

This compatibility matters because it lets TPA be adopted in existing architectures without extensive modifications. Pre-rotating the tensor components satisfies RoPE's relative-position requirement, so both efficiency and accuracy are maintained. As a result, TPA can be incorporated into current systems with little friction, which makes it a practical option for applications that need scalable, memory-efficient language modeling.
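The pre-rotation idea rests on a simple identity: RoPE rotates pairs of coordinates within the head dimension and is linear, so applying it to every head's row of a ⊗ b gives the same result as applying it once to b. The Python sketch below checks this numerically, assuming RoPE acts only on the head-dimension factor b. The vectors are hypothetical, and the frequency base of 10000 is the common RoPE default rather than a value taken from the TPA work.

```python
import math


def rope(vec, pos, base=10000.0):
    """Apply rotary position embedding to an even-length vector at position pos.

    Each consecutive pair (vec[i], vec[i + 1]) is rotated by the angle
    pos * base ** (-i / d), following the standard RoPE frequency schedule.
    """
    d = len(vec)
    out = list(vec)
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = vec[i] * c - vec[i + 1] * s
        out[i + 1] = vec[i] * s + vec[i + 1] * c
    return out


def outer(a, b):
    """Outer product a ⊗ b as a list of rows (one row per head)."""
    return [[ai * bj for bj in b] for ai in a]


a = [0.5, -1.0, 2.0]        # head factor (num_heads = 3), hypothetical values
b = [1.0, 2.0, -0.5, 0.25]  # token factor (head_dim = 4), hypothetical values
pos = 7

# Path 1: rebuild the full per-head tensor, then rotate every head's row.
rotate_after = [rope(row, pos) for row in outer(a, b)]
# Path 2: rotate only the token factor once (what would be cached), then rebuild.
rotate_before = outer(a, rope(b, pos))

max_diff = max(abs(u - v) for r1, r2 in zip(rotate_after, rotate_before)
               for u, v in zip(r1, r2))
print(max_diff < 1e-12)  # True
```

Because a sum of outer products is handled term by term, the same argument extends to rank R > 1, which is why caching the already-rotated token factors is sufficient.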

Performance and Memory Efficiency

TPA's memory savings relative to standard MHA are substantial. MHA requires a full-size KV cache proportional to the number of heads and their dimensions, whereas TPA caches only the factorized components. Using less memory per token allows much longer sequences to fit within the same memory budget, which makes TPA particularly effective for applications that demand extended context windows, such as lengthy document analysis or real-time language translation.
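The per-token saving can be estimated by comparing what each scheme stores. The sketch below assumes that, for each rank component, TPA caches one head factor of length num_heads and one token factor of length head_dim, for keys and for values, while queries need no cache. The model shape and the ranks rank_k = rank_v = 2 are illustrative assumptions; actual savings depend on the ranks a given model uses.

```python
def mha_cache_elems_per_token(num_heads, head_dim):
    """Elements cached per token per layer by MHA: one key and one value per head."""
    return 2 * num_heads * head_dim


def tpa_cache_elems_per_token(num_heads, head_dim, rank_k, rank_v):
    """Elements cached per token per layer by TPA (assumed layout): for each
    rank component, a head factor (num_heads) plus a token factor (head_dim),
    for both keys and values."""
    return (rank_k + rank_v) * (num_heads + head_dim)


num_heads, head_dim = 32, 128  # assumed model shape
rank_k = rank_v = 2            # assumed TPA ranks (illustrative)

mha = mha_cache_elems_per_token(num_heads, head_dim)
tpa = tpa_cache_elems_per_token(num_heads, head_dim, rank_k, rank_v)
print(mha, tpa, mha / tpa)  # 8192 640 12.8

# With a fixed cache budget, the maximum context grows by the same factor.
budget_elems = mha * 4096   # a budget that holds 4096 tokens under MHA
print(budget_elems // tpa)  # 52428
```

The ratio does not depend on the number of layers or the sequence length, so under these assumptions it carries over directly to the total cache size.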

Smaller memory requirements can also improve the speed and responsiveness of LLMs during inference. Each decoding step must read the cached keys and values from memory, so a smaller footprint reduces the overhead of traditional caching and allows long sequences to be processed more efficiently. Beyond raw performance, this broadens the range of tasks that can run within practical memory limits, letting models handle longer inputs and more complex tasks; the evaluations reported below suggest these gains need not come at the expense of accuracy.

Evaluation and Benchmarking

Researchers evaluated TPA on language modeling with the FineWeb-Edu 100B dataset. TPA consistently outperformed the baseline attention mechanisms: MHA, Multi-Query Attention (MQA), Grouped Query Attention (GQA), and Multi-head Latent Attention (MLA). It converged faster and reached lower final training and validation losses, underscoring both its efficiency and its accuracy.

For instance, in experiments with large models of 773 million parameters, TPA reached noticeably lower validation losses than MLA and GQA, and it achieved better perplexity across multiple configurations. These benchmarks suggest that TPA's theoretical memory advantages come with practical quality improvements, making it a strong alternative to traditional attention mechanisms in LLMs.
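Validation loss and perplexity, both mentioned above, are two views of the same quantity when the loss is the mean per-token cross-entropy in nats: perplexity is its exponential. The sketch below assumes that convention (natural logarithm); the loss values are hypothetical and are not results from the TPA evaluation.

```python
import math


def perplexity(token_nlls):
    """Perplexity = exp(mean negative log-likelihood per token, in nats)."""
    return math.exp(sum(token_nlls) / len(token_nlls))


# Hypothetical per-token losses for illustration; NOT results from the TPA paper.
print(round(perplexity([1.5, 2.0, 2.5]), 3))  # 7.389

# A validation-loss drop of delta scales perplexity by exp(-delta).
print(round(math.exp(-0.05), 3))  # 0.951
```

Because of the exponential, even small reductions in validation loss translate into proportional reductions in perplexity, which is why both metrics tend to move together in such comparisons.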

Success in Downstream Tasks

Beyond pretraining metrics, TPA also performed strongly on downstream benchmarks including ARC, BoolQ, HellaSwag, and MMLU. With zero-shot and two-shot prompting, TPA ranked among the best-performing methods, achieving average accuracies of 51.41% and 53.12%, respectively, for medium-sized models. These results indicate that TPA generalizes effectively across diverse language tasks, supporting its potential for broad use in NLP applications.

Strong results in both pretraining evaluations and downstream tasks point to TPA's robustness and versatility. Consistent performance across benchmarks that test different skills, from science questions (ARC) and passage-based yes/no questions (BoolQ) to commonsense sentence completion (HellaSwag) and broad multitask knowledge (MMLU), suggests that TPA can handle a wide range of language processing requirements. This versatility makes TPA an attractive option for real-world deployments across industries and use cases.

Implications for Real-World Applications

TPA targets one of the main obstacles to deploying LLMs in practice: key-value (KV) caches that scale linearly with sequence length and limit how much context a model can handle. Traditional approaches such as sparse attention mechanisms and off-chip storage ease this pressure but introduce trade-offs such as increased latency or potential information loss.

TPA instead shrinks the cache itself by storing compact, input-dependent factors of the keys and values, and because it remains compatible with RoPE, it can replace MHA in existing architectures. For real-world applications, this means larger context windows within the same memory budget, and in the reported experiments the savings came with lower losses and competitive downstream accuracy. Together, these properties make TPA a promising answer to the memory and efficiency challenges facing current LLMs.